In recent years cascades of agent models have been developed. Almost as many general agent models have seen the light of day, each with the purpose of making all the others superfluous. No self-respecting agent-based computer game omits some kind of artificial intelligence in its agents. However, even today nobody is fooled into believing that the artificial agents are actually human beings, and in the long run the human player learns how to outsmart the agents.

In this chapter a few models are described. They have been chosen to represent different approaches to modelling agents, but also to give an idea of why my project is based on the Will-model, described later. Therefore I include personal remarks on the benefits and drawbacks of the different models.

Computer scientist Bernd Schmidt has designed a general purpose agent model called

Figure 5, the

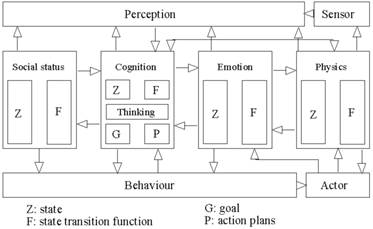

The agent architecture shown in Figure 5 above is divided into four main categories: social status, cognition, emotion, and physics (he calls it physis). Besides these there are a sensor and a perception category at the top, and a behaviour and an actor category at the bottom. I will begin by explaining what is meant by each of these categories, from the top down:

The sensor receives input from the environment without any filtering or interpretation. This includes vision, hearing, touch, and anything else we might be aware of, so communication or signals from other agents are also received here. The sensor is not just a re-router of information; it also receives the physical state of the agent, so it may itself filter or interpret information before the inputs are sent onwards in the system. This allows sensing abilities to vary from one individual to another.

The perception component receives input from the sensor and processes it according to the agent's internal state. Information about the internal state reaches the perception component through connections from the four main categories (shown as arrows in Figure 5). An example of how the internal state influences perception is when you walk through a dark forest and see a monster lurking among the trees because of your expectations and fears. The information received by the perception component is thus interpreted according to expectations and experience, and the newly classified information is then sent onwards in the system to the cognition component.

The cognition component has five separate parts: the state, the state transition function, the thinking component, the goals, and the plans. The state is the actual state of the cognition component, and the state transition function describes the possible changes in this state, so a new state is the result of the present state and the input. The state transition function is connected to the thinking component, which performs reasoning governed by a set of if-then rules. Finally, the goals and plans of the agent also belong to the cognition component. I return to these when I discuss motivation and planning.

The emotion component has a state and a state transition function. As mentioned, it is connected to the perception component and may influence the agent in this way. It is also connected to the cognition and physics components, so they may mutually influence each other.

The physics component represents the physical side of the agent, such as his age, how hungry he is, and so on. The physics component therefore has a state to remember these data and some state transitions. Such a transition describes how the physics of the agent changes over time; for instance, he grows hungrier.

The social status component keeps track of social relationships with other agents. This includes social hierarchies and wealth, but also more descriptive information such as names and family. All this information is stored in the state of the social status component and changes through specific state transitions.
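Each of the four main components is described as a state plus a state transition function that maps the present state and the input to a new state. The sketch below illustrates that idea for the physics component only; the class, the `physics_transition` rule, and the hunger rate of 0.1 per step are hypothetical and not taken from Schmidt's model.

```python
from dataclasses import dataclass
from typing import Callable, Dict

State = Dict[str, float]

@dataclass
class Component:
    """Hypothetical PECS-style component: a state plus a state transition function."""
    name: str
    state: State
    transition: Callable[[State, State], State]

    def step(self, inputs: State) -> None:
        # The new state is a function of the present state and the input.
        self.state = self.transition(self.state, inputs)

def physics_transition(state: State, inputs: State) -> State:
    # Assumed rule: hunger rises by 0.1 per step, eating lowers it, age counts steps.
    hunger = state["hunger"] + 0.1 - inputs.get("food_eaten", 0.0)
    return {**state, "hunger": min(max(hunger, 0.0), 1.0), "age": state["age"] + 1}

physics = Component("physics", {"hunger": 0.0, "age": 0.0}, physics_transition)
for _ in range(3):
    physics.step({})  # time passes: the agent grows hungrier
print(physics.state)
```

The other components would follow the same pattern with their own state variables and transition rules.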

The behaviour component determines the sequence of actions to perform. The four main components, social status, cognition, emotion, and physics, all send action signals to this unit. The behaviour component then has a subunit that determines the agent's deliberate behaviour by comparing the actions to the goals. In this way the agent may be able to learn to some extent, by making new plans that combine the different actions requested by the modules. The behaviour component also contains a subunit that handles behavioural reactions. These are defined by the agent designer and are not subject to any learning. Compared to the discussion in 2.4.1.3 on inbred versus learned behaviour, the reactions in

the

The actor component controls the execution of the actions chosen by the behaviour module. The actor transforms the internal ideas into environment-specific actions, and it also sends out signals to other agents. Thus it is in contact with the outside world, which consists of two elements: the connector and the environment.

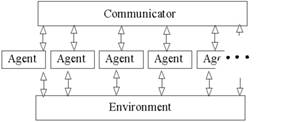

The world of

The environment models all things that are not agents. That could be buildings, trees, and other objects, depending on what kind of model we are making. The

The connector is a general mechanism for communication between agents. Instead of leaving pheromones like ants [19], modifying the environment by emitting sounds, or changing their own appearance with gestures, the agents in

Figure 6, the

As mentioned in the description of the behaviour module, the agent may promote actions for different reasons. Sometimes the behaviour component receives information that fits a predetermined reaction pattern, and the agent will then instinctively submit to this action without any thought process. Schmidt calls these instinctive reactions. Some reactions may be learned through growth or from the environment. In an environment with cars, people learn to hit the brakes instantly if a child runs out in front of them. These are learned reactions. Drive-controlled behaviour has the purpose of fulfilling some of the agent's needs. The drive intensity is the result of how great the need is, environmental influence, and other influences such as curiosity. Drive-controlled behaviour is what we see in swarm intelligence such as ants (see [19]). Lastly, some behaviour is emotion-controlled and is primarily the result of some external stimulation. For instance, a lion will evoke fear in the agent and might cause him to run away. In

Some actions are not simply reactions but may be considered to have a deliberate goal. Therefore the agent may also make plans for how best to behave in the situation to fulfil the goal. For instance, if an agent is hungry it is often a good idea to choose the best food source. Each possible action is given an "act-of-will-value", and the action with the highest value always wins. For planning, the agent makes an internal model of the environment. He inspects this model to make the best plan, but if the model turns out to be wrong his plan may fail and evoke feelings of failure.
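The selection rule described above, where reaction patterns fire without deliberation and otherwise the action with the highest act-of-will-value always wins, can be sketched as follows. The `REACTIONS` table, the additive `drive_intensity` formula, and all action names are assumptions made for illustration, not Schmidt's implementation.

```python
from typing import Dict, Optional

# Hypothetical reaction patterns: stimulus -> action, fired without any thought process.
REACTIONS: Dict[str, str] = {"child_on_road": "brake"}

def drive_intensity(need: float, environment: float, curiosity: float) -> float:
    # Assumption: the three influences named in the text simply add up.
    return need + environment + curiosity

def choose_action(stimulus: Optional[str], act_of_will: Dict[str, float]) -> str:
    # Instinctive or learned reactions bypass deliberation entirely.
    if stimulus in REACTIONS:
        return REACTIONS[stimulus]
    # Otherwise the action with the highest act-of-will-value always wins.
    return max(act_of_will, key=act_of_will.get)

values = {
    "eat_at_near_source": drive_intensity(need=0.6, environment=0.3, curiosity=0.0),
    "explore": drive_intensity(need=0.1, environment=0.1, curiosity=0.4),
}
print(choose_action(None, values))             # eat_at_near_source
print(choose_action("child_on_road", values))  # brake
```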

The

The agent may wander into a dangerous square if he has not visited it before. Then he gets frightened and will for some time look at squares before he enters them. This is the main influence of emotions in the model.
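The fear mechanism above (enter a dangerous square unprepared, get frightened, then look at squares before entering them for some time) can be sketched like this. The `GridAgent` class, the fear value of 1.0 after a fright, and the linear fading of 0.25 per step are hypothetical choices, not parameters from the Adam model.

```python
from dataclasses import dataclass

@dataclass
class GridAgent:
    """Hypothetical agent whose fear makes it inspect squares before entering them."""
    fear: float = 0.0
    fear_decay: float = 0.25        # assumed: fear fades by this amount each step
    caution_threshold: float = 0.1

    def cautious(self) -> bool:
        # While fear is above the threshold, the agent looks before it moves.
        return self.fear > self.caution_threshold

    def enter(self, square_is_dangerous: bool) -> str:
        if square_is_dangerous and self.cautious():
            action = "looked first and avoided the square"
        elif square_is_dangerous:
            self.fear = 1.0  # walking into danger unprepared frightens the agent
            action = "entered and got frightened"
        else:
            action = "entered"
        self.fear = max(0.0, self.fear - self.fear_decay)
        return action

agent = GridAgent()
log = [agent.enter(d) for d in (True, True, False, False, True)]
print(log)
```

Once the fear has faded completely, the agent is again careless and can be frightened a second time.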

Lastly in my short survey of the Adam model, the cognitive component of the agent re-evaluates the plan at every step of the simulation. In this way opportunism is integrated into the model. On the other hand, the model does not allow the agent to stop in the middle of an action, so a short, sudden event could go unattended.

The idea of internal maps is very appealing, and so is the kind of "shared memory" the agents use. The model lives up to its general purpose, as in its basic form it consists only of what is shown in Figure 5 and Figure 6. The model also scores points for its clarity and its clear division into separate components. The transition rules are also comprehensible, as they consist solely of if-then rules. This, however, demands that you are willing to observe (or imagine) a human in all the situations your agents could get into. As mentioned in the survey of the Adam model, the designer has to equip the agent with explicit variables for the things he can learn. This could of course be implemented as some kind of learning algorithm in a more complex environment. If

In the previous subsection 2.4.1 I gave a description of Frijda's view on emotions. In this part of the text I look more closely at one of his approaches to making an agent model, namely the Will architecture. This also involves clarifying Frijda's views on appraisal and action readiness, and introducing concerns.

To understand how emotions work in a human Frijda builds up a model with a number of key properties that are listed in the paragraphs below [35].

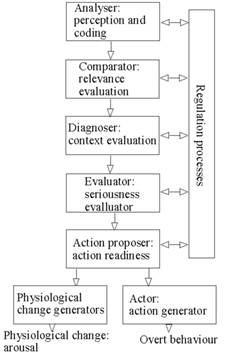

First I present Frijda's more general model aimed at emotions in humans. This model is illustrated in Figure 7. It appears to be a straightforward process, with input entering at the top and resulting behaviour at the bottom of the figure, but on the way there are some internal workings.

Figure 7, the general model.

When input enters, it is perceived, interpreted into some internal form, and then sent to the Comparator. All events are compared to the agent's emotional state.

If the events are relevant they go on to the next component, the Diagnoser, which learns from how the event relates to the current emotions. For instance, if anger is often followed by pain, perhaps the angry emotions need to be regulated.

Then the Evaluator determines urgency, difficulty and other factors that may influence the attention. This refers to the involuntary nature of emotions as they may be difficult to control or hide.

The Action proposer determines action readiness of the agent. Action readiness is here defined in the same manner as in Frijda's emotional theory (see 2.4.1.1), as readying the agent's body for doing the actions that are in the plan for reaching a desired state.

All these components linearly placed below each other are influenced by the Regulator and may influence it in return. The agent is capable of responding to action readiness and thus may inhibit the prepared action.

Lastly the actions result in physical behaviour and also psychological changes. In this way the agent influences what new events will occur.
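The linear flow of Figure 7 can be sketched as a chain of functions, with the Regulator modelled as a threshold that may inhibit the prepared action. The concern strengths, the rule that sudden events double the urgency, and the inhibition threshold of 0.5 are assumptions made for this sketch; the Diagnoser's learning step is left out entirely.

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Event:
    concern: str    # which concern the event touches (hypothetical field)
    sudden: bool    # assumed input to the urgency estimate
    response: str   # the action the event affords

# Hypothetical concern strengths; events that touch no concern are irrelevant.
CONCERNS: Dict[str, float] = {"curious": 0.4, "secure": 0.8}

def comparator(event: Event) -> Optional[float]:
    return CONCERNS.get(event.concern)  # relevance, or None if irrelevant

def evaluator(relevance: float, event: Event) -> float:
    return relevance * (2.0 if event.sudden else 1.0)  # assumed urgency rule

def action_proposer(urgency: float) -> float:
    return min(urgency, 1.0)  # action readiness in [0, 1]

def process(event: Event, inhibit_below: float = 0.5) -> Optional[str]:
    relevance = comparator(event)
    if relevance is None:
        return None  # not relevant: the event stops at the Comparator
    readiness = action_proposer(evaluator(relevance, event))
    # Regulator: a weakly prepared action is inhibited.
    return event.response if readiness >= inhibit_below else None

print(process(Event("secure", sudden=True, response="flee")))
print(process(Event("curious", sudden=False, response="inspect")))
print(process(Event("hunger", sudden=True, response="eat")))
```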

The agent in this simple model has the concerns of being active, curious, and secure, and of surviving, and these are the criteria by which the agent judges events. The Will architecture [16] is based on this model but is focused on the agent as a whole. It revolves around more broadly defined concerns, which I describe next.

David Moffat and Nico Frijda have designed and implemented a model based on the above architecture. This is the Will model [16]. They have designed an open but simple architecture based on the idea that things should be as simple as possible. They call this Occam's Design Principle:

Occam's Design Principle: when presented with a design choice, always opt for the simplest structure that will do the job, be aware that every convenient design "feature" bears a theoretical cost, and never assume more than you have to. In short, one should "design" as little as possible.

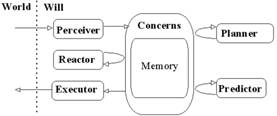

As a consequence of this principle, the Will model is built up of modules, each representing an area of the agent's mind. These modules have very clear purposes, for instance "get input from the environment" and "predict future events". The modules are isolated from each other, so no direct communication may occur between them. The only thing inside the agent that the modules are connected to is the concerns.

The basis of the Will model is concerns: what is relevant and what is not. These are goals such as striving for food, for security, and for dominance over others. At all times the individual will try to fulfil these goals according to the environment, experience, and cognition. When a concern is not satisfied, it generates motives that may lead to actions. In effect, concerns are everything the individual "cares about", not just basic physical needs but also social relationships, politics, future scenarios, and so on. Concerns are described as "representations of desired states" [35]. Hunger is a physical need, and the individual might begin to feel a loss of strength when hunger sets in. In this case it makes good sense to seek food to get out of the bad situation. On the other hand, some concerns do not have this linear relationship with needs. For instance, in the run-up to an election individuals are highly concerned with politics, and they will discuss and agitate to change the outcome of the election. This happens even when people are content with the current political situation and feel no actual misfortune. In this way predicted future events regulate the concerns. As Frijda describes the satisfaction of concerns: "the effect of events for achieving or maintaining the satisfaction states of concerns and, in addition, the anticipated, expected effect of events, the signalled or probable opportunity for such satisfaction, or the signalled or probable threat to it".

The basic needs, hunger, thirst and the like, are the most fundamental for an individual and they determine what "role in life" the person has.

When an event occurs that has relevance according to some concern a signal is emitted and a goal is generated. The signal represents "concern relevance" and it interrupts other processes so that attention may be directed towards the new event. In contrast to concerns that are only activated when there is a problem, goals endure until they are achieved.

As an example, consider an individual whose body temperature should be within a certain interval. He thus has a concern that governs this interest. At some point the individual gets too cold. The concern is awakened and produces a goal to raise body temperature. The planning part of the brain might then create a sub-goal to put on a coat.
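The body-temperature example can be sketched as a concern that stays silent while its variable is inside the desired interval and produces a goal only on a mismatch. The `IntervalConcern` and `Goal` classes, the interval 36.5 to 37.5, the choice of the size of the deviation as the goal weight, and the single hard-coded planning rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Goal:
    description: str
    weight: float
    subgoals: List["Goal"] = field(default_factory=list)

@dataclass
class IntervalConcern:
    """Hypothetical concern: keep a variable within [low, high]."""
    variable: str
    low: float
    high: float

    def check(self, value: float) -> Optional[Goal]:
        # The concern only wakes up on a mismatch; the deviation becomes the weight.
        if value < self.low:
            return Goal(f"raise {self.variable}", self.low - value)
        if value > self.high:
            return Goal(f"lower {self.variable}", value - self.high)
        return None

def plan(goal: Goal) -> None:
    # Assumed planning rule: putting on a coat is one way to get warmer.
    if goal.description == "raise body temperature":
        goal.subgoals.append(Goal("put on coat", goal.weight))

temperature = IntervalConcern("body temperature", 36.5, 37.5)
cold_goal = temperature.check(35.5)
if cold_goal is not None:
    plan(cold_goal)
    print(cold_goal.description, [g.description for g in cold_goal.subgoals])
```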

Since the goals with the highest charge (or weight, as I shall refer to it) have the greatest influence on behaviour, an individual may get stuck with one action if it has the highest weight but does not fulfil the concern. For instance, if an agent feels hunger, one food source might seem better than another. The agent then chooses to exploit this food source, but unfortunately it is already empty. The food source is of course no longer attractive, but the goal of eating from it still persists in the agent's memory (see below). Frijda's solution is the Auto-Boredom principle: if a goal is not re-created by an event, its weight is decremented. In this way the agent will slowly get bored with trying to eat something that does not exist, and after some time other food sources will become more attractive to the agent.
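The Auto-Boredom principle can be sketched as one update per simulation tick: every goal loses some weight, and goals re-created by an event this tick regain the weight that event gives them. The decrement of 1.0 per tick and the starting weights are assumptions chosen only to make the effect visible in a few ticks.

```python
from typing import Dict, List

def auto_boredom_step(goals: Dict[str, float], events: Dict[str, float],
                      decrement: float = 1.0) -> Dict[str, float]:
    """One tick of a hypothetical Auto-Boredom rule.

    Every goal loses `decrement` weight (never below zero); goals re-created by an
    event this tick are restored to at least the weight that event gives them.
    """
    updated = {name: max(0.0, w - decrement) for name, w in goals.items()}
    for name, w in events.items():
        updated[name] = max(updated.get(name, 0.0), w)
    return updated

# The preferred source is empty, so nothing re-creates its goal; the other
# source stays in view and keeps re-creating its goal with weight 3.5.
goals = {"eat at empty source": 5.0, "eat at other source": 3.5}
winners: List[str] = []
for tick in range(3):
    goals = auto_boredom_step(goals, events={"eat at other source": 3.5})
    winners.append(max(goals, key=goals.get))
print(winners)
```

After one tick the agent still prefers the empty source, but from the second tick on its boredom lets the other source win.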

In the architecture the concerns are layered around the memory, as shown in Figure 8. It is not just observed events that need to be matched against the concerns, but also events in memory. In this way predicted or planned events can change even after they have been generated, because a mismatch with the concerns may create new goals and result in new plans or predictions. Also, a plan that has been created might turn out to be worse than previously thought. This is because of the modular structure of the agent (see Figure 8): one part of the brain may see it as a good idea to go inside when the agent is freezing, but the predicting component might realise that the house is about to blow up and thus change the plan.

Figure 8, the Will architecture.

The structure of the memory is not precisely defined in the Will-architecture. Its purpose is to extend the agent so it may act on other factors than just its current input. Memory holds representations of observed past events and also of predicted future ones. Goals are also a kind of predicted event: they are what the agent wishes will happen. In this light it makes good sense to represent the goals in the memory. In this way the agent may have multiple goals that are independent of each other. In Figure 8 the concerns are drawn as a layer around the memory. This illustrates that no data may enter or leave the memory of the agent without being matched against the concerns. In practice this means that all goals are automatically weighted by relevance.

Connected to the concerns are the modules, each of which represents a part of the agent's mind. In practice the model works with all modules running in (some kind of) parallel. Every module constantly grabs the information it needs and evaluates it internally. As a result, the modules write new data, plans, or indirect messages for other modules to the shared memory. Because the modules are separated, the model still holds if some are removed or new ones are added; the agent's mind is then either narrowed or enhanced. For instance, if the agent has no perception his mind still works perfectly; he just does not receive any input from the environment. The architecture is thus very robust, and this is the primary reason why I use it in my project.
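The combination of a concern layer around the memory and isolated modules that only talk through that memory can be sketched as below. The substring-based relevance rule, the concern strengths, and the two example modules are assumptions, and running the modules once in sequence merely stands in for the parallel execution the model describes.

```python
from typing import Callable, Dict, List, Tuple

Item = Tuple[str, str]  # (kind, content), e.g. ("percept", "food ahead")

class SharedMemory:
    """Memory wrapped in a concern layer: nothing enters without a relevance weight."""

    def __init__(self, concerns: Dict[str, float]) -> None:
        self.concerns = concerns
        self.entries: List[Tuple[float, Item]] = []

    def write(self, item: Item) -> None:
        # Assumed relevance rule: add up the concerns mentioned in the content.
        weight = sum(s for name, s in self.concerns.items() if name in item[1])
        if weight > 0:  # data that matches no concern is never stored
            self.entries.append((weight, item))

    def read(self, kind: str) -> List[Tuple[float, Item]]:
        # Heaviest entries first.
        return sorted((e for e in self.entries if e[1][0] == kind), reverse=True)

Module = Callable[[SharedMemory], None]

def perceiver(mem: SharedMemory) -> None:
    for content in ("food ahead", "cloud in the sky", "danger: wolf nearby"):
        mem.write(("percept", content))

def planner(mem: SharedMemory) -> None:
    for _, (_, content) in mem.read("percept"):
        mem.write(("goal", "approach food" if "food" in content else "avoid danger"))

def run(modules: List[Module]) -> SharedMemory:
    mem = SharedMemory({"food": 1.0, "danger": 2.0})
    for module in modules:  # one sequential round stands in for parallel execution
        module(mem)
    return mem

full = run([perceiver, planner])
print(full.read("goal")[0])           # heaviest goal
print(run([perceiver]).read("goal"))  # no planner: no goals, but nothing breaks
```

Removing the planner leaves the rest of the "mind" working unchanged, which is the robustness argued for above.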

The Will architecture has many of the same components as seen in Figure 7. Events in the environment are perceived by a perceiving unit which then interprets the data and writes it to the shared memory. This module is called the perceiver. In Frijda's model the perceiver only has a one-way connection to the memory, so it may change but not be changed by the present memory.

As explained above (3.2.2.1), the perceived data is matched against the concerns before it enters the memory, just as in the basic model in Figure 7. The other modules then have their chance to change or absorb the data before (or while) the executor reads it. Like the perceiver, the executor is essential for the agent to interact with the environment. The executor module controls the agent's output to the environment, such as motor functions and even physical arousal. It should be compared to the actor in the

To fulfil some concerns a single action is seldom enough, and it also seems clumsy if the executor has to make cognitive decisions on the sequence of actions. To fill this gap Moffat and Frijda suggest a planning module that handles these cognitive activities. The planner reads and structures the different goals that other modules have placed in the memory. It might also make sub-goals that are the result of reasoning. For instance, if the agent has a goal to walk to a specific destination, the planner might supply it with a sub-goal to walk around a known obstacle. This would of course require that the executor chooses the sub-goal ahead of the main goal. This could be done either by boosting the sub-goal's weight or by letting the planner keep track of which goals to remove from and insert into the memory. The solution is not implicit in the Will architecture, but my solution is described later (5.2.2.1.4). In a human-like agent planning might seem essential, but to model Popperian creatures (as Dennett defines them, see ) planning is not needed. As long as the agent is able to choose the better of two solutions it qualifies as a Popperian creature. Because of the central role of concerns, this is always true for a Will-agent.
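The first of the two options mentioned above, boosting the sub-goal's weight so that the executor picks it before the main goal, can be sketched as follows. This is not the solution described in 5.2.2.1.4; the margin of 0.1 and the function names are hypothetical.

```python
from typing import Dict, List

def add_subgoal(goals: Dict[str, float], parent: str, subgoal: str,
                margin: float = 0.1) -> None:
    """Hypothetical planner step: insert a sub-goal just heavier than its parent."""
    goals[subgoal] = goals[parent] + margin  # boosting makes it win the next pick

def executor_pick(goals: Dict[str, float]) -> str:
    return max(goals, key=goals.get)  # the executor follows the heaviest goal

goals = {"walk to destination": 0.7, "drink": 0.5}
picks: List[str] = []
add_subgoal(goals, "walk to destination", "walk around obstacle")
picks.append(executor_pick(goals))
del goals["walk around obstacle"]  # sub-goal achieved: remove it from memory
picks.append(executor_pick(goals))
print(picks)
```

A drawback of pure boosting is that the sub-goal must be removed explicitly once achieved, which is where the second option (letting the planner track insertions and removals) comes in.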

Another important module in the Will-architecture is the predictor. The purpose of the predictor is to make assumptions based on the context of the memory. The predictor "is necessary for the agent to be able to understand more about its situation than is immediately evident from sensors" [16]. It thus predicts problems before they arise and then accordingly influences the goals to prepare for them, or avoid them if possible.

The reactor implements direct stimulus-response relationships. Unlike the planner and predictor, this module is not part of cognition; in fact some theorists believe that such a module should connect directly to the executor module. Moffat and Frijda here distinguish between reactions and reflexes: a reflex is simply a stimulus-response wiring, whereas reactions are more intelligent. In the Will-model planned actions and simple reactions are judged in the same way. If a planned action is "heavier" than a natural reaction, it wins, and the reaction will either not show or only appear as a minor secondary action, depending on how the executor is implemented. On the other hand, this also means that other modules may read and change the memory set by the reactor. If the natural reaction is to burp after drinking a soda, another module might quickly lift a hand to the mouth to hide the sound, or even inhibit the burp if it reacts quickly enough. That could not have been the case if the reactor were connected directly to the executor. Then the agent would first have to hear the burp before he could counter-react.
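Because the reactor writes to memory rather than directly to the executor, another module gets a chance to outweigh the reaction. The sketch below plays out the burp example; the weights 0.6 and 0.2 and the module names are hypothetical.

```python
from typing import Dict, List

def reactor(memory: Dict[str, float]) -> None:
    # Stimulus-response link, written to memory instead of straight to the executor.
    memory["burp"] = 0.6

def social_module(memory: Dict[str, float]) -> None:
    # Hypothetical module: a pending burp prompts a heavier "cover mouth" action.
    if "burp" in memory:
        memory["cover mouth"] = memory["burp"] + 0.2

def executor(memory: Dict[str, float]) -> str:
    return max(memory, key=memory.get)  # heaviest action wins; weaker ones may not show

memory: Dict[str, float] = {}
picks: List[str] = []
reactor(memory)
picks.append(executor(memory))  # nothing has intervened yet
social_module(memory)
picks.append(executor(memory))  # the other module's action now outweighs the reaction
print(picks)
```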

Many other modules may be added. As the modules are not interconnected and all communicate through the very general memory, no changes to existing modules have to be made in order to add a new one. One such module could be an implementation of emotions or communication. My modules are described in 5.2.2.

Lastly, computation time is of great significance in the model. The cognitive processes should take longer than simple reactions. If this is not the case, the agent would very seldom react instinctively to events, because he would have computed an inhibiting countermove even before the reaction could take place. Also, if prediction is as fast as planning, we would never see an agent that changes his mind halfway through an action (except when new events occur). Moffat and Frijda give the impression that this will happen automatically, because the cognitive processes are more complex than the reactive ones and the modules run in parallel. This might be true on an old computer with the simple model suggested so far, but with an extended model on a new computer it does not seem likely. For one thing, an emotion module would be at least as complex as the planner. For another, even if the cognitive processes do finish after the reactive ones, on a new computer the difference would only be a fraction of a second and in practice have no significance. Some kind of delaying mechanism seems reasonable if we are to simulate reaction times in such simple agents as Will.
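One possible delaying mechanism is to give every module a fixed latency measured in simulation ticks, so that the order in which results become visible no longer depends on real CPU time. The latency values below are assumptions; the sketch only shows the scheduling idea, using a priority queue keyed on the tick at which each result becomes available.

```python
import heapq
from typing import List, Tuple

# Hypothetical latencies in simulation ticks, independent of real CPU time.
LATENCY = {"reactor": 1, "planner": 4, "predictor": 6}

def schedule(event_tick: int, modules: List[str]) -> List[Tuple[int, str]]:
    """Return (tick, module) pairs in the order their results become visible."""
    queue: List[Tuple[int, str]] = []
    for module in modules:
        heapq.heappush(queue, (event_tick + LATENCY[module], module))
    return [heapq.heappop(queue) for _ in range(len(queue))]

print(schedule(10, ["planner", "predictor", "reactor"]))
# [(11, 'reactor'), (14, 'planner'), (16, 'predictor')]
```

With these latencies the reaction always shows before the planner could inhibit it, and the predictor can still change the plan afterwards, however fast the host computer is.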

Today every research group, if not every scientist, has its own agent model. It is very difficult to pick out a few as representative, but I believe the two described above (

In her book "Affective Computing" [43] Rosalind Picard investigates how emotional thinking and computer science could be combined. She does not present an agent model, but offers some methods and points on how to make emotional computers. Picard uses the terms affect and emotion interchangeably, so her book title should be understood as "computing emotions".

Picard argues that emotions are an important part of intelligence, as they help us decide in ambiguous situations. A person who suffers from brain damage that inhibits his emotions might be expected to work just fine: he would always choose the most rational solution to problems and never get carried away in some irrelevant direction of thought. The reality, however, is different: when faced with a decision, the person begins a complex search of all possible solutions, even in the simplest cases of decision making. Normal factors that would rush an individual to a conclusion, such as the embarrassment of keeping others waiting, do not affect the brain-damaged person at all. This affects his social relationships and therefore degrades his ability to survive, if we think in the Darwinian sense. More importantly, brain-damaged individuals have great difficulty learning from their mistakes and great difficulty weighing danger against difficulty. In all, Picard concludes that emotions are not just side effects of the cognitive functions, and certainly not a drawback for humans. She believes that emotions are a fundamental part of how we as humans work and solve complex problems.

When this view on emotions is applied to computer science, it seems logical that computers would gain much from possessing some kind of emotions. Instead of continuously searching through all possible solutions, emotions could both guide the computations based on the current state and help determine what level of detail is necessary. Picard suggests that emotions in computers could be modelled with rules like those proposed by Ortony et al. (see 2.4.3), combined with formal rule-based logic. To model such emotions, first of all some input is needed. If the computer should be able to read other computers' emotions, or even human emotions, external sensors have to be made. Picard notes that humans are limited by their sensory data in how they interpret emotions. We see, hear, and feel, and thus categorise emotions from these data, but a computer could (and according to Picard should) extend these sensory inputs to include infrared vision, heartbeat and sweat indicators, and any other external device that may supply information about the user's state. This would lead to the categorisation of new kinds of emotions that humans cannot perceive.

However, when a human has to read the mind of a computer, or two computers communicate, it is also important to include emotional information. Compared to speech and gestures, email and today's human-computer interfaces are vastly inferior at transferring emotional data. For computers to be able to express emotions, Picard has six suggestions of things that computers should be able to do:

When considering believable agents, these features seem good guidance. In the case of agents we are dealing with program-to-program relationships, but according to Picard the situation is the same whether it is human-computer, computer-computer, or program-program interaction.

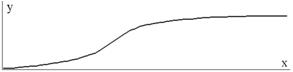

Picard also discusses some more practical aspects of modelling emotions. One is the structure of emotions, but the emotional intensity is also important. Picard's theory is that the intensity of emotions works in the same way as tones from a bell. At first the tone decays fast, but then the curve levels off, as illustrated in Figure 9. If the bell (or emotion) is struck several times, the tones are cumulative and thus make a louder sound (or a more intense emotion). Hit the bell too hard and it will break, as will emotions if they are presented with too extreme input.
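The bell analogy can be sketched numerically: each strike adds a tone that decays, overlapping strikes add up, and a strike that is too hard breaks the bell. The exponential decay shape, the rate 0.5, and the break level 3.0 are assumptions; a plain exponential drops steeply at first and then levels off towards zero, which is only one way to produce the curve described above.

```python
import math
from typing import List, Tuple

def bell_intensity(t: float, strikes: List[Tuple[float, float]],
                   rate: float = 0.5, break_level: float = 3.0) -> float:
    """Hypothetical 'bell' model of emotional intensity at time t.

    strikes holds (time, strength) pairs. Each strike decays exponentially (an
    assumed shape: steep at first, then levelling off), and overlapping strikes
    add up cumulatively.
    """
    total = sum(s * math.exp(-rate * (t - t0)) for t0, s in strikes if t0 <= t)
    if total > break_level:
        # Too extreme an input "breaks the bell" instead of just ringing louder.
        raise ValueError("stimulus too extreme: the emotion breaks down")
    return total

once = bell_intensity(1.0, [(0.0, 1.0)])
twice = bell_intensity(1.0, [(0.0, 1.0), (0.5, 1.0)])
print(round(once, 3), round(twice, 3))
```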

Equation 1 determines the output:

![]()

Equation 1, explained in the text below.

Figure 9, the intensity of emotions. This is a neutral mood.

Here y is the output, the intensity of the emotion, and x is the input, which represents the possible kinds of stimuli that trigger the emotion. The values y0 and x0 move the response curve up/down and left/right respectively. The variable s determines the steepness of the curve, that is, how fast it changes. The variable g controls the overall amplitude of the curve. This value may be coupled to the arousal level of the system.

The mood may be reflected in the value of x0: a high value pushes the curve to the left and should thus be interpreted as a need for less intense stimuli before the emotion is felt. A high value of x0 should therefore be compared to a good mood.

The value of y0 should be interpreted as a cognitive prediction of the outcome. This is meant to model that a predicted positive outcome produces less positive output than an unpredicted one. Conversely, this implies the effect of surprise when something unexpected happens.
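Equation 1 itself is missing from this copy, but the parameter roles described above (input x, output y, offsets x0 and y0, steepness s, amplitude g) match a logistic (sigmoid) curve. The sketch below assumes the form y = g / (1 + exp(-(x - x0) / s)) + y0. That form, and especially its sign convention for x0, is an assumption: under it a larger x0 moves the curve to the right, so the direction may differ from the original equation. Using a negative y0 for a predicted outcome is likewise an illustrative choice.

```python
import math

def emotion_response(x: float, x0: float = 0.0, y0: float = 0.0,
                     s: float = 1.0, g: float = 1.0) -> float:
    """Assumed sigmoid form of Equation 1: y = g / (1 + exp(-(x - x0) / s)) + y0.

    x:  stimulus input        x0: left/right offset (mood)
    y0: up/down offset (cognitive prediction of the outcome)
    s:  steepness             g:  amplitude (possibly coupled to arousal)
    """
    return g / (1.0 + math.exp(-(x - x0) / s)) + y0

# Threshold and saturation: weak stimuli barely register, strong ones level off.
for x in (-4.0, 0.0, 4.0):
    print(x, round(emotion_response(x), 3))

# A predicted outcome (negative y0 here) gives a weaker response to the same stimulus.
print(round(emotion_response(2.0, y0=-0.2), 3), round(emotion_response(2.0), 3))
```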

Velásquez presents a model of how the dynamic nature of different affective phenomena influences behaviour in agents. Such affective phenomena could be emotions, moods, and temperaments. The presented model is called Cathexis, which means "concentration of emotional energy on an object or idea" (I do not see the connection). Like me, Velásquez tries to include different fields of science, namely psychology, ethology, and neuroscience. Cathexis is a very different approach to an agent architecture compared to

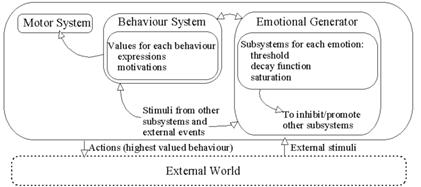

Figure 10, the Cathexis architecture.

Figure 10 shows the architecture. The boxes represent subsystems of the agent that run automatically. The arrows show in which direction information (or stimuli) may flow. In the following I describe how each subsystem works and how they are connected.

The dynamics of emotions, moods, and temperament are based on a functionalist idea: if we copy the processes of how emotions work in humans, we will get an agent with real emotions [33]. The part of the agent in Figure 10 called Emotional Generation is a network built up of many subsystems, each representing an emotion family such as fear or disgust. Within each of these subsystems, sensors monitor both external events and internal stimuli to determine when to elicit the represented emotion.

There are four different kinds of sensors, describing the different ways in which an emotion may be activated. Each subsystem has two threshold values: one that controls the activation of the emotion and one that determines the intensity. The subsystems also have a decay function that determines how long the emotion will last once it has been activated. The subsystems are also linked to each other so that they may inhibit or promote each other.
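A single emotion subsystem with an activation threshold, a limit on intensity, decay, and inhibitory or excitatory links to other subsystems can be sketched as below. Treating the second threshold as an upper bound (saturation), using multiplicative decay, and the link weight of -0.5 from fear to happiness are assumptions, not Velásquez' actual equations.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class EmotionSystem:
    """Hypothetical Cathexis-style subsystem for one emotion family."""
    name: str
    activation_threshold: float  # external stimulus below this is ignored
    saturation: float            # assumed upper bound on intensity
    decay: float                 # fraction of intensity kept from one step to the next
    intensity: float = 0.0
    links: Dict[str, float] = field(default_factory=dict)  # >0 promotes, <0 inhibits

    def update(self, stimulus: float, others: Dict[str, float]) -> None:
        elicited = stimulus if stimulus > self.activation_threshold else 0.0
        cross = sum(w * others.get(n, 0.0) for n, w in self.links.items())
        raw = self.intensity * self.decay + elicited + cross
        self.intensity = max(0.0, min(self.saturation, raw))

def step(systems: Dict[str, EmotionSystem], stimuli: Dict[str, float]) -> Dict[str, float]:
    # Snapshot first so every subsystem sees the same previous intensities.
    before = {n: s.intensity for n, s in systems.items()}
    for name, system in systems.items():
        system.update(stimuli.get(name, 0.0), before)
    return {n: round(s.intensity, 3) for n, s in systems.items()}

systems = {
    "fear": EmotionSystem("fear", activation_threshold=0.2, saturation=1.0, decay=0.5),
    "happiness": EmotionSystem("happiness", 0.2, 1.0, 0.5, links={"fear": -0.5}),
}
trace = [step(systems, s) for s in ({"happiness": 0.6}, {"fear": 0.9}, {})]
print(trace)
```

In the third step fear, although already decaying, is still strong enough to suppress the remaining happiness completely, which shows the inhibition link at work.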

Velásquez looks at both cognitive and non-cognitive sensors that elicit emotions.

As Frijda suggests (see 2.4.1.1.1), Velásquez works with blends of emotions, as several subsystems are allowed to emit emotions at the same time. It is then up to the behaviour system to integrate these different goals.

The behaviour system decides which behaviours are appropriate to display given the current emotional state. The behaviour system includes a predetermined set of actions such as "kiss", "fight", or "smile". Each behaviour has a value that may be considered an action readiness (see 2.4.1.1). The behaviours then compete with each other to be displayed by comparing their values and the highest one determines what actions are executed. The values are constantly updated according to two components:

The value of a kind of behaviour is then the result of how well its motivation- and expression-components fit the desired emotions. The behaviour with the highest value then determines the active action.
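The competition between behaviours can be sketched as scoring each behaviour by how well it expresses the current emotions plus a motivational bonus, and letting the highest score win. The two components are not listed in this copy, so the additive combination, the `EXPRESSES` table, and all weights below are hypothetical.

```python
from typing import Dict

# Hypothetical behaviours and the emotions they express (weights in [0, 1]).
EXPRESSES: Dict[str, Dict[str, float]] = {
    "smile": {"happiness": 1.0},
    "fight": {"anger": 0.9, "fear": 0.2},
    "kiss": {"happiness": 0.6},
}

def behaviour_value(name: str, emotions: Dict[str, float],
                    motivation: Dict[str, float]) -> float:
    # Expression component: how well the behaviour fits the current emotions.
    expression = sum(w * emotions.get(e, 0.0) for e, w in EXPRESSES[name].items())
    # Motivation component: assumed to be a plain additive bonus per behaviour.
    return expression + motivation.get(name, 0.0)

def select(emotions: Dict[str, float], motivation: Dict[str, float]) -> str:
    return max(EXPRESSES, key=lambda b: behaviour_value(b, emotions, motivation))

print(select({"happiness": 0.8}, {}))                # smile
print(select({"happiness": 0.8}, {"kiss": 0.5}))     # kiss
print(select({"anger": 0.7, "happiness": 0.3}, {}))  # fight
```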

Velásquez claims that the model can be used for almost any kind of agent: in computer games, education, human-computer interfaces, etc. To illustrate this he has made an artificial "baby-alarm", which is in fact a baby-like agent. The baby has a small set of behaviours such as sleep, eat, and drink. The emotion generator has some basic drives, such as hunger and fatigue, and some emotion categories, such as surprise and disgust. The user may interact with the baby by changing the environment, for example by turning off the light or feeding the baby. The user is also allowed to modify the internal parameters of the baby directly to experiment with the model. As feedback the baby has six different facial expressions, which are displayed one at a time as an overall interpretation of its state. Each expression simply represents one emotion, and the most strongly charged emotion wins. This is in contrast to Picard's idea that emotions tend to shift into another, similar emotion, making emotional transitions smoother (see 3.3.1).

The strength of the Cathexis model lies in the simplicity of its internal state. The agent has a very limited number of behaviours and emotions, and these have so few internal parameters that they could all be shown on a computer screen. The model is therefore well suited for simulations like the baby agent, where the point is to experiment with the parameters.

The drawback of the model is its non-general architecture. Every new kind of behaviour has to be pre-designed, because behaviours are described at a fairly high level. Compared to the Will-architecture, where the executor is meant to combine behaviours and then come up with an action, the Cathexis model seems to have been meant to have a one-to-one relationship between behaviours and specific motor actions. Further, the Cathexis agent model is made solely for the purpose of modelling the effect of emotions on behaviour. An agent of this kind does not possess any learning mechanisms, and planning is not part of the basic model. If these things were to be included, for instance if the baby should develop over time, it is not clear how such improvements could be made.

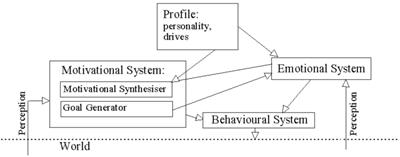

In contrast to Velásquez' Cathexis architecture, which does not include planning or direct agent-environment interaction, Chen et al. suggest a model focused on the actual actions performed by a human-like agent with both mind and body [30]. Like the Cathexis model described in the subsection above, this model is built up of two cognitive systems: the motivational and the emotional system. Besides these there are the agent's general data, such as his personality and which drives he has. The agent also has a behavioural system that combines emotional and motivated behaviours into specific actions.

The emotional system influences almost every part of the agent: what it does, how it does it, bodily posture, and facial expressions. However, it does not initiate actions itself; it only modulates the motor-functions and motives generated in the motivational system. In this survey I will focus on the motivational system, as there is nothing new in the approach towards emotions.

Figure 11, Chen et al.'s mind model.

The motivational system consists of two subsystems: the motivational synthesiser and the goal generator. In the goal generator, motivations are mapped to the corresponding goals. Here a goal is defined in the usual way: "A goal can be conceptualized as a representation of a possible state-of-affair towards which an agent has a motivation to realize" [30]. The arrow in the figure from the goal generator to the emotional system illustrates that goals are also judged emotionally. The goal is also passed to the behavioural system, which brings the agent to action.

The agent has a motivational profile that describes which kinds of behaviours he currently finds most attractive. These motivational categories are: physiological, safety, affiliation (social activities), achievement (achieve goals), and self-actualisation (learning, creativity). These motives work as "boosters" for behaviours, so that the agent is more likely to display some kinds of behaviours than others. The agent thus has a simple personality based on his motivational profile. In Figure 11 this profile is shown in the top box.
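The booster idea can be sketched in a few lines of Python. This is my own illustration, not Chen et al.'s implementation: only the five category names come from the model, while the behaviours ("eat", "chat", "study"), their raw values, and the assumption that a booster multiplies the raw motivation are hypothetical. The sketch also applies the rule that only the strongest motivation drives behaviour.

```python
from typing import Dict, Tuple

# Chen et al.'s five motivational categories.
CATEGORIES = ("physiological", "safety", "affiliation", "achievement", "self-actualisation")


def boosted(raw: Dict[str, Tuple[str, float]], profile: Dict[str, float]) -> Dict[str, float]:
    """Scale each behaviour's raw motivation by its category's booster (default 1.0)."""
    unknown = set(profile) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown categories: {unknown}")
    return {b: strength * profile.get(cat, 1.0) for b, (cat, strength) in raw.items()}


def choose(raw: Dict[str, Tuple[str, float]], profile: Dict[str, float]) -> str:
    """Only the strongest boosted motivation drives behaviour."""
    scores = boosted(raw, profile)
    return max(scores, key=scores.get)


# Hypothetical behaviours: name -> (category, raw motivation).
raw = {
    "eat": ("physiological", 0.5),
    "chat": ("affiliation", 0.4),
    "study": ("self-actualisation", 0.4),
}
sociable = {"affiliation": 1.5}        # hypothetical personality
curious = {"self-actualisation": 1.5}  # hypothetical personality

print(choose(raw, {}))        # eat
print(choose(raw, sociable))  # chat
print(choose(raw, curious))   # study
```

With identical raw needs, the three personalities end up doing different things, which is the whole effect of the profile.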

Only the strongest motivation influences the agent's behaviour. The agent does not possess memory of previous motivations, nor of predicted ones, as the agent in the Will-model does. Opportunistic or planned sub-goals do not exist as such in the model. A sub-goal arises only because it has a higher motivational weight than the current goal. The current goal is then replaced by the sub-goal, not merely delayed, as we would normally think of a sub-goal. The idea is that the original goal still has the highest motivation once the sub-goal is fulfilled, so the agent chooses to continue where he left off. This only works if the model is implemented in a completely deterministic manner and if the environment is not too complex. Take for instance an agent that is as tired as he is hungry. To find his way to the diner he needs a whole series of sub-goals, and equally many to reach the sleeping quarters. The agent would then probably die of insomnia and hunger, because every time he fulfils a sub-goal and nears one location he might just as well choose to find the way to the other.

Another problem is that oscillations can easily occur: as soon as the agent begins to fulfil one goal, another goal may become more attractive, so the agent would change goal all the time. This is a problem all agent-designers face. Frijda, for instance, suggests the Auto-Boredom principle (see 3.2.2.2), and Velásquez simply hopes it does not happen (above). Chen et al. solve the problem by recording the original motivation when a goal-satisfying action begins. As the action decreases the motivation, the agent still compares against the original motivation when determining which goal to pursue. Therefore an agent keeps on doing what he is doing until he is finished or until some other goal has a higher motivational weight than the current goal had at its highest.
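The difference between a memoryless agent and Chen et al.'s rule can be illustrated with a toy Python simulation of my own; it is not Chen et al.'s implementation. The two needs, their integer starting values, and the rates (acting lowers the pursued need by 4 per step, the neglected need grows by 1) are hypothetical. The only difference between the two runs is the threshold a competing goal must exceed: the current, already reduced motivation, or the motivation recorded when the goal was started.

```python
from typing import List


def simulate(committed: bool, steps: int = 6) -> List[str]:
    """Run a toy two-need agent and return the goal pursued at each step."""
    m = {"hunger": 10, "fatigue": 8}  # hypothetical starting motivations
    current, peak = None, 0
    trace = []
    for _ in range(steps):
        if current is None or m[current] <= 0:
            # No goal yet, or the current goal is satisfied: take the strongest.
            current = max(m, key=m.get)
            peak = m[current]
        else:
            challenger = max((g for g in m if g != current), key=m.get)
            # Memoryless: compare with the current (reduced) motivation.
            # Committed (Chen et al.): compare with the value recorded at the start.
            threshold = peak if committed else m[current]
            if m[challenger] > threshold:
                current, peak = challenger, m[challenger]
        trace.append(current)
        for g in m:
            # Acting lowers the pursued need; the neglected need keeps growing.
            m[g] = max(0, m[g] - 4) if g == current else m[g] + 1
    return trace


def switches(trace: List[str]) -> int:
    """Count how often the agent changed goal."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a != b)


print(simulate(committed=False))
# ['hunger', 'fatigue', 'hunger', 'fatigue', 'hunger', 'fatigue']
print(simulate(committed=True))
# ['hunger', 'hunger', 'hunger', 'fatigue', 'fatigue', 'fatigue']
print(switches(simulate(committed=False)), switches(simulate(committed=True)))  # 5 1
```

The memoryless agent changes goal at every step, while the committed agent finishes eating before it turns to sleep.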

The agent model made by Chen et al. is interesting compared to the previous models I have described because of its work on personality and oscillation avoidance. Chen et al. have also made an agent-architecture that models a complete agent in an environment, not just the mind.

The approach to including personality in the agent is very simple and elegant. The idea could be generalised to fit into other agent models that have some kind of predetermined behaviours or goals. Personality would then be a simple schema of which kinds of actions, motivations or goals should be boosted. In the Will-architecture [16], personality could be a list of the predetermined concerns (hunger, thirst etc.), each with a value by which to multiply it. Chen et al. give the impression that personality-values should change over time.

The problem with the previously described Auto-Boredom principle is deciding how long it takes before an agent gets bored with something. Chen et al. take another approach, letting the agent complete his task as long as nothing becomes more important than the task's initial motivation. This is perhaps a bit simple-minded, but it is a technically simple solution to a difficult problem (see 5.2.2.1.4.2).
