Even though much research today is concerned with modelling human-like agents, it is still fairly undiscovered country in computer science. It therefore seems reasonable to take a peek into other fields of science to find a broader perspective. In psychology, for instance, the study of emotions has been under way for the last three decades. Though these studies are far from complete, psychologists have made models that prove very plausible in a computer science setting. Emotions are just one part of the theories of the human mind that I wish to investigate. The other areas are mind and intelligence, two terms that need some more elaboration:

By mind I refer to the self-image humans possess, that is, being aware or conscious, as I will refer to it. My approach to this is through neuroscience (Edelman) and philosophy (Dennett). This is what I look into in the first part of this chapter.

Intelligence is the set of cognitive functions in the brain that produce thought, planning, and so on. Intelligence does not necessarily imply mind. For instance, insects show intelligence but not thought. Intelligence is then how well an individual solves the problems it encounters as it tries to fulfil a goal.

I believe the theories described in this chapter give a good overview of how other fields of science address the human brain and its workings. But of course these are only a small selected fraction of the theories and materials available. The criteria for selection have been recognised importance in the field of science and relevance to my computer-minded ideas. Dennett, for instance, does not suggest a specific model for artificial intelligence but is a major contributor to philosophy. On the other hand, Clore and Gasper, discussed in the "Emotions" part, do not set the scene in psychology, but they have some really nice ideas seen through the eyes of a computer scientist[1].

The sensation of being alive, of knowing one's own existence, is a fascinating and important part of the human brain. As individuals we are not able to detect anything outside the grasp of our mind. We all have our own subjective images of how things are, as the brain receives signals from the senses and then interprets them internally. Is blue really blue to all people? Or the scarier question: am I the only conscious being that exists? This latter idea is the existence of what Edelman [23] calls zombies, people that act exactly as if they were conscious but in fact are not. We would never know.

With consciousness playing a central role in the life of human beings, it seems a must to investigate it further in my study of human-like artificial intelligence. The purpose is not to find answers to the philosophical questions I posed but to pin down whether consciousness has an impact on behaviour, what that impact is, and how the brain supports it.

I have already referred to the brain numerous times as the home of consciousness, and that is a working thesis throughout this project. Theories of the soul and other religious-like ideas do not match my interest in computer science, where I like things to be as concrete as possible. The scientists referred to in this part, Edelman and Dennett, both have this view ([23], ).

The definition of consciousness is often left implicit, and the definitions in use differ widely. Minsky wrote in his book Society of Mind: "as far as I'm concerned, the so-called problem of body and mind does not hold any mystery: Minds are simply what the brains do" [33]. This is an example of an extremely narrow definition of consciousness, where attention is restricted to the ability to sense what is happening within and outside the individual. From this point of view he concludes that humans are not very conscious and that machines are in fact already potentially more conscious than humans. On the other hand, if you look in a dictionary you will find several distinct explanations of what consciousness is, for instance awareness, all the ideas, thoughts and feelings of a person, or being physically active. The last might include houseflies: a hit will make them unconscious for some time, but they might recover consciousness and fly on. Neither Edelman nor Dennett is very good at specifying what he means by consciousness, but as they focus on better-defined particular mental states I believe their ideas are clear. I dare not give a precise definition of consciousness, as I am a stranger in this land looking for some inspiration. However, O'Rourke claims that many AI researchers hold a mind-body theory known as functionalism: if the functional roles of aspects of mentality are reproduced, consciousness necessarily emerges. This means that any system that models the functional structure of the brain automatically possesses all the properties of the mind, including consciousness. Functionalism is quite a dramatic view; take for instance the functionalistic theory of life: "any system that adapts to its environment, metabolises energy, and reproduces, is a form of life".

According to Gerald M. Edelman, neuroscientist, it is not sufficient to describe consciousness solely by its functions, as he and Giulio Tononi claim in the book, A Universe of Consciousness [23]. To find the root of consciousness one has to look into the details of how the brain works as a machine. He believes that the mind is a special kind of process that depends on special arrangements of matter. That means first of all that the mind is a process and that the mind is "just" based on a special combination of matter.

In his book, Bright Air, Brilliant Fire [24], Edelman tries to make a connection between what is known about the mind and what neuroscience has taught us about the brain. This book and the more recent A Universe of Consciousness largely cover the same subjects, and I will mix the contents of the two in the following.

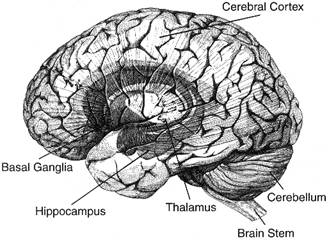

To start with the neuroscience: the brain is first of all an extremely complicated structure, but the material itself is not a special substance. The brain is organised into the areas shown in Figure 1, where the largest area is the cortex, consisting of nerve cells.

Figure 1, the anatomy of the brain ([23], p. 38).

In a human there are about 200 different basic types of cells, and one of those is the neurone, or nerve cell. The neurone differs from other cells in its variable shape, its special electrical and chemical abilities, and the way in which neurones are connected to one another. Neurones receive connections from other neurones at sites called synapses. The number of neurones and possible connections is more than astronomical. Edelman's idea is that with a "machine" as complicated as the human brain it is reasonable that a thing such as consciousness can arise.

The physiological organisation of the brain is strongly connected to the structure of the inner skull. This means that the brain changes structure as the head grows and more or less randomly folds itself into place. Some structures also depend on the death of neurones to form. The point is that the brain is not organised by some finite blueprint in the genes; it is rather a partially stochastic physiological "incident" that determines how the brain is organised. Even though the connectivity of neurones is more or less similar from person to person, these stochastic properties mean that no two people have exactly the same brain structure. Not even identical twins or clones would have the same structure of neurones and connections.

The brain is an example of a self-organising system. In a certain respect it looks like a man-made electrical system, but at the microscopic level it turns out that point-to-point wiring, as in our electrical devices, cannot occur in the brain because the system simply varies too much. This is for Edelman the prime reason that the brain is not a computer. Secondly, he argues that the kinds of signals we could think of in a computer have not been found in the brain. Those are coded signals, which carry meaning in themselves. He also argues that neurones do not carry information in the way an electronic device carries information, though research is far from complete in this area.

In his later work Edelman suggests a model that describes how the brain works. He calls this the theory of neuronal group selection, TNGS. This is an approach that takes

Population thinking is the idea that variation among individuals of a species is the key property of natural selection that eventually leads to the origin of species. In other words, it views the random diversity and mutations among individuals as a wonderful and necessary property of evolution. This should be seen in contrast to descriptions of diversity as nature's error, and to fields of science and theories that do not consider randomness. An example of the first is the fields of physics that do not need stochastic elements to explain, for example, how galaxies form. An example of the latter is naturalists who describe the workings of mind and evolution as a deterministic computer system (see Minsky [33]).

During the development of the brain, neurones branch connections out in almost any direction. All this generates a very varied connection pattern from individual to individual. Then the neurones strengthen and weaken their connections according to their individual electric properties as described previously and the result is that neurones with similar electrical patterns tend to have stronger connections. In other words, neurones with similar properties are grouped by stronger connections. These are the neural groups Edelman refers to in the name of his theory.

In TNGS there are three principles for how the anatomy of the brain is set up. The first two explain what is meant by neural group selection, and the third principle is where Edelman introduces the concept of consciousness.

At the early stages of development the anatomy of the brain is constrained by genes and inheritance. As the embryo matures, the connectivity of the synapses evolves as a result of selection due to the bodily changes. This kind of internal selection, which acts on fitness with respect to changes in the physiology of the individual, is called somatic selection. It is a reference to natural selection, where individuals are selected due to their fitness according to environmental changes. Whereas the unit of selection in natural selection is the individual, in somatic selection the unit of selection is a neural group, as described above, and not the neurone itself. The explanation for this is that no neurone alone shows the properties that are found in a group: a neurone may either inhibit other neurones or excite them, whereas a group may do both.

Certain synapses within and between groups of neurones may change, that is, be strengthened or weakened, without changes in the anatomy. These changes occur due to stimuli from outside the brain such as feeling or vision. Because these stimuli result partly from the environment and partly from behaviour, this may be seen as an evaluation function of how well the individual's behaviour works with the environment.

A recursive structure of input allows the different parts of the brain to interact in a loop of signals. Edelman calls this re-entry. Re-entry is what allows an individual to partition an unlabelled world into objects and events without any "software" specifying how to categorise these things. While the first two tenets provide the basis for the variance and diversity of states that a complex system able to contain consciousness would need, re-entry is the key to combining these states. Re-entry underlies how brain areas co-ordinate with each other so that new functions emerge, and is thus the basis for the bridge between physiology and psychology.

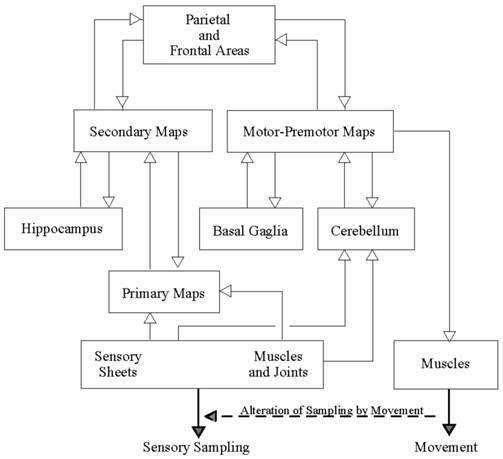

Figure 2, Edelman's diagram of global mapping.

Figure 2 shows Edelman's model of what he refers to as global mapping. Global mapping is the structure that takes care of perceptual categorisation and control of movement. The global map is a dynamic structure containing local maps. These local maps are where sensory input enters the brain, so for instance a hand is connected to a specific region of the brain where the palm occupies one part of this region, each finger its own part, and so on. Such local mappings take place in the cortex (see Figure 2 above). The non-mapped regions (such as the brain stem, see Figure 1 above) are then connected to the local maps in the global mapping. The whole structure of the global map reflects that perception generally depends on and leads to action, as its inputs and outputs are sensors and muscles respectively. When an individual reacts to sensory data, the resulting movement in turn changes the sensory input. In this way the global mapping is a dynamic structure that changes with time and behaviour.

In Figure 2 the fact that arrows from the local maps go both ways is what causes re-entry. It is important to note that re-entry is not a feedback loop; feedback occurs in a single loop where previous information is used for control and correction. In contrast, re-entry occurs over multiple paths with no pre-specified information. Re-entry accounts, for instance, for the ability to track visual objects, which is based on interaction between the brain areas for visual movement and shape.

A last thing I have to mention on the neurology of the brain is some functions that apparently affect the state of the entire brain. A relatively small number of neurones in the brain stem and hypothalamus (see Figure 1) are known as value systems. These neurones project to a huge portion of the brain, and sometimes all of it, through a massive set of fibres. The neurones in a value system seem to fire whenever something important is happening, that is, when something is of interest for the satisfaction of the individual's needs. For example, the satisfaction of hunger will activate value systems and lead to the selection of a number of circuits that perform the eat-action. As the neurone connections strengthen and weaken with their usage, values implicitly determine learning and adaptation.
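To make the last point concrete, the following is a minimal sketch of how a value signal could scale the strengthening of a connection that is in use. It is not Edelman's model: the update rule, the function names `update_weight` and `train`, and the parameters `learning_rate` and `decay` are all my own assumptions, chosen only for illustration.

```python
def update_weight(weight, pre_active, post_active, value_signal,
                  learning_rate=0.1, decay=0.01):
    """Return the new strength of one connection after one time step.

    Hypothetical rule (an assumption, not taken from Edelman): a connection
    is strengthened when both neurones are active together, scaled by the
    value signal, and otherwise slowly weakens from lack of use.
    """
    if pre_active and post_active:
        weight += learning_rate * value_signal * (1.0 - weight)
    else:
        weight -= decay * weight
    return weight


def train(value_signal, steps=50):
    """Use the same circuit repeatedly under a fixed value signal."""
    weight = 0.1
    for _ in range(steps):
        weight = update_weight(weight, True, True, value_signal)
    return weight


strong = train(value_signal=1.0)  # e.g. the eat-action while hungry
weak = train(value_signal=0.1)    # the same activity with little value attached
print(f"high value: {strong:.3f}, low value: {weak:.3f}")
```

Under these assumptions the same amount of usage leaves a much stronger trace when the value system is active, which is the sense in which values implicitly steer what is learned.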

As the neural pathways are affected by changes, memory is created as a trace of brain activity. Thus memory is dynamically generated from the changes in links between different brain circuits. This means both that memory cannot be identified uniquely with any single part of the brain (no "hard-disc") and that it is not a special kind of information. Value systems are not necessary to create a memory, but the probability of memory increases with the activity of a value system.

The exact purpose of the value systems is unknown, but they are extremely important as targets for medication against mental illness. It turns out that even small alterations in the pharmacology of these cells can have drastic effects on global mental function.

The idea is that much behaviour is learned through experience but that values are "hard-wired" as a consequence of evolution.

The memory described is something that does not deliberately try to store information. It is only traces of past usage of neural pathways. Therefore to retrieve past information, to remember, is a creative act that also relies on imagination to fill in the gaps. For a being to have consciousness it is necessary to possess memory in order to relive past moments and perhaps even foresee future events.

Edelman works with two kinds of consciousness, primary and higher-order.

Primary consciousness is found in animals with human-like brains. These beings are able to construct mental scenes but they have no symbolic or true language capabilities. The idea is that primary consciousness emerged from evolution as new re-entry circuits appeared. These circuits should have made a connection between the processes for perceptual categorisation and memory that is based on value systems.

Higher-order consciousness is only known to exist in humans and is an extension of primary consciousness. It contains self-awareness and the ability to construct both past and future scenes. Further, it requires some kind of linguistic ability. In fact the idea is that higher-order consciousness arose as a consequence of the evolutionary appearance of language. Edelman believes that linguistic abilities also bring with them the ability to think and communicate by symbolic means. Once higher-order consciousness begins to emerge, the self can be constructed from social and affective relationships. Self-awareness is the key to abstract thought, and in its furthest form the individual is conscious of its consciousness.

The suggested brain model is organised in a very ordered and comprehensive way without any vague definitions. This makes it reasonable to assume that a computer model could be made that would simulate some workings of the brain model. Edelman argues that in theory it is possible to model a brain that has consciousness, and that in order to understand the vast complexity of the human brain it might be necessary to use synthesising artefacts. Edelman himself has implemented the brain model in his series of Darwin-robots. These are all robots that try to recognise stimuli in the sense that they build up categorisations of what they experience. This is interesting because the real world (like the world of the Darwin-robots) does not have labels attached to objects.

Anyone who wants to make a computer model of the human mind must at least consider how categorisation should occur, and if the aim is a true (and not just believable) model, perhaps Edelman's ideas are the best solution. The results of the Darwin-robots show that classification is possible through behavioural experience and without prior programming. It is, however, necessary to give the robot some priorities, such as that light is better than darkness, or that touch is better than no touch. Some people see such global mapping approaches as the future of neural network research (see [52]), but so far the implemented models have been fairly simple.

Edelman presents a complex theory on the mind and consciousness and in this part of the text I have sketched just a fraction of his ideas. I have given a survey of the neuroscience and physiology of the human brain in order to introduce Edelman's theory on neural group selection. This is a theory that follows

Daniel C. Dennett has written several philosophical books and articles on the subject of consciousness and mind. In this section I will dig into two of these books, Consciousness Explained [13] and Kinds of Minds, in order to give an overview of his ideas. In contrast to Edelman's neuroscience, Dennett's ideas are often simple and sometimes too superficial according to critics (see ). I find his ideas a good counterbalance to Edelman, though he is still a functionalist in some respects (I return to this). He draws on psychology, neuroscience, and computer science, and in that sense he serves as a good representative of a view of mind that could eventually lead to or support computer models.

In his book, Consciousness Explained, Dennett's aim is clearly to explain consciousness. His argument runs throughout the book and is perhaps a bit vague as a whole, but along the way he builds up many interesting theories that I will try to do justice to in what follows.

I mentioned that Dennett is a kind of functionalist (explained in 2.2.1). He does not believe that the human and the brain are just machines, precisely because of consciousness. He claims that even if a machine were made that could mimic the exact behaviour of, for instance, wine tasting and give a clever output, the machine would not enjoy the wine as most humans would. On the other hand he rejects the soul and other Descartes-like mind-body dualism. To Dennett a wine-tasting machine is a zombie in the same sense as Edelman uses the term; the actor has no insight into what it is doing, it simply acts in this way because it is built in this way. So Dennett has placed all his money on explaining consciousness without any miracles, as a unique feature that appears in some things and not in others, even though through the eyes of a functionalist like Minsky they seem alike.

The kind of functionalism Dennett works with he calls teleofunctionalism from "teleology" which is the study of evidence for design in nature. This is to emphasise its grounding in evolutionarily advantageous functions.

When you imagine something it comes into existence according to Dennett, but of course not as a real physical thing. Still, the electrical patterns of your brain will confirm that the thing was definitely perceived. Thus the thing has existed and it must have been rendered in some undetectable mind stuff. Whereas other brain activity normally just happens without you being aware of it, conscious events are by definition witnessed. But the mind is not just where things are witnessed, it is where appreciation happens. This is what makes things matter, so that, for instance, enjoying the wine can occur. Therefore beings without consciousness should not stand trial for the things they do: animals should not be judged by moral values, and sleepwalkers who do bad things should not be punished, because even though their body and brain do harm, the person is not conscious of what he does. The point is that neither animal nor sleepwalker does anything by intention. Their actions are just things that happen, in contrast to conscious doing.

Mind stuff is then the thing that elevates the brain into consciousness. In summary it has the four abilities:

It is the medium where imagination is rendered.

It is the self-image, a thinking thing that is able to refer externally and internally to oneself. It is aware of the physical context of the world and is also able to look into and work with the brain functions.

It is the source of mattering, the reason why we can enjoy wine, hate meatballs, or love ice cream.

It is the home of intentionality and the reason why we act with moral responsibility.

All mental activity consists of intertwining parallel threads of processes of interpretation and elaboration. The input that enters the head is constantly "edited" to present a more coherent picture of the sensory data to consciousness. That is, for instance, why we "see" steady pictures through our eyes, even though the eyes are actually flickering about and the head moves around. The point is that we do not experience the direct sensory input but the effects of some editorial processes.

Perhaps the strongest point in the Multiple Drafts Model is that feature detection is only done once. Dennett claims (much like Edelman) that there is no need to re-represent data in some conscious centre of the brain. This opposes the traditional theory of mind-body dualism that Dennett calls the Cartesian Theatre (see also [46]).

Sensory input that is edited and received in some part of the brain is not necessarily a part of the conscious experience. This information is referred to as the narrative contents, as it is edited material in the brain that is continuously re-edited and combined with new inputs. At any time there are multiple "drafts" of narrative contents at various places in the brain that may all selectively contribute to consciousness. Thus Dennett does not believe that consciousness is the result of a single stream of narrative contents.

A few people suffer from "blindsight". It is a condition where people have a distinct blind spot in the centre of their vision due to damage to their visual cortex. Experiments show, however, that these people can guess when a visual stimulus is presented in their blind spot, despite having no conscious experience of the object. Any slight piece of information, which could be a shadow, works as a probe into the person's stream of consciousness. This would not be cognitive reasoning but, with time, just another way of getting the otherwise missing input from the blind spot. Another example is people who have had the left and right halves of the brain separated surgically. When they are asked to describe an object they can touch but not see, they are not able to describe what they feel, because finger sensation and speech each reside in their own half of the brain. The result is that these people develop methods such as pricking themselves with the object, so that the verbal brain half can pick this up and describe whether it is a pointy thing, or even guess what it is. According to Dennett this means that with time and training people with blind spots or separated brain halves develop new ideas of consciousness.

Some things are constant, like gravity and the basic premises for sustaining life. Such things have been dealt with through evolution in the sense that solutions are hard-wired into our organisms. Examples are the sense of up and down or the mechanisms that organisms have to protect body water. Other things in nature change in cycles, such as the seasons. The evolutionary solution here is also often hard-wired, as some animals develop winter coats and some birds get the urge to fly south. Other changes in the environment are not predictable, as they arise from chaotic systems (see Flake [21]). Faced with such changes, organisms that are able to change themselves have the advantage in evolutionary terms. These are individuals that are able to learn new behaviours and new behaviour patterns, and so they have an advantage over creatures that have to "learn" through time-consuming evolution.

Dennett suggests that a mechanism known as the

The idea of language is to share information. Language must then have developed at some point in evolution where people had become able to adopt more abstract information into their own cognitive processes. To have language is an obvious advantage in an evolutionary sense, but as Dennett claims, language was not hardwired at first; it had to be learned. For individuals to do this there must have been clear benefits in language for the people to see. Dennett believes that language is a way to enhance sensory input: when your brain is limited in dealing with a situation, a request for help might result in new ideas fed back from the environment (another person who knows how to deal with the situation). Perhaps even half a solution to the problem might trigger new neural pathways in the brain that bring the requesting individual to figure out a solution himself. Finally, just hearing the request spoken by oneself might set off a whole new set of cognitive processes that come up with a solution unaided. This is the basis for Dennett's theory of how inner speech has evolved. Because of the benefits to individuals that hear their own requests, evolution has in time produced an internal connection from speech to ear. Such an internal link allows the individual to have private information, but it also has some of the drawbacks of the speech-listen link, such as linearity and the fact that inner speech is constrained by the complexity of the language. Another example of an external link that has become internal is the eyes and our ability to see inner pictures. This should be compared to people who draw things for themselves to understand them better.

Dennett bases this theory of inner links on experience with the brain's ability to find new solutions to old problems. An example is a person with brain damage where other parts of the brain begin to take over the work of the damaged parts.

Dennett believes that even though language has become hardwired, consciousness has not. It is instead implemented in the "software" of the brain, which is the reason why neuroscientists, such as Edelman, cannot find it. This "software" is the result of a cultural evolution as proposed by Dawkins in his book The Selfish Gene [40]. The unit of cultural selection is the meme, a unit of self-replicating cultural traits, such as religion, music and education. Dennett sees consciousness as a complex set of memes. He suggests that it is inner speech that led to the consciousness-"software". He sees this "software" as a "Joycean machine" implemented in the parallel architecture of the brain, and this machine leads to the stream of consciousness. That language is a precondition for this also means that consciousness is a late development in evolution that many (perhaps all) animals do not possess.

Whereas Dennett tries to explain consciousness in Consciousness Explained [13], he explores the possible definitions of "mind" in his book Kinds of Minds: Toward an Understanding of Consciousness. He also tries to specify how we can know and classify a mind when we encounter one. This is mostly based on the stance, or standpoint, we take towards it.

As a philosophical term intentionality is when something's competence is about something else. In this sense a thermostat is an intentional device as it represents both the current temperature and the desired temperature.
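The thermostat example can be written down in a few lines. The following is only a sketch: the class `Thermostat` and its method names are hypothetical and chosen by me for illustration. The point it shows is that the device's two internal values are each about something else, namely the sensed temperature and the temperature to be reached.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical thermostat whose state is 'about' the room it controls."""
    desired: float        # stands for the temperature that should be reached
    current: float = 0.0  # stands for the temperature most recently sensed

    def sense(self, temperature: float) -> None:
        """Update the representation of the current temperature."""
        self.current = temperature

    def act(self) -> str:
        """Choose an action from the two representations."""
        if self.current < self.desired:
            return "heat on"
        return "heat off"


thermostat = Thermostat(desired=21.0)
thermostat.sense(18.5)
print(thermostat.act())
```

Nothing in this sketch requires the device to be aware of anything; intentionality in the philosophical sense concerns only what its states refer to.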

To see people, animals, or machines as intentional systems is one of three techniques we use to understand the behaviour of complex systems in everyday life:

The physical stance is one we apply to objects when we predict how the laws of physics will influence them. Things will fall due to gravity; objects in motion tend to keep on moving and so on. Predictions based on the physical stance are often very confident.

The design stance allows us to understand and predict features about a design. It is what allows us to see the large picture of something instead of implementation details. This is the feature the object was designed for and not what it actually is. For instance "the brewer is making coffee" instead of "heated water runs through a filter filled with crushed coffee beans".

The intentional stance is taken whenever we treat things as if they were rational beings that have chosen their actions by considering their beliefs and desires. The intentional stance is a very robust "tool", as it allows us to make reasonable predictions about very complex systems such as animals and humans. However, there are two problems with the intentional stance that make it risky to use. First, we are non-privileged observers who have to infer the intentions from the observed behaviour alone. Secondly, complex systems are inherently resource-bounded and therefore can only approximate rationality.

Dennett believes that adopting the intentional stance is not just a good idea, but it is the key to unravelling the mysteries of the mind.

He emphasises that the philosophical term intentionality should not be confused with the common term that refers to whether someone's actions are intentional or not.

Much behaviour is neither rational nor irrational but simply automatic and without any form of consideration. Such behaviour mostly seems rational because it is a characteristic designed into the system, as with animals that carry genes from one generation to the next, or a chess-playing machine. Assuming that things are rational thinking beings gives us a way to approximate predictions of future behaviour. The intentional stance lets us assume that the chess-playing machine is really trying to outsmart us and win the game; we can then predict what kinds of moves it would be likely to make next. Without the intentional stance you would simply be baffled every time a counter-move was made on the chessboard, even if you were playing against another human.

Dennett goes through a process of classifying minds to explain how intentionality came about. He calls this "The Tower of Generate-and-Test". The result is a classification of minds at different levels of complexity that I survey next.

What Dennett calls Darwinian creatures are beings that are the product of recombination and mutation of genes. Thus it is the genes that control everything they do, and as such they are hardwired like robots from their conception to behave as they do. Creatures with "smart" behaviour survive to produce offspring more often than other creatures. In the long run this leads to the survival of creatures with "smart" genes and thus to a kind of evolutionary adaptation to environmental changes.
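The generate-and-test character of Darwinian creatures can be illustrated with a toy simulation. This is a sketch under my own assumptions and not something Dennett specifies: each creature is reduced to a single hardwired number, the `fitness` function and its `optimum` are hypothetical, and recombination is left out so that only mutation and selection remain.

```python
import random


def fitness(gene, optimum=0.7):
    """Hypothetical fitness: how close a hardwired trait is to what the environment rewards."""
    return 1.0 - abs(gene - optimum)


def next_generation(population, rng, mutation=0.05):
    """The fitter half survives; each survivor leaves two slightly mutated offspring."""
    ranked = sorted(population, key=fitness, reverse=True)
    survivors = ranked[: len(ranked) // 2]
    offspring = []
    for parent in survivors:
        for _ in range(2):
            child = parent + rng.uniform(-mutation, mutation)
            offspring.append(min(1.0, max(0.0, child)))  # keep the trait in [0, 1]
    return offspring


def mean_fitness(population):
    """Average fitness over all creatures in the population."""
    return sum(fitness(gene) for gene in population) / len(population)


rng = random.Random(0)  # fixed seed so that the run is repeatable
population = [rng.random() for _ in range(20)]
start = mean_fitness(population)
for _ in range(30):
    population = next_generation(population, rng)
end = mean_fitness(population)
print(f"mean fitness: {start:.2f} at the start, {end:.2f} after 30 generations")
```

No individual creature in this sketch ever changes its behaviour; all adaptation happens between generations, which is what separates Darwinian creatures from the later floors of the tower.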

When learning was introduced by evolution, creatures with this ability had a whole new means to try out different behaviours. Dennett calls these Skinnerian creatures after the behaviourist B. Skinner who theorised that inherited ability to condition behaviour could take over when the hardwired behaviour was insufficient. As the different available behaviours are tried out over time, the response from the environment works as a reinforcement mechanism to strengthen this behaviour. This works as the

Evolution is a time-consuming process and learning by experience is downright dangerous. This leads to the extra ability of Popperian creatures: they are able to think an action through before they carry it out. In this way these creatures can predict the outcome of an action and then select only the actions that seem to fulfil the creature's concerns best. The name comes from the philosopher K. Popper, who first came up with this idea. There are many examples of Popperian creatures, as the class includes all mammals, birds, fish, and reptiles. All these creatures have the ability to sort out some actions by testing them against what they know of the environment. It all comes down to whether the creature is able to choose the better of two solutions to a behavioural problem.
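
The selection step of a Popperian creature can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a model taken from Dennett: the one-dimensional world, the positions of food and predator, the three candidate actions, and the weighting in `evaluate` are all hypothetical. The point is merely that every candidate action is tried out in an internal model before any action is carried out in the world.

```python
# Hypothetical one-dimensional world (an assumption for illustration):
# food lies at position 3 and a predator waits at position -2.
FOOD, PREDATOR = 3, -2
CANDIDATE_ACTIONS = {"step_left": -1, "stay": 0, "step_right": +1}


def predict(position, action):
    """Internal model: the position the creature expects to reach."""
    return position + CANDIDATE_ACTIONS[action]


def evaluate(position):
    """Concern function: nearer to food is better, nearer to the predator is worse.

    The weight 0.5 is an arbitrary illustrative choice.
    """
    return -abs(FOOD - position) + 0.5 * abs(PREDATOR - position)


def popperian_choice(position):
    """Generate every candidate action, test it in the model, return the best."""
    return max(
        CANDIDATE_ACTIONS,
        key=lambda action: evaluate(predict(position, action)),
    )


if __name__ == "__main__":
    print(popperian_choice(0))
```

A Skinnerian creature would instead have to execute the actions and wait for the response of the environment, which is exactly the danger the internal test avoids.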

R. Gregory was the first psychologist to theorise on the role of information when creatures develop "smart" behaviour, hence the name of these creatures. The significant thing about Gregorian creatures is their ability to use tools and inventions that other creatures have made or that nature provides. For example, sea otters use rocks to pound shells open and chimpanzees use sticks to catch termites. But not all chimps have learned the skill and this is, according to Dennett, because the relationship goes both ways: it requires intelligence to design and use a tool, and the tool in turn challenges the user's intelligence, as "smarter" behaviours are now available.

This completes Dennett's four-storey tower of creature minds. Language and the ability to modify the environment are further kinds of minds that Dennett sketches but does not place in the hierarchy. Language in Dennett's sense has been explained above. Modifying the environment to make it more suitable for "smart" behaviour is seen in many species besides, of course, humans. Dogs mark their territory, beavers build dams, and ants leave pheromone trails to mark routes to food sources (see [19]). Dennett calls this "prosthetically enhanced minds".

Dennett is not afraid to draw conclusions and he is one of the few philosophers who see the possibilities in AI. This makes his ideas bolder and more comprehensive than those of most other philosophers, which is a good thing if you, like me, are making a belly landing through a sphere of different scientific genres.

The intentional stance clarifies how humans make predictions about the complex environment they perceive. For an artificial intelligent agent the intentional stance has consequences for the internal structure of its mind: when the agent acts in a way that might be intelligent, the observer will overestimate the complexity of the agent's behaviour because of the observer's own intentional stance. For believability this confirms what cartoon animators have known for a long time: humans tend to "fill in" the missing behaviour they see in characters.

As emphasised by Clancey [52], it is still open to debate whether consciousness is a good starting place for understanding cognition. In this part of the text I describe an area of the human mind that has to do with problem solving and, more generally, with how well the individual functions in a functionalistic sense. I cover the following aspects of intelligence: creativity, attention, learning, and collective intelligence. Each is presented in a subsection below.

There are also other aspects of intelligence that might have been included, such as memory, intuition, and the ability to perform multiple tasks at the same time, but I have drawn the line at material that I found interesting and that has been included in the implemented system. Memory, however, is discussed in 5.2.2.1.1.

In the models I present later (chapter 3), cognition refers to the thinking part of the brain, and the same definition holds for this part on intelligence. To be more precise: the cognitive processes in the brain are those that reason on perceived and stored information. Cognitive processes may be conscious, as with thoughts, but also unconscious.

One definition of intelligence is that it is how well problems are solved. In that light all existing things are intelligent because they have solved the problem of not disappearing: an animal is intelligent because it is alive and reproduces, a rock is intelligent because it degenerates very slowly. For this kind of intelligence it is not important how a problem is solved, only that it is solved. It might seem an unreasonable approach to intelligence, but in evolution this is the only thing that matters and therefore it is a part of us. An example of this kind of intelligence that we may experience in the world today is the intelligence of insects, swarm intelligence. As described by Bonabeau et al. [19], insects as a group show signs of intelligence that the individual is obviously not capable of. The problems solved could be getting food or dealing with a foe. An example of the former is ant navigation, which I have described in a preliminary project. An example of the latter is a bee colony that is intruded upon by a wasp: the small bees move close to the foe and vibrate. This generates heat that kills not only the wasp but also many bees. To the individual this does not seem very intelligent, but for the colony it is a solution to surviving.

Creativity is often associated with intelligence. In common language creativity is the ability to construct new situations from existing ones. Some scientists believe that creativity is a matter of making unusual combinations; Boden [31], however, has a more distinctive and precise definition: to be creative it is necessary to produce something that was not possible before. Many creative ideas are surprising not because they are unusual combinations but because they are completely new things. It is not enough to rearrange existing elements to be creative. If it were, a computer would by definition be creative because it combines input to produce a new output. Boden bases this idea on the evidence that humans can be creative without any environmental events or prior things to combine.

That creativity comes from doing things not possible before should be explained further. Every field of thinking, whether it is language, science, or everyday actions, is subject to some rules. Language, for instance, has syntax; science has methodology and different fields. As long as new inventions keep within these structures, they are at most novel ideas. To be creative you have to come up with something that is impossible according to these structures. These structures are part of the conceptual space, which is a set of generative principles, that is, the ways in which things may be investigated and modified. Creativity then comes partly from understanding the conceptual space, because one may then more easily investigate its limits and pathways. Further, creativity needs to extend the conceptual space beyond the prior structure, and this is what is meant by producing something never possible before. One of Boden's examples is that children under a certain age have great difficulty drawing a one-armed man even though they easily draw a two-armed man. It simply depends on how developed the conceptual space is.

Creative acts extend the conceptual space and thus produce situations that have never been possible before. They therefore lead to new concepts and the possibility of new creative actions.

What is the point of a creative computer? At its most ingenious it would produce things that we would neither understand nor fully appreciate. On the other hand, including creativity in a computer might unravel some of the mysteries of human intelligence or, on a smaller scale, simply make the computer appear more believable. Boden is interested in this latter approach, as she is less concerned with the philosophical questions than with whether things appear creative. She believes that computers could be creative as well if they were equipped with the ability to understand and investigate concepts.

It has been possible to make a computer program that improvises very simple jazz music or short melodies, but with greater complexity the amount of memory used and the computation time become the major problem. The solution could be to rely partly on combinational methods and partly on the conceptual methods described here. Genetic algorithms are one such possibility.

The subject of attention as a part of intellectual development has been examined by George Butterworth [22]. He studies human infants at different ages and how good they are at perceiving and sharing the attention of one of their parents. The idea is that attention may hold a key to the origins of human cognitive processes. Today developmental cognitive psychology is not widely used in AI but Butterworth believes this will change. The questions he asks have relevant counterparts in AI. How do babies know where someone else is looking or pointing, and how does guiding the other's attention correspond with social communication? In AI this would tell us both how the agent should perceive and interpret attention-guiding gestures as input and how this could be used as a basis for more advanced two-way communication.

Attention may be shared through the spatial signalling function of gaze and the postures humans may display with their hand, head, or even whole body. These serve as the basis for referential communication in humans. Humans may join visual attention by pointing at an object. To point is in Butterworth's sense to "see" for the other person. He believes that such shared experience is one of the bases to develop language.

He has carried out many laboratory experiments with small children. In the experiments one of the parents was instructed to interact normally with the child. On a given cue the parent would turn and pay attention to one of a set of target objects. The parent would not make any sound or point manually. The result was that the older the infant, the better it was able to follow the parent's attention. Very young infants (6 months old) were able to sense what direction the parent looked in, but not if the target was behind the infant. Older infants would eventually possess more attention-sharing abilities and be able both to detect which target the parent attended to and to turn around if necessary. These are the three kinds of shared attention that Butterworth outlines, the latter more advanced than the former:

Manual pointing is done with the index finger or the arm and so it is specific to the human anatomy. Comprehension of manual pointing develops at about the same time as comprehension of other complex signs. Studies show that pointing is not better at directing attention than gaze is. Pointing is, however, more precise in determining the region of attention. Based on his empirical studies Butterworth concludes that sharing attention is not something that is learned but an inborn, human-specific ability that automatically develops with age. He believes both manual pointing and gazing to be linked to language acquisition, as they have a referential function and thus are a particularly human type of social cognition. In conclusion, communication networks are a fundamental part of humans and the way we think.

Attention-controlling abilities are also present among animals. Whereas attention helps humans in communicative situations, in animals attention is specialised towards the behaviour of the particular species. For example, birds are primarily aware of movement and dogs are particularly sensitive to sounds in the environment.

It is a bit difficult to see a direct connection to AI, but if we aim at modelling a humanlike brain we would at least have to consider the role of attention. As Butterworth suggests, attention-signalling behaviour is a kind of built-in ability humans have to refer to other objects. This could be the basis for relationships among individuals and social interactions.

One view of intelligence is that it is the ability to learn from experience. That is, "more intelligent creatures are better able to learn than less intelligent creatures" [6], as Blumberg puts it. He suggests that learning is a fundamental capability of an autonomous mind. Learning is the agent's ability to modify its beliefs based on experience and it is thus a fundamental requirement for any individual that chooses actions as a direct consequence of desires and beliefs. By desires he means basic needs such as hunger, thirst, social interaction, etc. By belief he refers to how the individual believes the world is: what the individual perceives or imagines to be true. I return to beliefs later in .

Learning is a very broad phenomenon. To clarify and focus Blumberg emphasises two fundamental kinds of things that individuals should be able to learn:

To model learning Blumberg looks at machine learning, or more precisely reinforcement learning. The individual always has a current state that is "a specific, and hopefully unique, configuration of the world as sensed by the creature's sensory system" [6]. At each state there is a set of different actions to choose from and thus the individual has a multitude of state-action pairs. The problem is then to find a sequence of actions that leads to the goal state with as few negative side effects as possible, that is: the agent should learn a control policy for choosing actions that achieve its goal. For example, a hungry agent will have the goal to eat food. Such a goal is defined by a reward function that assigns a reward, a numerical value, to each distinct action the agent may take from each distinct state.

Each time an action is performed a reward or penalty is received that indicates the desirability of the resulting state. To begin with, the agent only gets a reward when it reaches a goal state and the purpose of the reinforcement learning is then for the agent to learn predicted future rewards at intermediate states.

One way to find these predicted reward values is by Q-learning [50]. The strength in Q-learning is that it can acquire optimal control strategies, even when the outcomes of actions are unknown because it does not make any assumptions about the actions.

The following equation is the recursive definition of Q-learning:

$$Q(s,a) = r(s,a) + \gamma \max_{a'} Q(\delta(s,a), a')$$

Here the value of Q(s,a) is the reward received immediately when action a is executed in state s, plus the value of what follows. The immediate reward for executing action a from state s is noted as r(s,a). This is for instance when the agent enters the goal state. The second part of the equation represents the value of the optimal next step from here: $\delta(s,a)$ denotes the state resulting from applying action a to state s. a' is a variable that runs over all possible actions and thus $\max_{a'} Q(\delta(s,a), a')$ is the maximum value of any next possible state. The variable $\gamma$ is the delay (discount) factor and lies between 0 and 1. It determines how fast the rewards decline and so more direct ways to the goal will have the higher accumulated values.

All put together, the equation above will determine a reward-value for each possible action executed from each possible state. The agent should then choose the action that has the highest reward-value because the cumulative future reward will be greatest from there.

In practice, the previously calculated reward-values may be reused when investigating other initial states and actions. This is described in the Q-learning algorithm below, where Q(s,a) should be regarded as a lookup table that has an entry holding the reward-value for each possible state-action pair.

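1. For each s, a initialise table entry Q(s,a) to 0.
2. Begin in state s.
3. Forever:
   - Select one of the possible actions a and execute it. This leads to state s'.
   - Receive immediate reward r.
   - Update the table entry for Q(s,a): $Q(s,a) \leftarrow r + \gamma \max_{a'} Q(s',a')$, where $\gamma$ is the delay factor between 0 and 1.
   - $s \leftarrow s'$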

The variables here stand for the same as in the equation above. The "update"-arrows mean that the data on the left side is overwritten by the data on the right side.

The idea in the algorithm is that the values in the lookup table Q(s,a) are constantly updated. In this way they will converge more and more towards the optimum. The tricky part is how to select which action to execute. This could of course be done randomly but with some knowledge about the environment and actions it is often possible to come up with better strategies.
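
As a minimal sketch of the table-based procedure, the following Python code runs Q-learning on a small corridor world. Several things here are assumptions made only for illustration and are not taken from Blumberg or from [50]: the five-state corridor, the reward of 100 for entering the goal, the delay factor of 0.9, and the update in which the table entry is overwritten by the immediate reward plus the delay factor times the best table value of the next state. Actions are selected at random, the simplest of the strategies mentioned above.

```python
import random

# Hypothetical corridor world: states 0..4, state 4 is the goal.
N_STATES = 5
GOAL = 4
ACTIONS = (-1, +1)  # step left, step right
GAMMA = 0.9         # delay (discount) factor, an assumed value


def step(state, action):
    """Deterministic transition: returns (next_state, immediate_reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 100.0 if next_state == GOAL else 0.0
    return next_state, reward


def q_learning(episodes=500, seed=0):
    """Fill the lookup table Q(s,a) by repeated random exploration."""
    rng = random.Random(seed)
    # One table entry per state-action pair, initialised to 0.
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = rng.randrange(GOAL)      # start anywhere except the goal
        while s != GOAL:
            a = rng.choice(ACTIONS)  # random action selection
            s_next, r = step(s, a)
            # Overwrite the entry: immediate reward plus the discounted
            # best value currently stored for the next state.
            q[(s, a)] = r + GAMMA * max(q[(s_next, b)] for b in ACTIONS)
            s = s_next
    return q


def greedy_action(q, state):
    """The action with the highest stored reward-value in this state."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


if __name__ == "__main__":
    table = q_learning()
    for s in range(GOAL):
        print(s, greedy_action(table, s), round(table[(s, +1)], 2))
```

In this deterministic world the table values only grow until they settle, so the values for stepping towards the goal fall off by the delay factor for each extra step of distance. A smarter selection strategy than `rng.choice` would mainly reduce the number of episodes needed.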

In our everyday life emotions play a large role, perhaps even larger than rational thought. Therefore it seems necessary to include some theories of emotions in this project. This part covers some modern theories on emotions that may be beneficial to AI. The theories I describe are all major contributors to emotional AI but they have also been selected due to their relevance to my project.

Nico H. Frijda's book, The Emotions [36], was one of the first and still is one of the best attempts to get a hold on what emotions are. In this part I present a selected survey of Frijda's ideas. The emphasis is on the definition of emotions and how Frijda believes they work with behaviours. First I try to clarify what emotions are in the psychological sense. Then I partition the subjects into emotional behaviour based on logic and illogical behaviour due to emotions. Next I describe Frijda's view of how emotions evolve over time. Finally some broader perspectives are drawn up.

In the book Frijda is only concerned with emotions in modern humans and does not dig deeply into the evolutionary aspects of how emotions have come about. However he does mention

I use Frijda a lot in this text because I base my project on his Will model described in the next chapter.

The everyday usage of the term emotion is a bit vague. Is hunger, for instance, an emotion? The simple answer is that emotions are the expressions we would normally perceive in other people, such as fear, anger, and happiness. Frijda is looking for a better and more precise definition of emotions. What he observes is that people under the influence of some of these phenomena called emotions act differently from what you would expect on purely logical grounds. This is when people begin to act due to some internal mechanism instead of in response to the environment. It is also the case when people seem to choose inefficient solutions to situations when more efficient ones are both at hand and clearly visible. The latter should be compared to Dennett's Popperian creatures, which are able to choose the better of two behaviours to reach the same goal (explained in 2.2.3.3.2.3). Thus Frijda's goal is to find a more useful definition of emotions and his method is to investigate and categorise emotions with no reference to the diffuse everyday usage of the term.

Frijda has his own term for the urge to do a specific action, namely action readiness. Emotional experience is defined as an awareness of action readiness of a passive and action-control-demanding environment. This includes action readiness to change or maintain relationships with the environment.

There are two viewpoints on emotions according to Frijda. In the first, emotions are the result of some multidimensional state space. One of the axes in such a space could be pleasantness-unpleasantness. With such a model the emotions we define, such as anger and sadness, are only diffuse regions of the space: there are no clear boundaries. The second viewpoint considers emotions to be a set of discrete emotions and their combinations. That is, emotions are viewed as separate instances with no apparent connection to each other. Even though these two views initially seem far apart, Frijda argues that the differences are in fact minor. The discrete approach brings about specific emotions that may be sorted according to their similarities and differences. For instance, hope and joy are close when compared by the openness of the experiencing subject but far apart when compared by certainty. In this way a multidimensional array may be set up with the discrete emotions all sorted into place. As these discrete emotions may be combined, the picture now begins to look more like the first viewpoint, where the emotions are located as regions in a continuous space.
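
How the discrete view turns into the dimensional one can be sketched in Python. The sketch is an assumption-laden illustration and not Frijda's model: the three axes, the four emotions, and every coordinate are hypothetical numbers chosen only so that hope and joy agree on openness and differ on certainty, as in the example above.

```python
import math

# Hypothetical axes and coordinates in [-1, 1]; the numbers are illustrative only.
AXES = ("pleasantness", "openness", "certainty")
EMOTIONS = {
    "joy":     (0.9, 0.8, 0.9),
    "hope":    (0.6, 0.8, -0.5),
    "anger":   (-0.7, -0.4, 0.6),
    "sadness": (-0.8, -0.6, 0.4),
}


def axis_gap(first, second, axis):
    """Distance between two discrete emotions along a single axis."""
    i = AXES.index(axis)
    return abs(EMOTIONS[first][i] - EMOTIONS[second][i])


def blend(first, second, weight=0.5):
    """Combine two discrete emotions into a point lying between them."""
    return tuple(
        (1 - weight) * x + weight * y
        for x, y in zip(EMOTIONS[first], EMOTIONS[second])
    )


def nearest(point):
    """The discrete emotion whose coordinates lie closest to the point."""
    return min(EMOTIONS, key=lambda name: math.dist(point, EMOTIONS[name]))


if __name__ == "__main__":
    print(axis_gap("hope", "joy", "openness"), axis_gap("hope", "joy", "certainty"))
    print(nearest(blend("anger", "sadness", weight=0.1)))
```

Because `blend` can produce any point between two emotions, the named emotions end up as regions around their coordinates rather than as isolated instances.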

On the other hand, desires and enjoyments are examples of emotional states that cannot be considered to form a class of emotions on the same plane as emotions like anger and fear, as they are less articulate. Still they make up a large share of human emotional responses. These are the core components: they decide what makes or does not make a situation an emotional one, as they determine the emotional relevance. Besides core components there are context components and object components.

Context components are action relevance components, as they determine how the individual feels he may act in the situation. Context components may thus work as an inhibitor for the core components if a situation seems too difficult or dangerous to react to. Examples of context components are certainty, strangeness and change.

Emotional experience and the responding emotional behaviour also depend on the emotional objects besides the events. These are object components such as jealousy and envy.

Some believe that showing emotions is actually preparation for action. As you drop your jaw in astonishment or show teeth to a foe you are actually mobilising your muscles so they are ready when you have to take action. This is in some cases a reasonable explanation for the reaction patterns we have to emotions but it seems to fall short of explaining all emotions.

Emotions often lead to relational activity: they change the relationship between subject and object. Where non-emotional behaviour is mostly about changing the environment or inspecting the object of attention, emotional behaviour primarily leads to a change of the individual's state. This is a self-centred behaviour where the purpose is to alter the perceived input without any regard to whether the relationship to the object has actually been changed. Thus when we close our eyes towards a danger this emotional behaviour gives a sense of the problem going away. Unfortunately, when we open our eyes the monster is still there.

Some expressive behaviour clearly has a purpose. For instance, we instinctively close our eyes at a loud sound.

Our focus of attention is very important for the interpretation of behaviour. I mentioned above how people close their eyes when presented with a fearsome object. This is one extreme reaction, simply to close one's eyes. The other extreme is to stare wildly at the object as a very angry person would. The gaze is just one of the functions people have to control what stimuli they perceive (gazing and attention are also investigated in 2.3.3). The bodily posture also works to inhibit or enhance stimuli. A person who fears something will also tend to hold his arms together and perhaps even sit down and bow his head. This gives a further sense of comfort as it keeps the dangerous stimuli out and protects vital body parts.

A person in deep concentration has a steady stare and a lowered forehead with eyebrows that almost block the line of sight. Frijda proposes that this has a purpose, as it helps keep other stimuli out of mind. This is an example of a directional emotional behaviour that has a clear subject.

Humans know how to read the emotions of others by recognising their behaviour and expressions, but Frijda believes it works the other way around too. His idea is that some expressions and behaviours have come to be the way they are due to how other people react to them. These are known as interactive expressions because they are designed to send a signal to the observers. An example of an interactive expression is the angry stare. It is specifically directed towards one person and somehow has an intimidating effect on the victim. The fixed stare in itself is not logical. If you are in a combat situation your gaze ought to wander around to spot other enemies that might also engage in the fight. But the drastic, bold behaviour of walking straight at your opponent while staring intensely into his eyes is a logical move to frighten the victim off. Other examples of interactive expressions are direct attempts to get attention through means such as crying or screaming. When a monster jumps out of a bush and you scream it makes good sense, as you are actually shouting for help, but still it does not make the monster go away.

The first is that stimuli are generalised so that they result in behaviour in relation to some idealised object. This means that the person chooses the behaviour that he believes is right for the situation, but his idealised view of the situation might be wrong. The person then reacts oddly because his image of the situation is simply too blurred to come up with a better response.

The second reason why some improper behaviour may arise is the "shoot first, ask questions later" principle. The idea here is to overreact without regard to further details that could have proven the behaviour useless.

Examples of specific emotions are joy and excitement. Joy is a strange emotion that Frijda has difficulty categorising. Frijda describes joy as an "excess of movement" but apparently joy behaviour has no purpose in itself. It is a mood that influences other behaviour as it boosts action-readiness for all other relational activity. Moods are then emotions without specific subject-object relationships. Contrary to joy, excitement has blocking behaviours. It is also a boost of action-readiness but only for actions related to one specific event. Thus in Frijda's terms excitement is not a mood (Gasper and Clore, 2.4.3, also have a similar definition of mood).

For an observer it is easy to read the intensity of an emotion. A person may be at rest, still, or calm for instance. The intensity of emotions differs not just from one situation to the other but also changes over time and due to new events. Frijda suggests that response patterns are "used up", that is, they decay over time. A real emotion is thus not a phenomenon that discretely pops up and disappears again; rather, it fades away. An example is a fake smile compared to a genuine one. The fake smile will disappear instantly after its cause has disappeared, whereas the real smile lingers for some time after. Another thing Frijda emphasises is the fact that once an emotion has begun it may be hard to stop again. As an emotion lingers once it has emerged, it is very hard to control its further flow. Thus if a person allows himself just a single tear it seems almost impossible to stop the rest from coming as well. This is of course because the person has kept some of his emotions unattended or inhibited. Reactions such as yawning or even emotional states may also be influenced by the surroundings. If one person starts to yawn then the other people around him are likely to do so as well.

When Frijda refers to inhibition he means the human capacity not to let go of one's emotions, that is, to inhibit emotions through self-control. Without this capability people would be slaves to their emotions and never get to do anything but what they "feel like". Perhaps people would die of hunger because they would complain about the hunger instead of finding something to eat. Thus the individual always has an internal flux of emotions. In this sense flux is the changes in relationships between the individual and the environment, as Lazarus puts it (after this survey of Frijda's theories I look at Lazarus [41]). Frijda does not discuss whether people could live without emotions.

As emotions are extended in time they may also change with time. A long-lasting disgust may with time change character and become hatred, not because the relationship between the individual and the object has changed but solely because of the duration. These instances, which Frijda calls responding and transaction, are due to an unchanged event. So it is not the emotion that changes but the influence of the event that changes over time. This is important because we then have two different emotions working at the same time, if we stick to the disgust-hate example. What happens is that the emotion of disgust lives its own life, either fading over time or getting strengthened by the unchanged event. At the same time the hate towards the object is nurtured as a result of the time span. If the disgust fades away or the event stops (the disgusting object is removed) the person is left with hate towards the object. In effect, an individual who has found one object disgusting might experience hatred the next time he encounters such an object.

Conclusively emotions may grow or shrink in intensity over time. The time span itself in which an event takes place may nurture new emotions that are perhaps at the given time "invisible" because the primary emotion is far stronger. Once the event is over and the primary emotion vanishes the individual might be left with a longer lasting secondary emotion.

There are many different ways to reach a goal. Some are learned within the life span and others are in the genes as "primitive" behaviours. Dennett would say that these "primitive" behaviours are "smart" moves that have moved into the genes. It seems that emotions are partly genetic and partly learned as children and blind people do not have the same expressive gestures as other people. The ability to learn and communicate emotions means that we do not have to experience things first hand in order to understand them and adopt their response patterns.

In the best Darwinian sense, if we have inborn emotions they must have given us some kind of evolutionary advantage over people with no emotions. Frijda concludes that if emotions are a part of biology their main purpose is to help the individual survive. Some of these survival advantages are emotions to cope. These emotions have the purpose of helping people comprehend extreme situations that would otherwise have been outside their conceptual scope. This is when things happen that are more vicious or complicated than imaginable or simply do not have any logical explanation. Then, for the person not to break down or ignore the event, the emotions to cope give some sort of response pattern.

This is one example of emotions that directly take over the behaviour of the individual, but emotions also influence the daily cognitive processes in our brain. Memory and thoughts are also influenced by emotions, as Frijda puts it: "Under appropriate conditions, implausible stories are considered possible or likely if they foster hope or hate; dubious sources of information are believed" [36]. This introduces the concept of belief as a partly emotionally governed focus on information. Belief is a major subject in psychology and I will try to clarify this in .

Frijda sees emotions as several processes growing and shrinking independently and not as some undefined substance. His idea of action readiness sounds very much like parameters in a machine (or a computer simulation) that raise and lower the likelihood of some mechanism getting started. Because emotions may be combined to fill a continuous state space, the number of different discrete emotions one is working with is not of great importance. In modelling human emotions this means that if basic emotions and their combinations are supported then we have a very suitable picture.
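
The reading of action readiness as machine parameters can be made concrete with a small Python sketch. It is a sketch under stated assumptions rather than Frijda's own formulation or the Will architecture: the class `EmotionProcess`, the exponential decay with a half-life, and the fixed threshold are all hypothetical choices made to show independent processes whose intensity is raised by events and fades gradually over time.

```python
class EmotionProcess:
    """One emotion modelled as an independent intensity that fades over time."""

    def __init__(self, name, half_life, threshold):
        self.name = name
        self.half_life = half_life  # time for the intensity to halve (assumed)
        self.threshold = threshold  # intensity at which action starts (assumed)
        self.intensity = 0.0

    def stimulate(self, amount):
        """An event raises the intensity of this emotion only."""
        self.intensity += amount

    def tick(self, dt=1.0):
        """Let the intensity fade gradually instead of vanishing at once."""
        self.intensity *= 0.5 ** (dt / self.half_life)

    def action_ready(self):
        """Action readiness: is the intensity high enough to trigger behaviour?"""
        return self.intensity >= self.threshold


if __name__ == "__main__":
    fear = EmotionProcess("fear", half_life=2.0, threshold=0.5)
    anger = EmotionProcess("anger", half_life=10.0, threshold=0.5)
    fear.stimulate(1.0)
    anger.stimulate(0.8)
    for _ in range(4):
        fear.tick()
        anger.tick()
    print(fear.action_ready(), anger.action_ready())
```

Each process keeps its own intensity, so a short-lived emotion can fall below its threshold while a longer-lasting one is still able to trigger behaviour.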

To make believable agents, showing expressions is very important. I did not go into Frijda's details on which emotion yields which expressions, but some general characteristics have been outlined: emotions have both communicational and behavioural purposes. As a means of communication, mimicked emotions may be used to deceive others. Such fake expressions "fall off" once the deception is over, in contrast to real emotions, which tend to decline with time.
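The difference between a real and a mimicked expression can be sketched as two intensity functions. The exponential form and the `half_life` parameter are my own assumptions; the text only says that real emotions tend to decline with time while fake expressions fall off.

```python
def real_intensity(initial: float, elapsed: float, half_life: float = 10.0) -> float:
    """Intensity of a real emotion, assumed to decay exponentially with time."""
    return initial * 0.5 ** (elapsed / half_life)


def mimicked_intensity(shown: float, deception_active: bool) -> float:
    """Intensity of a fake expression: held while deceiving, then dropped at once."""
    return shown if deception_active else 0.0
```

An agent that displays `mimicked_intensity` would therefore show an abrupt change of expression, which an observer could in principle use to tell the two apart.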

I have described Frijda's theories on emotions in this part of the text. In the next chapter I describe the Will architecture based on these ideas.

The role of cognition in emotion has had an ever-increasing importance in emotional science. Lazarus [41] (1991) introduced "cognitive emotion theory". Within this theory, beliefs are viewed as an important part of emotions. Emotions are defined as "states that comprise feelings, physiological changes, expressive behaviour, and inclinations to act" by Frijda et al. They define beliefs as follows: "Beliefs can be defined as states that link a person or group or object or concept with one or more attributes, and this is held by the believer as true". This is emphasised further in "appraisal theory", which says: "emotions result from how the individual believes the world to be, how events are believed to have come about, and what implications events are believed to have".

Beliefs also influence emotions, which is agreed upon throughout science, but the other direction, that emotions affect beliefs, has not been studied as much, even though people have long known the influence emotions may have on beliefs. Aristotle, for example, wrote: "the judgements we deliver are not the same when we are influenced by joy or sorrow, love or hate" [1].

The philosophy is that emotions help take the step from thinking to acting. This is not meant as a physiological process, as Edelman would describe it, but more as an observational theory. The point is that in psychology the psychological reality is much more interesting than the "true" reality.

Lazarus presents a cognitive-motivational-relational theory in his book, Emotion and Adaptation [41]. This combined term refers to how motives to act on relations arise and are compared. In short, Lazarus's theory is that person-environment relations bear possible actions that each have their own motivation. The individual judges which actions have the strongest motivations and then chooses these.
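Seen from a computer scientist's side, this selection step might look like the sketch below. The function name `strongest_actions`, the numeric motivations, and the example actions are hypothetical; Lazarus does not give a numeric model.

```python
from typing import Dict, List


def strongest_actions(motivations: Dict[str, float], count: int = 1) -> List[str]:
    """Return the `count` possible actions with the strongest motivation."""
    ranked = sorted(motivations, key=lambda action: motivations[action], reverse=True)
    return ranked[:count]


# Hypothetical motivations for one person-environment relation.
motivations = {"flee": 0.7, "freeze": 0.2, "confront": 0.4}
chosen = strongest_actions(motivations)
```

The interesting part of the theory is of course how the motivations arise, which the five principles below address; the selection itself is a plain comparison.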

He describes the theory with five principles:

The system principle: emotional processes involve many interdependent variables. Thus no emotions can be described from one single variable.

The process principle refers to the changes in emotional values over time. These will move like waves, as the values are interdependent. On the other hand there is a structure principle so that the same emotions arise from the same person-environment relationships.

The developmental principle is that emotions change during early childhood and perhaps also later in life.

The specificity principle says that each emotion has its own emotional process.

The relational meaning principle states that each emotion has a relation-specific importance. This means that some emotions have higher value under some person-environment relations than others, and that this is a result of the judgement of the personal harm and benefits of the emotions in the given situation.

The emotional meaning of the person-environment relationships is determined through appraisal. The function of appraisal is to integrate the individual's personality with the environmental conditions. The result is relational meanings of how the events influence the person's well-being. As a result of the weights of personal harm and benefits, an emotion is generated. This results in an action tendency, which is the equivalent of Frijda's action readiness. Thus appraisal involves decision-making components. It depends primarily on goal relevance and secondarily on remembered relationships such as blame and credit, on expectations, and on coping potential.
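The appraisal step can be sketched roughly as follows. This is only my illustration under strong assumptions: the numeric fields, the formula `goal_relevance * |benefit - harm|`, the coping threshold of 0.5, and the three tendency labels are invented for the example and are not part of Lazarus's theory. Blame, credit, and expectations are left out to keep the sketch short.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Appraisal:
    goal_relevance: float    # 0.0 = irrelevant to the person's goals, 1.0 = central
    harm: float              # judged personal harm, 0.0 to 1.0
    benefit: float           # judged personal benefit, 0.0 to 1.0
    coping_potential: float  # judged ability to handle the situation, 0.0 to 1.0


def appraise(a: Appraisal) -> Tuple[str, float]:
    """Weigh harm against benefit and derive an action tendency with an intensity."""
    valence = a.benefit - a.harm
    # Goal relevance comes first: an irrelevant event produces no emotion.
    intensity = a.goal_relevance * abs(valence)
    if intensity == 0.0:
        return ("none", 0.0)
    if valence > 0:
        tendency = "approach"
    elif a.coping_potential >= 0.5:
        tendency = "confront"
    else:
        tendency = "avoid"
    return (tendency, intensity)


tendency, intensity = appraise(
    Appraisal(goal_relevance=1.0, harm=0.75, benefit=0.25, coping_potential=0.2)
)
```

In this example the event is harmful and the person judges that it cannot be coped with, so the resulting tendency is to avoid.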

Goals are states that may be used as guidance for the behaviour of an individual. For instance, the goal of economic success might influence an individual to save all his money and perhaps even steal from others. Not all people find the same goals equally attractive, and even the same person might be attracted to different goals over time. Therefore it is very difficult to link events definitively with emotions.

Some goals are universally shared (based on the genes) and others are learned or the product of social development and vary a lot from group to group. Some societies treat things as desirable that other cultures would find worthless.

My definition of goals: a goal is any possible action to take. All individuals in the same situation will see the same set of possible goals. Every goal has a weight that determines how attractive it seems to the individual.

The self-image, or ego, is for Lazarus more than just consciousness. It is the entire mind and its conscious workings. In his words, the "ego organises motives and attitudes into hierarchies of importance, which may persist for a life time or a larger portion of it" [41]. Thus the ego is the mechanism that "weights" the goals and decides which goals should be the centre of activity and which goals should be suppressed.
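My definition of goals and the weighting role of the ego can be put together in a small sketch. The function `organise_goals`, the `threshold` parameter, and the example weights are assumptions of mine; the text only says that every goal has a weight and that some goals become the centre of activity while others are suppressed.

```python
from typing import Dict, List, Tuple


def organise_goals(weights: Dict[str, float],
                   threshold: float = 0.5) -> Tuple[List[str], List[str]]:
    """Rank goals by weight and split them into active and suppressed goals."""
    ranked = sorted(weights, key=lambda goal: weights[goal], reverse=True)
    active = [goal for goal in ranked if weights[goal] >= threshold]
    suppressed = [goal for goal in ranked if weights[goal] < threshold]
    return active, suppressed


# Two individuals see the same set of goals but weight them differently.
saver = {"economic success": 0.9, "friendship": 0.4, "leisure": 0.2}
socialite = {"economic success": 0.3, "friendship": 0.8, "leisure": 0.6}

saver_active, saver_suppressed = organise_goals(saver)
socialite_active, socialite_suppressed = organise_goals(socialite)
```

The set of goals is the same for both individuals, as in my definition; only the weights, and therefore the resulting hierarchy, differ.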

The new thing Lazarus has come up with is the idea of how the individual automatically weighs the possible emotions by the harm and good they could do. This has been widely adopted in the AI community, as I describe in the next chapter.

Psychologists Gerald L. Clore and Karen Gasper present a view on emotions that combines emotional feelings with the conscious mind. They divide emotions into two parts: non-conscious appraisal processes and their feedback, the conscious emotional feeling. The latter gives the link to the conscious mind as it lets the individual reflect on its inner state. As the sub-title suggests, it is a discussion of how feelings, both emotional and physical, influence thoughts. Compared to the previously described theories, this is a more straightforward approach to emotional theory.

Appraisal in Clore and Gasper's world is used in the same sense as in Lazarus's theory, described earlier (2.4.2). It is a non-conscious process that feeds back like or dislike for the specific observed state. This state is the subjective experience the individual has of the events and the possibilities to act.

To introduce the concept of focusing, they make a clear division between emotions and moods. Emotions are affective states that are aimed at a certain object. The focus of an emotion is narrowed towards this object, in contrast to moods, which do not have any connection to an object. A mood is out of focus, to use this metaphor. How narrow the focus is determines the intensity of the emotion towards the given object. This in turn emphasises the apparent importance attributed to the object, and as an ever-narrowing circle this may go on towards extreme emotional reactions.

The main idea in emotions is to control focus on objects to emphasise their importance either negatively or positively. Different emotions arise due to the differences in observed states. The objects have different goals that match differently with the individual's concerns. Here goals are meant as described in 2.4.2.1.1, and concerns should be seen as the things the agent has on his mind, what he is concerned about. That could be breathing or hunger.

The role of emotions is to act as an indicator of how to react in uncertain situations. The individual's feelings towards different situations then show through their behaviour. When evaluating observed states, people act as if they ask themselves, "how do I feel about it?" and thus decide on a response consistent with the emotional feelings. Thus the emotion has become information used in the individual's decision making. In Frijda's terms, Clore and Gasper suggest that appraisal data becomes privately accessible to the individual so he may consciously reflect on it. To complete the picture, facial expressions and motor functions, for example (see [28]), make this kind of information publicly accessible. I return to this later in chapter . This way of using feelings as information is called the affect-as-information model by Clore and Gasper, as it describes the connection between emotional behaviour and appraisal.

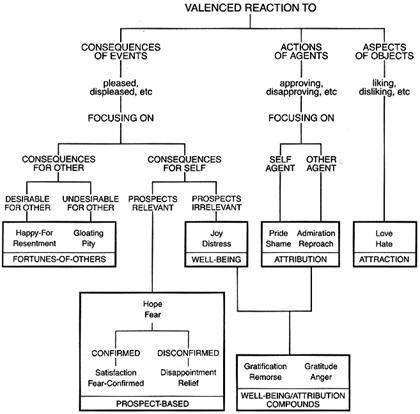

Figure 3, Ortony, Clore, and Collins' typology of emotions ([25], p27).

Clore and Gasper believe that emotions implicitly represent a model of the situations in which they occur. As shown in Figure 3, Ortony, Clore and Collins have mapped how observed states lead to 22 different emotions. Here there are three sources of values: goals, standards and attitudes. These result in three affective reactions: desire, approval and liking. These are differentiated into the 22 emotions, which thus represent the desirability or undesirability of the outcome of events relative to goals. Clore and Gasper would describe this structure as each emotion knowing its own place rather than the structure knowing where to put the emotions.

When an individual examines feedback from the appraisal processes, the emotions that are already active will affect the value that is read. On a dark rainy day a bad mood will make all appraisal feedback values seem worse than they are. This could lead to other bad emotions and, in the previously described circular motion, hurl the individual into a deep depression.
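The biased reading and the resulting downward circle can be illustrated with a small simulation. This is a sketch under my own assumptions: values lie between -1 (bad) and 1 (good), the bias is additive, and the parameters `sensitivity` and `carry` are hypothetical. Clore and Gasper do not specify such a formula.

```python
from typing import List


def clamp(value: float) -> float:
    """Keep a value inside the assumed range -1.0 (bad) to 1.0 (good)."""
    return max(-1.0, min(1.0, value))


def read_feedback(appraisal_value: float, mood: float,
                  sensitivity: float = 0.5) -> float:
    """The value the individual actually reads: the appraisal biased by mood."""
    return clamp(appraisal_value + sensitivity * mood)


def spiral(appraisal_value: float, mood: float, steps: int,
           carry: float = 0.5) -> List[float]:
    """Repeatedly read the same appraisal and let the felt value feed the mood."""
    history = []
    for _ in range(steps):
        felt = read_feedback(appraisal_value, mood)
        mood = clamp(mood + carry * felt)
        history.append(mood)
    return history


# A perfectly neutral event (0.0) read in a slightly bad mood (-0.25).
history = spiral(0.0, -0.25, steps=3)
```

Even though the event itself is neutral, the mood gets worse at every step, which is the circular motion described above.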

Clore and Gasper do not distinguish emotional from physical feelings in the way they lead to a change in behaviour. The physical feeling, however, is often more concrete and its cause is easier to determine. If one feels pain, pinpointing where the pain comes from is usually much easier than if it is an affective feeling that has a psychic root rather than a physical origin.

Moods start as emotions where the focus has widened away from the object to an extreme degree. They do have a specific origin, but as the focus has widened the connection to the object fades and finally vanishes as the emotion turns into a mood. This explains why it is so difficult to establish a connection between a mood and an object from the experienced feeling alone.

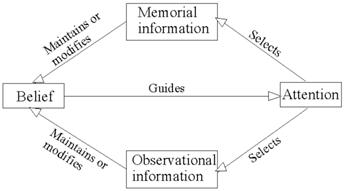

Clore and Gasper are aiming at how emotions play a role in beliefs. As explained by Dennett [11], the term belief is normally reserved for things such as religious belief or political belief. But for many philosophers and theorists of cognitive science it means what you think is true. For example, if you see something you would normally believe that it is where you see it. Also, if you are about to open a door and presume it is unlocked, it is because you believe it is unlocked. Similarly, in Clore and Gasper's sense beliefs are how the individual interprets an observed state. The beliefs thus guide the attention of the individual to different external or internal things. A belief is constructed from memorised and observed information, as illustrated in Figure 4.

Figure 4, the influence of attention and belief.

The figure shows two alternative cycles for a belief that guides attention to either memorised information or observed information. This in turn influences the belief, and so the loop goes on.

The idea, called the attention hypothesis, is that intensity of feeling activates the attention processes, which are then guided partly by beliefs towards concept-relevant information. This means that the emotional effect on perception is that emotions guide attention towards object-relevant information. This in turn leads to a change of behaviour and action selection. Emotions thus influence beliefs and thoughts and so indirectly change the way individuals behave, in contrast to the former theories, which describe how emotions influence behaviour more directly.
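A minimal sketch of the attention hypothesis might look as follows, assuming that intensity and belief-guided relevance are numbers between 0 and 1 that simply multiply. The function `attended`, the `threshold`, and the example items are hypothetical and only illustrate the idea.

```python
from typing import Dict, List


def attended(relevance: Dict[str, float], intensity: float,
             threshold: float = 0.3) -> List[str]:
    """Pieces of information that receive attention, most attended first.

    `relevance` maps a piece of information to how relevant the current
    belief judges it to be for the object of the emotion.
    """
    scores = {item: intensity * value for item, value in relevance.items()}
    selected = [item for item, score in scores.items() if score >= threshold]
    return sorted(selected, key=lambda item: scores[item], reverse=True)


# Hypothetical relevance values for an individual who believes a dog is dangerous.
relevance = {"growling dog": 1.0, "open gate": 0.5, "weather": 0.0}

weak_feeling = attended(relevance, intensity=0.25)
strong_feeling = attended(relevance, intensity=0.8)
```

With a weak feeling nothing reaches the threshold, while a strong feeling activates attention towards exactly the information the belief marks as relevant.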

Clore and Gasper work with a very straightforward or even "cold" approach to emotions. They believe that an emotion may be described entirely as an effect and a set of situations that lead to it. That is, each possible emotion implicitly contains a model of the situations in which it is experienced. This also brings about a very structured theory of emotions that seems very useful for AI implementation.

In their theory they have a simple non-conscious process (appraisal) that decides good or bad for the current observed state. The individual then reads this value as feedback, influenced by the feelings that are already in motion. This information is then available to the conscious cognitive processes, and the individual may use it to decide how to behave.

Another interesting thing is how they mix affective feelings with physical ones. In this sense if you solve the one problem with this theory you also have the key to solve the other.

In Ronald de Sousa's book The Rationality of Emotion, he investigates two aspects of emotions. Firstly he inspects the role of emotions in the "acquisition of beliefs and desires". Secondly he takes a more Darwinian perspective and asks what the rational functions of emotions are.

De Sousa's view on the role of emotions in modifying or adding behaviour is much like that of the other theorists I have described: emotions have their place in the relationship between the individual and an object. The same holds between the individual and an event, so that emotions may be due to both the current situation and the one to come. Such relationships may be inherited or learned, just as Lazarus claims [41]. Like Frijda, de Sousa sees that some emotions have some kind of time constraint so that they fade away. Here Frijda is more thorough, but their points are the same.

To explain the rational purpose of emotions, de Sousa believes that they are either psychological mechanisms or due to evolution. De Sousa points out that some scientists believe that emotions evolved at a very early stage of our evolution, even before the reptile stage. The idea is that the brain is in fact several brains, each trying to control the individual in its own direction. This results in conflicts that we interpret as emotions.