Camera System - AD Converter

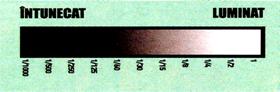

Sensors consist of pixels with photodiodes which convert the energy of the incoming photons into an electrical charge. That electrical charge is converted to a voltage which is amplified to a level at which it can be processed further by the Analog to Digital Converter (ADC). The ADC classifies ("samples") the analog voltages of the pixels into a number of discrete levels of brightness and assigns each level a binary label consisting of zeros and ones. A "one bit" ADC would classify the pixel values as either black (0) or white (1). A "two bit" ADC would categorize them into four (2^2) groups: black (00), white (11), and two levels in between (01 and 10). Most consumer digital cameras use 8 bit ADCs, allowing up to 256 (2^8) distinct values for the brightness of a single pixel.

This 8 bit Analog to Digital Converter (ADC) "samples" the analog voltages into 256 discrete levels which are assigned a binary label consisting of zeros and ones.
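The sampling step described above can be sketched in a few lines of Python. This is only an illustration: the function name `quantize`, the 0 to 1 volt input range, and the uniform spacing of the levels are assumptions, not a description of any particular camera's ADC.

```python
def quantize(voltage, v_max, bits):
    """Map an analog voltage in [0, v_max] to one of 2**bits discrete levels.

    Hypothetical helper: assumes evenly spaced levels across the input range.
    """
    levels = 2 ** bits
    # Clamp to the valid input range, then scale to a level index.
    clamped = min(max(voltage, 0.0), v_max)
    level = min(int(clamped / v_max * levels), levels - 1)
    # Return the level and its binary label, zero-padded to the bit depth.
    return level, format(level, f"0{bits}b")


for bits in (1, 2, 8):
    print(bits, quantize(0.6, 1.0, bits))
```

The same input voltage lands in a coarser or finer bucket depending on the bit depth, which is the only thing the extra bits change.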

Digital SLR cameras have sensors with a higher dynamic range which are able to capture subtle tonal gradations in the shadow, midtone, and highlight areas of the scene. Because those sensors output pixel voltages with very minute voltage differences they are usually equipped with 10 or 12 bit ADCs which allow for more precise categorization into 1,024 or 4,096 discrete levels respectively. Normally such cameras offer the option to save the 10 or 12 bits of data per pixel in RAW because JPEG only allows 8 bits of data per channel.

Often, marketing material advertises the bit depth of the ADC to suggest that the digital camera or scanner is able to output images with a high dynamic range. From the above it is easy to understand that this is only true if the sensor itself has sufficient dynamic range. As shown in the table below, if the sensor has a low dynamic range to begin with, using an ADC with a higher bit depth will only help to increase sales to uninformed buyers.

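| Sensor Dynamic Range | ADC Type | Image Tonal Range |
| --- | --- | --- |
| Low (e.g. around 256 tonal values) | 8 bit | 8 bit |
| Low (e.g. around 256 tonal values) | 10 or 12 bit | 8 bit |
| High (e.g. around 4,096 tonal values) | 8 bit | 8 bit |
| High (e.g. around 4,096 tonal values) | 10 or 12 bit | 10 or 12 bit if RAW |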
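The logic of the table is a "weakest link" rule, which the following sketch makes explicit. Expressing the sensor's tonal range in bits (8 bits for about 256 values, 12 bits for about 4,096) and the function name `image_tonal_bits` are assumptions made for illustration.

```python
JPEG_BITS = 8  # JPEG stores 8 bits per channel, as noted in the text


def image_tonal_bits(sensor_bits, adc_bits, save_raw):
    """Bits of useful tonal range in the saved image (hypothetical model)."""
    # The chain cannot resolve more levels than its weakest stage provides.
    captured = min(sensor_bits, adc_bits)
    # Saving as JPEG caps the result at 8 bits; RAW keeps what was captured.
    return captured if save_raw else min(captured, JPEG_BITS)


print(image_tonal_bits(8, 12, save_raw=True))    # low-range sensor, 12 bit ADC
print(image_tonal_bits(12, 12, save_raw=True))   # high-range sensor, 12 bit ADC, RAW
print(image_tonal_bits(12, 12, save_raw=False))  # same camera, saved as JPEG
```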

Camera System - AF Assist Lamp

Some manufacturers fit their cameras with a lamp (normally located beside or above the lens barrel) which illuminates the subject you are focusing on when shooting in low light conditions. This lamp assists the camera's focusing system in situations where other cameras' autofocus would likely fail. These lamps usually only work over a relatively short range, up to about 4 meters. Some lamps use infrared light instead of visible light, which is better for "candid" shots where you don't want to startle the subject. Notably, higher-end external flash systems feature their own focus assist lamps with far greater range.

The focus assist lamp on this Canon PowerShot S50 is located above the lens and beside the flash. It serves a double purpose. Firstly, it fires a beam of patterned white light in low light situations which helps the autofocus system to get a lock. Secondly, when the flash and anti-red-eye are enabled, it remains lit for as long as you half-press the shutter release to reduce the size of the subject's pupils and thus reduce the chance of red eye.

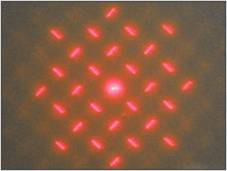

Hologram AF found on some Sony cameras works by projecting a crossed laser pattern onto the subject. This bright laser pattern helps the camera's contrast detect AF system to lock on to the subject. The system works well as long as the subject is large enough to be covered by several laser lines.

Camera System - AF Servo

Autofocus Servo refers to the camera's ability to continuously focus on a moving subject, a feature normally only found on digital SLRs. It is generally used by sports or wildlife photographers to keep a moving subject in focus.

Autofocus Servo is normally engaged by switching focus mode to "AI Servo" (Canon) or "Continuous" (Nikon) followed by half-pressing the shutter release. The camera will continue to focus based on its own focus rules (and your settings) while the shutter release is half-pressed or fully depressed (actually taking shots). It is worth noting that Autofocus Servo normally also puts the camera into "release priority" mode so that the camera will take a shot when the shutter release is depressed, regardless of the current AF status (good lock or still searching).

Camera System - Autofocus

All digital cameras come with autofocus (AF). In autofocus mode the camera automatically focuses on the subject in the focus area in the center of the LCD/viewfinder. Many prosumer and all professional digital cameras allow you to select additional autofocus areas which are indicated on the LCD/viewfinder.

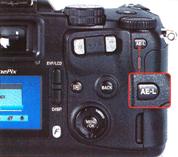

Example of a camera with a multi selector button (extreme right) to select the AF area spot. The selected area spot is indicated on the main LCD by a red bracket.

In "single AF" mode, the camera will focus when the shutter release button is pressed halfway. Some cameras offer a "continuous AF" mode whereby the camera focuses continuously until you press the shutter release button halfway. This shortens the lag time, but reduces battery life. Normally a focus confirmation light will stop blinking once the subject is in focus. Autofocus is usually based on detecting contrast and therefore works best on contrasty subjects and less well in low light conditions, in which case an AF assist lamp is very useful. Some cameras also feature manual focus.

Camera System - Batteries

Most digital cameras use either rechargeable Lithium-ion batteries or rechargeable/disposable AAs.

Disposable AAs

Given the high power consumption of digital cameras, it is economically and environmentally unjustified to use disposable batteries other than in emergency situations when your rechargeables are depleted. Disposable Lithium AAs are more expensive than Alkalines, but having about three times the power packed in half the weight, they are ideal to carry with you as a backup.

Rechargeable AAs (NiCd and NiMH)

NiMH (Nickel Metal Hydride) rechargeable AA batteries are much better than the older NiCd (Nickel Cadmium) AAs. They have no "memory effect" (explained below) and are more than twice as powerful. Capacities are constantly improving and differ per brand.

Rechargeable Lithium-ion Batteries

Li-ion (Lithium-ion) rechargeable batteries are lighter, more compact, but more expensive than NiMH batteries. They have no memory effect and always come in proprietary formats (there are no rechargeable Li-ion AAs). Some cameras also accept disposable Lithium batteries, such as 2CR5s or CR2s via an adapter, ideal for backup purposes.

Example of Lithium-ion battery and adapter to accommodate three CR2 Lithium batteries.

Charging

Fully charged batteries will gradually lose their charge, even when not used. So if you have not used your camera for a few weeks, make sure you bring a freshly charged battery along on your shoot. Charging NiCd batteries before they are fully discharged will reduce the maximum capacity of subsequent charges. Because the battery appears to "remember" the reduced capacity and the effect gets stronger when repeated often, it is called the "memory effect". It is therefore recommended to recharge these batteries only after they are fully depleted. To a lesser extent, this is also useful for NiMH or Lithium-ion batteries, although they have virtually no memory effect. Doing so will also increase the life span of the battery, which is determined by the number of "charge-discharge" cycles and depends on the type and brand.

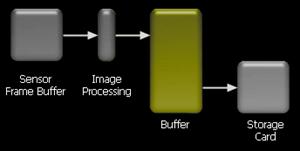

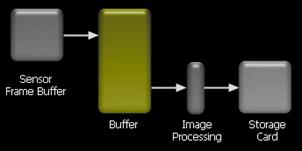

Camera System - Buffer

After the sensor is exposed, the image data will be processed in the camera and then written to the storage card. A buffer inside a digital camera consists of RAM which temporarily holds the image information before it is written out to the storage card. This shortens the "time between shots" and allows burst (continuous) shooting mode. The very first digital cameras didn't have any buffer, so after you took the shot you had to wait for the image to be written to the storage card before you could take the next shot. Currently, most digital cameras have relatively large buffers which allow them to operate as quickly as a film camera while writing data to the storage card in the background (without interrupting your ability to shoot).

The location of the buffer within the camera system is normally not specified, but affects the number of images that can be shot in burst mode. The buffer memory is located either before or after the image processing.

After Image Processing Buffer

With this method the images are processed and turned into their final output format before they are placed in the buffer. As a consequence, the number of shots which can be taken in a burst can be increased by reducing image file size (e.g. shoot in JPEG, reduce JPEG quality, reduce resolution).

Before Image Processing Buffer

In this method no image processing is carried out and the RAW data from the CCD is placed immediately in the buffer. In parallel to other camera tasks, the RAW images are processed and written to the storage card. In cameras with this type of buffer, the number of frames which can be taken in burst mode cannot be increased by reducing image file size. But the number of frames per second (fps) is independent of the image processing speed (until the buffer is full).
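The difference between the two buffer placements can be illustrated with a rough capacity estimate. Everything here is hypothetical: the function name, the 64 MB buffer, and the frame sizes are made-up figures, and the model ignores frames that are drained to the storage card while the burst is still in progress.

```python
def burst_capacity(buffer_mb, raw_frame_mb, output_frame_mb, buffer_before_processing):
    """Frames that fit in the buffer at once (simplified, hypothetical model)."""
    # A "before processing" buffer holds unprocessed sensor data, so the
    # chosen output format does not matter. An "after processing" buffer
    # holds finished files, so smaller files mean more frames.
    frame_mb = raw_frame_mb if buffer_before_processing else output_frame_mb
    return int(buffer_mb // frame_mb)


# Assumed figures: 64 MB buffer, 8 MB unprocessed frame, 2 MB or 1 MB JPEG.
for jpeg_mb in (2, 1):
    after = burst_capacity(64, 8, jpeg_mb, buffer_before_processing=False)
    before = burst_capacity(64, 8, jpeg_mb, buffer_before_processing=True)
    print(f"JPEG {jpeg_mb} MB: after-processing {after} frames, before-processing {before} frames")
```

Under these assumptions, halving the JPEG size doubles the burst length only for the after-processing design, which matches the behavior described above.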

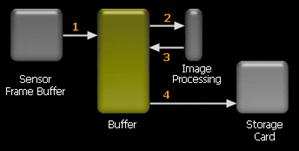

Smart Buffering

The "smart buffering" mentioned by Phil Askey in his Nikon D70 review combines elements of the above two buffering methods. Just like in the "Before Image Processing Buffer", the unprocessed image data are stored in the buffer (1), allowing for a higher fps. They are then processed (2) and converted into JPEG, TIFF or RAW. But instead of being written directly to the storage card, the processed images are stored in the buffer (3). Therefore, the image processing is not bottlenecked by the writing to the storage card, which happens in parallel. Moreover, it constantly frees up buffer space for new images since (3) takes up less space than (2), especially in the case of JPEG. Just like in the "After Image Processing Buffer", the output images are then written from the buffer to the storage card (4). But an important difference is that here the image processing happens in parallel with writing to the storage card. So the image processing of new images can continue while the other images are being written to the storage card. This means that you do not necessarily have to wait for the entire burst of frames to be written to the CF card before there is enough space to take another full burst.

Camera System - Burst (Continuous)

Burst or Continuous Shooting mode is the digital camera's ability to take several shots immediately one after another, similar to a film SLR camera with a motorwind. The speed (number of frames per second or fps) and total number of frames differs greatly between camera types and models. The fps is a function of the shutter release and image processing systems of the camera. The number of frames that can be taken is defined by the size of the buffer where images are stored before they are processed (in case of a before image processing buffer) and written to the storage card.

The number of frames per second (fps) and total number of frames that can be shot in burst mode is continuously improving and is of course higher as you move from consumer and prosumer digital compacts to prosumer and professional digital SLRs. Digital compacts typically allow 1 to 3 fps with bursts of up to about 10 images while digital SLRs have fps of up to 7 or more and can shoot dozens of frames in JPEG and RAW. Some even allow an initial burst of higher fps followed by a slower but continuous fps until the storage card is full.

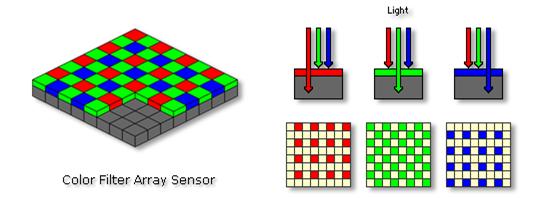

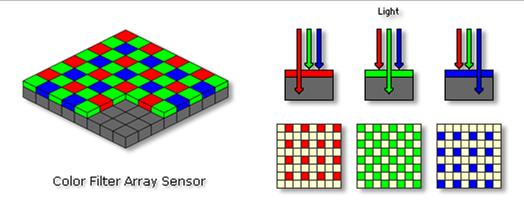

Camera System - Color Filter Array

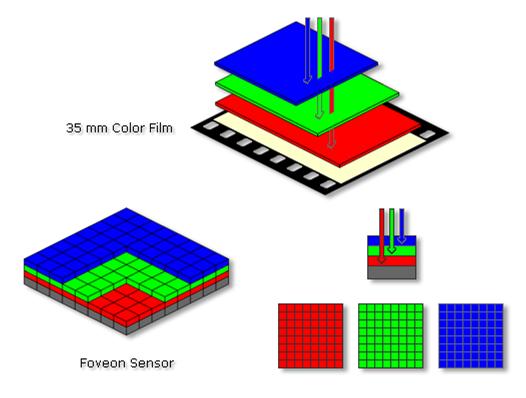

Each "pixel" on a digital camera sensor contains a light sensitive photodiode which measures the brightness of light. Because photodiodes are monochrome devices, they are unable to tell the difference between different wavelengths of light. Therefore, a "mosaic" pattern of color filters, a color filter array (CFA), is positioned on top of the sensor to filter out the red, green, and blue components of light falling onto it. The GRGB Bayer Pattern shown in this diagram is the most common CFA used.

Mosaic sensors with a GRGB CFA capture only 25% of the red and blue and just 50% of the green components of light.

Red channel pixels (25% of the pixels); green channel pixels (50% of the pixels); blue channel pixels (25% of the pixels); combined image

As you can see, the combined image isn't quite what we'd expect but is sufficient to distinguish the colors of the individual items in the scene. If you squint your eyes or stand away from your monitor your eyes will combine the individual red, green, and blue intensities to produce a (dim) color image.

Red, Green, and Blue channels after interpolation Combined image

The missing pixels in each color layer are estimated based on the values of the neighboring pixels and other color channels via the demosaicing algorithms in the camera. Combining these complete (but partially estimated) layers will lead to a surprisingly accurate combined image with three color values for each pixel.
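As a rough illustration of the two ideas above (the 25% / 50% / 25% sampling of a GRGB mosaic, and the estimation of missing values from neighbors), here is a minimal sketch. The tile layout, the function names, and the plain 3 x 3 neighbor average are all assumptions; real in-camera demosaicing algorithms are considerably more sophisticated and also use the other color channels.

```python
# One 2 x 2 GRGB tile, repeated across the sensor (layout assumed for illustration).
TILE = [["G", "R"],
        ["B", "G"]]


def filter_at(row, col):
    """Color of the filter covering the pixel at (row, col)."""
    return TILE[row % 2][col % 2]


def channel_fractions(height, width):
    """Share of sensor pixels that sit under each filter color."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[filter_at(r, c)] += 1
    total = height * width
    return {ch: n / total for ch, n in counts.items()}


def interpolate_channel(mosaic, channel):
    """Fill in one color layer: keep measured values, average neighbors elsewhere.

    Assumes the mosaic is at least 2 x 2 so every 3 x 3 window contains
    at least one measured sample of the requested channel.
    """
    h, w = len(mosaic), len(mosaic[0])
    layer = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if filter_at(r, c) == channel:
                layer[r][c] = float(mosaic[r][c])  # measured directly
                continue
            neighbors = [mosaic[rr][cc]
                         for rr in range(max(r - 1, 0), min(r + 2, h))
                         for cc in range(max(c - 1, 0), min(c + 2, w))
                         if filter_at(rr, cc) == channel]
            layer[r][c] = sum(neighbors) / len(neighbors)  # estimated
    return layer


print(channel_fractions(4, 4))
flat_gray = [[100] * 4 for _ in range(4)]
print(interpolate_channel(flat_gray, "R")[0])
```

On a uniformly gray scene the estimated values equal the measured ones, so the three reconstructed layers are identical; errors only appear where neighboring pixels differ, such as at edges.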

Many other types of color filter arrays exist, such as CYGM using CYAN, YELLOW, GREEN, and MAGENTA filters in equal numbers, the RGBE found in Sony's DSC-F828, etc.

Camera System - Connectivity

A digital camera's connectivity defines how it can be connected to other devices for the transfer, viewing, or printing of images, and to use the camera for remote capture.

Image Transfer

Early digital cameras used slow RS232 (serial) connections to transfer images to your computer. Most digital cameras now feature USB 1.1 connectivity, with higher end models offering USB 2.0 and FireWire (IEEE 1394) connectivity. Manufacturers generally bundle such cameras with cables and driver software.

Note that real transfer rates are always lower than the theoretical transfer rates indicated in the table below. Practical transfer speeds depend on your computer hardware and software configuration, the type of camera or reader, the type and quality of the storage card, whether you are reading or writing (reading is faster than writing), the average file size (a few large files transfer faster than many small ones), etc.

Instead of connecting the camera with a cable to your computer you can also insert the storage card into the PC Card slot of your notebook or a dedicated card reader.

| Theoretical Transfer Speeds | Transfer Rate |
| --- | --- |
| USB 2.0 - Low-Speed = USB 1.1 Minimum | 1.5 Mbps |
| USB 2.0 - Full-Speed = USB 1.1 Maximum | 12 Mbps |
| USB 2.0 - High-Speed | 480 Mbps |
| FireWire/IEEE1394 | 100-400 Mbps |

| Practical Transfer Speeds | Approx. Transfer Rate | Approx. Transfer Rate (Mbps) |
| --- | --- | --- |
| Digital Camera USB 1.1 | ~ 350 KB/s | |
| Digital Camera FireWire | ~ 500 KB/s | |
| USB 1.1 Card Reader | ~ 900 KB/s | ~ 7 Mbps |
| PC/PCMCIA Card Slot on notebook | ~ 1,300 KB/s | ~ 10 Mbps |
| USB 2.0 or FireWire Card Reader | ~ 3,200 KB/s | ~ 25 Mbps |

A transfer rate of 1 Megabit per second (Mbps) equals 128 Kilobytes per second (KB/s) when 1 Megabit is counted as 1,024 x 1,024 bits. At that rate you can transfer 7.5 Megabytes of information per minute, or about four 5 megapixel JPEG images.
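The unit conversion can be checked with a short sketch. It assumes the binary convention that yields the 128 KB/s figure (1 Megabit = 1,024 x 1,024 bits, 1 KB = 1,024 bytes); with decimal prefixes, 1 Mbps would instead be 125 KB/s. The function names are made up for this example.

```python
# KB/s per Mbps under the binary convention assumed here: 1,048,576 bits / 8 / 1,024.
KB_PER_S_PER_MBPS = 1024 * 1024 / 8 / 1024  # = 128.0


def mbps_to_kb_per_s(mbps):
    return mbps * KB_PER_S_PER_MBPS


def kb_per_s_to_mbps(kb_per_s):
    return kb_per_s / KB_PER_S_PER_MBPS


def mb_per_minute(mbps):
    # 60 seconds of transfer, converted from KB to MB (1 MB = 1,024 KB).
    return mbps_to_kb_per_s(mbps) * 60 / 1024


print(mbps_to_kb_per_s(1))   # KB/s at 1 Mbps
print(mb_per_minute(1))      # MB per minute at 1 Mbps
for kb_per_s in (900, 1300, 3200):
    print(kb_per_s, "KB/s is about", round(kb_per_s_to_mbps(kb_per_s), 1), "Mbps")
```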

Remote Capture

On some cameras, the connection to transfer images can also be used for remote capture and time lapse applications.

Video Output

Most digital cameras also provide video (and sometimes audio) output for connection to a TV or VCR. More flexible cameras allow you to switch output between the PAL and NTSC video standards. Cameras with infrared remote controls make it easy to do slideshows for friends and family from the comfort of your armchair.

Print Output

Some digital cameras, e.g. those with PictBridge and USB Direct Print support, allow you to print images directly from the camera to an enabled printer via a USB cable without the need for a computer. Although printing directly from a digital camera is convenient, it eliminates one of the key benefits of digital imaging: the ability to edit and optimize your images.

Camera System - Effective Pixels

Effective Number of Pixels

A distinction should be made between the number of pixels in a digital image and the number of sensor pixel measurements that were used to produce that image. In conventional sensors, each pixel has one photodiode which corresponds with one pixel in the image. A conventional sensor in, for instance, a 5 megapixel camera which outputs 2,560 x 1,920 images has an equal number of "effective" pixels, 4.9 million to be precise. Additional pixels surrounding the effective area are used for demosaicing the edge pixels, to determine "what black is", etc. Sometimes not even all sensor pixels are used. A classic example was Sony's DSC-F505V, which effectively used only 2.6 megapixels (1,856 x 1,392) out of the 3.34 megapixels available on the sensor. This was because Sony fitted the then new 3.34 megapixel sensor into the body of the previous model. As the sensor was slightly larger, the lens was not able to cover the whole sensor.
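The pixel counts quoted above follow directly from the image dimensions, as this small sketch shows. The helper name `megapixels` is made up for the example; the dimensions and the 3.34 megapixel total are the ones given in the text.

```python
def megapixels(width, height):
    """Number of pixels in a width x height image, in millions."""
    return width * height / 1_000_000


five_mp = megapixels(2560, 1920)   # the 5 megapixel example
f505v = megapixels(1856, 1392)     # effective area of the DSC-F505V
print(round(five_mp, 1), "million effective pixels")
print(round(f505v, 1), "million effective pixels")
# Share of the 3.34 megapixel sensor that was actually used.
print(f"{f505v / 3.34:.0%} of the sensor pixels used")
```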

So the total number of pixels on the sensor is larger than the effective number of pixels used to create the output image. Often this higher number is preferred to specify the resolution of the camera for marketing purposes.

Interpolated Number of Sensor Pixels

Normally, each pixel in the image is based on the measurement in one pixel location. For instance, a 5 megapixel image is based on 5 million pixel measurements, give or take the use of some pixels surrounding the effective area. Sometimes a camera with, for instance, a 3 megapixel sensor is able to create 6 megapixel images. Here, the camera calculates, or interpolates, 6 million pixels of information based on the measurement of 3 million effective pixels on the sensor. When shooting in JPEG mode, this in-camera enlargement is of better quality than one performed on your computer because it is done before JPEG compression is applied. Enlarging JPEG images on your computer also makes the undesirable JPEG compression artifacts more visible. However, the quality difference is marginal and you are basically dealing with a slower 3 megapixel camera which fills up your memory cards twice as fast, which is not a good trade-off. It is similar to what happens when you use a digital zoom. Interpolation cannot create detail you did not capture.

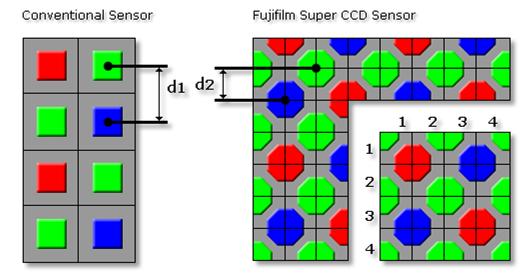

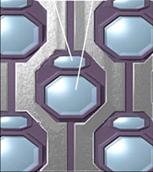

Fujifilm's Super CCD Sensors

Normally sensor pixels are square. Fujifilm's Super CCD sensors have octagonal pixels, as shown in this diagram. Therefore, the distance "d2" between the centers of two octagonal pixels is smaller than the distance "d1" between two conventional square pixels, resulting in larger (better) pixels.

However, the information has to be converted to a digital image with square pixels. From the diagram you can see that, for a 4 x 4 area of 16 square pixels, only 8 octagonal pixel measurements were used: 2 red pixels, 2 blue pixels, and 4 green pixels (1 full, 4 half, and 4 quarter green pixels). In other words, 6 megapixel Super CCD images are based on the measurement by only 3 million effective pixels, similar to the above interpolated example, but with the advantage of larger pixels. In practice the resulting image quality is equivalent to about 4 megapixel. The drawback is that you have to deal with double the file size (leading to more storage and slower processing), while enjoying a quality improvement equivalent to only 33% more pixels.
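The counting argument above can be written out explicitly. This sketch only restates the arithmetic from the text (the 4 x 4 area and the 4 megapixel "equivalent" quality are the article's figures); the variable and function names are made up.

```python
from fractions import Fraction

# Green measurements in the 4 x 4 area: 1 full, 4 half, and 4 quarter pixels.
green = 1 + 4 * Fraction(1, 2) + 4 * Fraction(1, 4)
measurements = 2 + 2 + green          # red + blue + green
ratio = measurements / 16             # measurements per output pixel


def effective_megapixels(output_megapixels):
    """Sensor measurements behind a Super CCD image, per the 8-in-16 ratio."""
    return output_megapixels * ratio


# Quality gain claimed in the text: about 4 megapixel from 3 million measurements.
gain = Fraction(4, 3) - 1

print(green, measurements, ratio)
print(effective_megapixels(6), f"{float(gain):.0%}")
```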

Camera System - EXIF

Besides information about the pixels of the image, most cameras store additional information such as the date and time the image was taken, aperture, shutter speed, ISO, and most other camera settings. These data, also known as "metadata", are stored in a "header". A common type of header is the EXIF (Exchangeable Image File) header. EXIF is a standard for storing information created by JEIDA (Japan Electronic Industry Development Association) to encourage interoperability between imaging devices. EXIF data are very useful because you do not need to worry about remembering the settings you used when taking the image. Later you can analyze on your computer which camera settings created the best results, so you can learn from your experience.

Most current image editing and viewing programs are able to display, and even edit the EXIF data. Note that EXIF data may be lost when saving a file after editing. It's one of the many reasons you should always preserve your original image and use "Save As" after editing it.

Example of EXIF 2.2 information extracted with ACDSee 6.0.3 which allows the data preceded by the "pencil" icon to be edited.

Camera System - Fill Factor

The fill factor indicates the size of the light sensitive photodiode relative to the surface of the pixel. Because of the extra electronics required around each pixel the "fill factor" tends to be quite small, especially for Active Pixel Sensors which have more per pixel circuitry. To overcome this limitation, often an array of microlenses is placed on top of the sensor.

Camera System - Lag Time

Lag time is the time between you pressing the shutter release button and the camera actually taking the shot. This delay varies quite a bit between camera models, and used to be the biggest drawback of digital photography. The latest digital cameras, especially the prosumer and professional SLRs, have virtually no lag time and react in the same way as conventional film cameras, even in burst mode.

In our reviews we record "Lag Time" and define it as three distinct timings:

Autofocus Lag (Half-press Lag): Many digital camera users prime the autofocus (AF) and autoexposure (AE) systems on their camera by half-pressing the shutter release. This lag is the amount of time between a half-press of the shutter release and the camera indicating an autofocus and autoexposure lock on the LCD/viewfinder (ready to shoot). This timing is normally the most variable as it is affected by the subject matter, current focus position, still or moving subject, etc.

Shutter Release Lag (Half to Full-press Lag): The amount of time it takes to take the shot (assuming you have already primed the camera with a half-press) by pressing the shutter release button all the way down.

Total Lag (Full-press Lag): The amount of time it takes from a full depression of the shutter release button (without performing a half-press of the shutter release) to the image being taken. This is more representative of the use of the camera in a spur of the moment "point and shoot" situation. The Total Lag is not equal to the sum of the Autofocus and Shutter Release Lags.

Camera System - LCD

LCD as Viewfinder

Digital compact cameras allow you to use the LCD as a viewfinder by providing a live video feed of the scene to be captured. The LCDs normally measure between 1.5" and 2.5" diagonally with typical resolutions between 120,000 and 240,000 pixels. The better LCDs have an anti-reflective coating and/or a reflective sheet behind the LCD to allow for viewing in bright outdoor daylight. Some LCDs can be flipped out of the body or angled up or down to make it easier to take low angle or high angle shots. The main LCD is sometimes supplemented by an electronic viewfinder which uses a smaller 0.5" LCD, simulating the effect of a TTL optical viewfinder. LCDs on digital SLRs normally do not support live previews and are only used to review images and change the camera settings.

Digital compact with a twist LCD

Fixed LCD on a digital SLR

LCD to Play Back Images

The LCD screen delivers one of the key benefits of digital photography: the ability to play back your images immediately after shooting. However, since only about 120,000 to 240,000 pixels are used to represent several million pixels in the original digital image, further magnification is needed to determine whether the image is sufficiently sharp or needs reshooting. Not all cameras offer magnification and the magnification factor differs per model. Some cameras allow basic editing functions such as rotating, resizing images, trimming video clips, etc. In playback mode you can also select an image from the thumbnail index.

Besides playback, many cameras allow you to "scroll" through the EXIF data, view the histogram, and even show areas with potential for overexposure, as shown in this animation.

LCD Used as Menu

The LCD is also used to change the camera settings via the camera buttons, often allowing you to adjust the brightness and color settings of the LCD itself. The main LCD is frequently supplemented by one or more monochrome LCDs (which use less battery power) on top and/or at the rear of the camera showing the most important camera and exposure settings.

Menu system displayed by the LCD

Example of a monochrome status LCD providing information such as battery and storage card status, exposure, focus mode, white balance, etc. Often a backlight can be activated via a button.

Camera System - Manual Focus

Manual focus disables the camera's built-in automatic focus system so you can focus the lens by hand (*). Manual focus is useful for low light, macro or special effects photography. It is very important when the autofocus system is unable to get a good focus lock, e.g. in low light situations. Note that some digital cameras allow you to manually focus only to a few preset distances. Higher-end digital cameras allow focusing using the normal focus ring on the attached lens, just like in conventional photography.

(*) In digital cameras, manual focus is often implemented on a fly-by-wire basis, whereby the manual inputs to focus in or out are relayed to the autofocus system which effects the change in focus.

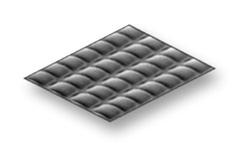

Camera System - Microlenses

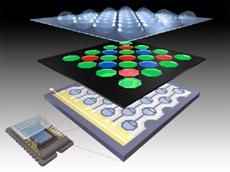

To overcome the limitations of a low fill factor, on certain sensors an array of microlenses is placed on top of the color filter array in order to funnel the photons of a larger area into the smaller area of the light sensitive photodiode.

Microlens funnels the light of a larger area into the photodiode (indicated in red) of the pixel

Electron microscope image of real microlenses

Camera System - Pixel Quality

The marketing race for "more megapixels" would like us to believe that "more is better". Unfortunately, it's not that simple. The number of pixels is only one of many factors affecting image quality and more pixels is not always better. The quality of a pixel value can be described in terms of geometrical accuracy, color accuracy, dynamic range, noise, and artifacts. It depends on the number of photodetectors that were used to determine it, the quality of the lens and sensor combination, the size of the photodiode(s), the quality of the camera components, the level of sophistication of the in-camera image processing software, the image file format used to store it, etc. Different sensor and camera designs make different compromises.

Geometrical Accuracy

Geometrical or spatial accuracy is related to the number of pixel locations on the sensor and the ability of the lens to match the sensor resolution. The resolution topic explains how this is measured at this site. Interpolation will not improve geometrical accuracy as it cannot create what was not captured.

Color Accuracy

Conventional sensors using a color filter array have only one photodiode per pixel location and will display some color inaccuracies around edges because the missing pixels in each color channel are estimated by demosaicing algorithms. Increasing the number of pixel locations on the sensor will reduce the visibility of these artifacts. Foveon sensors have three photodetectors per pixel location and therefore achieve a higher color accuracy by eliminating the demosaicing artifacts. Unfortunately their sensitivities are currently lower than those of conventional sensors and the technology is only available in a few cameras.

Dynamic Range

The size of the pixel location and the fill factor determine the size of the photodiode and this has a big impact on the dynamic range. Higher quality sensors are more accurate and will be able to output a larger dynamic range which can be preserved when storing the pixel values in a RAW image file. A variant of the Fujifilm Super CCD, the Super CCD SR, uses two photodiodes per pixel location with the objective of increasing the dynamic range. A more sensitive photodiode measures the shadows, while a less sensitive photodiode measures the highlights.

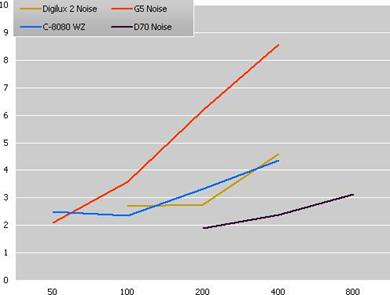

Noise

The pixel value consists of two components:

(1) what you want to see (the actual measurement of the value in the scene)

(2) what you do not want to see (noise).

The higher (1), and the lower (2), the better the quality of the pixel. The quality of the sensor and the size of its pixel locations have a great impact on noise and how it changes with increasing sensitivity.

Artifacts

Besides noise, there are many other types of artifacts that determine pixel quality.

Conclusion

Unfortunately there is no single standard objective quality number to compare image quality across different types of sensors and cameras. For instance, a 3 megapixel Foveon type sensor uses 9 million photodetectors in 3 million pixel locations. The resulting quality is higher than a 3 megapixel but lower than a 9 megapixel conventional image and it also depends on the ISO level you compare it at. Likewise, a 6 megapixel Fujifilm Super CCD image is based on measurements in 3 million pixel locations. The quality is higher than a 3 megapixel image but lower than a 6 megapixel image. A 6 megapixel digital compact image will be of lower quality than a 6 megapixel digital SLR image with larger pixels. To determine an "equivalent" resolution is tricky at best.

At the end of the day, the most important thing is that you are happy with the quality level that comes out of your camera for the purpose that you need it for (e.g. website, viewing on computer, printing, enlargements, publishing, etc.). I strongly recommend that you look beyond megapixels when purchasing a digital camera.

Camera System - Pixels

Sensor Pixels

Similar to an array of buckets collecting rain water, digital sensors consist of an array of "pixels" collecting photons, the minute energy packets of which light consists. The number of photons collected in each pixel is converted into an electrical charge by the light sensitive photodiode. This charge is then converted into a voltage, amplified, and converted to a digital value via the analog to digital converter, so that the camera can process the values into the final digital image.

As explained in the sensor sizes topic, sensors of digital compact cameras are substantially smaller than those of digital SLRs with a similar pixel count. As a consequence, the pixel size is substantially smaller. This explains the lower image quality of digital compact cameras, especially in terms of noise and dynamic range.

Typical sensor size of 3, 4, and 5 megapixel digital compact cameras

Typical sensor size of 6 megapixel digital SLRs

Typical pixel size of 4 megapixel compacts and 6 megapixel SLRs

Digital Image Pixels

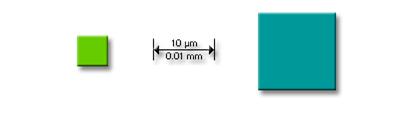

A digital image is similar to a spreadsheet with rows and columns which stores the pixel values generated by the sensor. Pixels in a digital image have no size until they are displayed on a monitor or printed. For instance, on a 4" x 6" print, each pixel in a 5 megapixel image (2,560 x 1,920 pixels) would only measure roughly 0.06mm, while on an 8" x 10" print, it would measure roughly 0.1mm.
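The size a pixel takes on paper follows from dividing the print dimension by the number of pixels along it. The sketch below is only an illustration: it assumes a 5 megapixel image is 2,560 pixels along its long edge (the dimensions used in the compression topic), ignores the cropping needed to match the print's aspect ratio, and uses a hypothetical function name.

```python
MM_PER_INCH = 25.4

def pixel_size_on_print_mm(print_edge_in: float, pixels_along_edge: int) -> float:
    """Size in mm that one image pixel occupies along a print edge."""
    return print_edge_in * MM_PER_INCH / pixels_along_edge

# Assumption: a 5 megapixel image is 2,560 pixels along its long edge.
LONG_EDGE_PX = 2560

for long_edge_in in (6, 10):  # long edges of 4" x 6" and 8" x 10" prints
    size_mm = pixel_size_on_print_mm(long_edge_in, LONG_EDGE_PX)
    print(f'{long_edge_in}" edge: {size_mm:.3f} mm per pixel')
```

The same pixel therefore grows in proportion to the print size, which is why enlargements reveal individual pixels sooner than small prints.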

Camera System - Sensor Size

Typical sensor size of 3, 4, and 5 megapixel digital compact cameras

Typical sensor size of 6 megapixel digital SLRs

This diagram shows the typical sensor sizes compared to 35mm film. The sensor sizes of digital SLRs are typically 40% to 100% of the surface of 35mm film. Digital compact cameras have substantially smaller sensors offering a similar number of pixels. As a consequence, the pixels are much smaller, which is a key reason for the image quality difference, especially in terms of noise and dynamic range.

Sensor Type Designation

Sensors are often referred to with a "type" designation using imperial fractions such as 1/1.8" or 2/3" which are larger than the actual sensor diameters. The type designation harks back to a set of standard sizes given to TV camera tubes in the 50's. These sizes were typically 1/2", 2/3" etc. The size designation does not define the diagonal of the sensor area but rather the outer diameter of the long glass envelope of the tube. Engineers soon discovered that for various reasons the usable area of this imaging plane was approximately two thirds of the designated size. This designation has clearly stuck (although it should have been thrown out long ago). There appears to be no specific mathematical relationship between the diameter of the imaging circle and the sensor size, although it is always roughly two thirds.
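To make the "roughly two thirds" rule of thumb concrete, the sketch below converts a type designation to its nominal tube diameter and compares two thirds of that diameter with the actual sensor diagonal. The function names are hypothetical, and the sensor dimensions are the 1/1.8" and 2/3" examples listed under Implementation Examples in this topic.

```python
import math

MM_PER_INCH = 25.4

def type_diameter_mm(type_in_inches: float) -> float:
    """Nominal tube diameter: the type designation converted to millimeters."""
    return type_in_inches * MM_PER_INCH

def approx_diagonal_mm(type_in_inches: float) -> float:
    """Rule-of-thumb sensor diagonal: roughly two thirds of the tube diameter."""
    return type_diameter_mm(type_in_inches) * 2 / 3

# (label, type in inches, sensor width in mm, sensor height in mm)
examples = [
    ('1/1.8"', 1 / 1.8, 7.2, 5.3),
    ('2/3"', 2 / 3, 8.8, 6.6),
]
for label, type_in, width, height in examples:
    actual = math.hypot(width, height)  # true diagonal of the imaging area
    print(f"{label}: dia {type_diameter_mm(type_in):.1f} mm, "
          f"two-thirds estimate {approx_diagonal_mm(type_in):.1f} mm, "
          f"actual diagonal {actual:.1f} mm")
```

The estimates land close to, but not exactly on, the real diagonals, which matches the observation that there is no exact mathematical relationship.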

Common Image Sensor Sizes

In the table below, "Type" refers to the commonly used type designation for sensors, "Aspect Ratio" refers to the ratio of width to height, "Dia." refers to the diameter of the tube size (this is simply the Type converted to millimeters), and "Diagonal / Width / Height" are the dimensions of the sensor's image-producing area.

| Type | Aspect Ratio | Dia. (mm) | Sensor Diagonal (mm) | Sensor Width (mm) | Sensor Height (mm) |
|---|---|---|---|---|---|
| 1/3.6" | | | | | |
| 1/3.2" | | | | | |
| 1/3" | | | | | |
| 1/2.7" | | | | | |
| 1/2.5" | | | | | |
| 1/2" | | | | | |
| 1/1.8" | | | | | |
| 2/3" | | | | | |
| 1" | | | | | |
| 4/3" | | | | | |
| 35 mm film | | n/a | | | |

Implementation Examples

Below is a list of a few digital cameras (as examples) and their sensor sizes.

| Camera | Sensor Type | Pixel count | Sensor size |
|---|---|---|---|
| Konica Minolta DiMAGE Xg | 1/2.7" CCD | 3.3 million | 5.3 x 4.0 mm |
| PowerShot S500 | 1/1.8" CCD | 5.0 million | 7.2 x 5.3 mm |
| Nikon Coolpix 8700 | 2/3" CCD | 8.0 million | 8.8 x 6.6 mm |
| | 2/3" CCD | 8.0 million | 8.8 x 6.6 mm |
| Sony DSC-828 | 2/3" CCD | 8.0 million | 8.8 x 6.6 mm |
| Konica Minolta DiMAGE A2 | 2/3" CCD | 8.0 million | 8.8 x 6.6 mm |
| Nikon D70 | CCD | 6.1 million | 23.7 x 15.6 mm |
| Canon EOS-1Ds | CMOS | 11.4 million | 36 x 24 mm |
| Kodak DCS-14n | CMOS | 13.8 million | 36 x 24 mm |
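The link between sensor size, pixel count, and pixel size can be estimated from the figures above. This is a rough sketch with a hypothetical function name: it assumes square pixels that tile the whole sensor area without gaps, so real pixel sizes will differ somewhat.

```python
import math

def pixel_pitch_um(width_mm: float, height_mm: float, pixel_count: float) -> float:
    """Approximate side length of one pixel in micrometers.

    Assumes square pixels covering the full sensor area with no gaps.
    """
    area_um2 = (width_mm * 1000) * (height_mm * 1000)
    return math.sqrt(area_um2 / pixel_count)

# Values taken from the implementation examples listed above.
cameras = {
    "PowerShot S500 (compact)": (7.2, 5.3, 5.0e6),
    "Nikon D70 (SLR)": (23.7, 15.6, 6.1e6),
}
for name, (width, height, count) in cameras.items():
    print(f"{name}: about {pixel_pitch_um(width, height, count):.1f} um per pixel")
```

Under these assumptions the SLR pixel is nearly three times wider than the compact's, so it has several times the light-collecting area.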

Camera System - Sensors

The New Foveon Sensors

The cone-shaped cells inside our eyes are sensitive to red, green, and blue: the "primary colors". We perceive all other colors as combinations of these primary colors. In conventional photography, the red, green, and blue components of light expose the corresponding chemical layers of color film. The new Foveon sensors are based on the same principle, and have three sensor layers that measure the primary colors, as shown in this diagram. Combining these color layers results in a digital image, basically a mosaic of square tiles or "pixels" of uniform color which are so tiny that the image appears uniform and smooth.

As a relatively new technology, Foveon sensors are currently only available in the Sigma SD9 and SD10 digital SLRs and have drawbacks such as relatively low light sensitivity.

The Current Color Filter Array Sensors

All other digital camera sensors only measure the brightness of each pixel. As shown in this diagram, a "color filter array" is positioned on top of the sensor to capture the red, green, and blue components of light falling onto it. As a result, each pixel measures only one primary color, while the other two colors are "estimated" based on the surrounding pixels via software. These approximations reduce image sharpness, which is not the case with Foveon sensors. However, as the number of pixels in current sensors increases, the sharpness reduction becomes less visible. Also, the technology is in a more mature stage and many refinements have been made to increase image quality.
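How a missing color might be "estimated" from surrounding pixels can be sketched with simple averaging. This is an illustration only: it assumes an alternating red/green/blue/green filter layout and plain neighbor averaging, whereas real cameras use more refined (and usually proprietary) methods. The function name and sample values are hypothetical.

```python
def estimate_green(raw, row, col):
    """Estimate green at a red or blue site by averaging the direct neighbours.

    In the assumed layout, the pixels directly above, below, left, and right
    of a red or blue site all sit under green filters.
    """
    neighbours = []
    for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < len(raw) and 0 <= c < len(raw[0]):
            neighbours.append(raw[r][c])
    return sum(neighbours) / len(neighbours)

# Raw brightness values; the filter colour over each pixel is noted per row.
raw = [
    [200, 90, 210],   # R G R
    [100, 50, 110],   # G B G
    [190, 80, 205],   # R G R
]
green_at_blue_site = estimate_green(raw, 1, 1)  # average of 90, 80, 100, 110
print(green_at_blue_site)
```

Because the estimate is an average of neighboring values, fine detail that falls between pixels gets smoothed, which is the sharpness reduction mentioned above.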

Active Pixel Sensors (CMOS, JFET LBCAST) versus CCD Sensors

Similar to an array of buckets collecting rain water, digital camera sensors consist of an array of "pixels" collecting photons, the minute energy packets of which light consists. The number of photons collected in each pixel is converted into an electrical charge by the photodiode. This charge is then converted into a voltage, amplified, and converted to a digital value via the analog to digital converter, so that the camera can process the values into the final digital image.

In CCD (Charge-Coupled Device) sensors, the pixel measurements are processed sequentially by circuitry surrounding the sensor, while in APS (Active Pixel Sensors) the pixel measurements are processed simultaneously by circuitry within the sensor pixels and on the sensor itself. Capturing images with CCD and APS sensors is similar to image generation on CRT and LCD monitors respectively.

The most common type of APS is the CMOS (Complementary Metal Oxide Semiconductor) sensor. CMOS sensors were initially used in low-end cameras but recent improvements have made them more and more popular in high-end cameras such as the Canon EOS D60 and 10D. Moreover, CMOS sensors are faster, smaller, and cheaper because they are more integrated (which makes them also more power-efficient), and are manufactured in existing computer chip plants. The earlier mentioned Foveon sensors are also based on CMOS technology. Nikon's new JFET LBCAST sensor is an APS using JFET (Junction Field Effect Transistor) instead of CMOS transistors.

Camera System - Thumbnail Index

When in playback mode, most digital cameras allow you to access the images and video clips on the storage card via a thumbnail index, an interactive contact sheet. Usually a 2 x 2 or 3 x 3 grid of images is used, and sometimes this can be specified by the user. Buttons on the camera allow you to navigate through the thumbnails or select them and, depending on the camera, perform basic operations such as hiding, deleting, organizing them into folders, viewing them as a slideshow, printing directly from the camera, etc. Selecting a thumbnail will show a larger version of the image that fills the whole LCD. Read more in the LCD topic of this glossary.

Typical 3 x 3 thumbnail index on a digital camera.

Menu that allows you to choose what you want to do with the selected image(s).

Camera System - Viewfinder

The viewfinder is the "window" you look through to compose the scene. We will discuss the four types of viewfinder commonly found on digital cameras.

Optical Viewfinder on a Digital Compact Camera

The optical viewfinder on a digital compact camera consists of a simple optical system that zooms at the same time as the main lens and has an optical path that runs parallel to the camera's main lens. These viewfinders are small and their biggest problem is framing inaccuracy. Since the viewfinder is positioned above the actual lens (often there is also a horizontal offset), what you see through the optical viewfinder is different from what the lens projects onto the sensor. This "parallax error" is most obvious at relatively small subject distances. In many instances the optical viewfinder only allows you to see a percentage (80 to 90%) of what the sensor will capture. For more accurate framing, it is recommended to use the LCD instead. For those who wear corrective glasses it's worth checking to see if the viewfinder has any diopter adjustment.

Because the optical path of the viewfinder runs parallel to the camera's main lens, what you see is different from what the lens projects onto the sensor.

Sometimes optical viewfinders have parallax error lines on them to indicate what the sensor will see at relatively small subject distances (e.g. below 1.5 meters or 5 feet).
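Why parallax error is most obvious at small subject distances can be shown with a little geometry. With parallel optical paths, the framing shift at the subject equals the offset between viewfinder and lens, while the width of the scene in view grows with distance. The sketch below uses a hypothetical function name and assumed values (a 3 cm offset and a 50 degree field of view), not figures from any particular camera.

```python
import math

def parallax_fraction(offset_m: float, distance_m: float, field_of_view_deg: float) -> float:
    """Framing shift as a fraction of the scene width seen at the subject distance."""
    scene_width_m = 2 * distance_m * math.tan(math.radians(field_of_view_deg) / 2)
    return offset_m / scene_width_m

# Assumed values for illustration only.
OFFSET_M = 0.03
FIELD_OF_VIEW_DEG = 50

for distance_m in (0.5, 1.5, 5.0):
    shift = parallax_fraction(OFFSET_M, distance_m, FIELD_OF_VIEW_DEG)
    print(f"{distance_m} m: framing is off by {shift:.1%} of the scene width")
```

The shift is a noticeable share of the frame at half a meter and negligible at five meters, which is why the error lines only matter for close subjects.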

LCD on a Digital Compact Camera (TTL)

The LCD on a digital compact camera shows in real time what is projected onto the sensor by the lens and therefore avoids the above parallax errors. This is also called "TTL" or "Through-The-Lens" viewing. Using the LCD for framing will shorten battery life and it may be difficult to frame accurately in very bright sunlight conditions, in which case you will have to resort to the optical or electronic viewfinder (see below). The LCDs on virtually all digital SLRs will only show the image after it is taken and give no live previews.

Example of digital compact with a twist LCD

Optical Viewfinder on a Digital SLR Camera (TTL)

The optical viewfinder of a digital SLR shows what the lens will project on the sensor via a mirror and a prism and therefore has no parallax error. When you depress the shutter button, the mirror flips up so the lens can expose the sensor. As a consequence, and due to sensor limitations, the LCD on most digital SLRs will only show the image after it is taken and give no live previews. In some models this is resolved by replacing the mirror with a prism (at the expense of incoming light). The optical viewfinder normally also features an LCD "status bar" along the bottom of the viewfinder relaying exposure and camera setting information.

The optical TTL viewfinder allows you to look "through the lens"

Optical TTL viewfinder on SLR with diopter adjustment (slider on the right side)

Electronic Viewfinder (EVF) on a Digital Compact Camera (TTL)

An electronic viewfinder (EVF) functions like the LCD on a digital compact camera and shows in real time what is projected onto the sensor by the lens. It is basically a small LCD (typically measuring 0.5" diagonally and 235,000 pixels) with a lens in front of it, which allows you to frame more accurately, especially in bright sunlight. It simulates in an electronic way the effect of the (superior) optical TTL viewfinders found on digital SLRs and doesn't suffer from parallax errors. Cameras with an EVF have an LCD as well, but no true optical viewfinder.

Example of an electronic viewfinder

Camera System - Storage Card

Storage cards are to digital cameras what films are to conventional cameras. They are removable devices which hold the images taken with the camera. Storage cards are keeping up with the rapidly changing digital camera market and are trending in the following direction:

The only downside of all this good news is a proliferation of storage card formats, making it more difficult to use cards across different cameras, card readers, and other devices (such as PDAs, MP3 players, etc). The image and table below give you an idea of how the sizes of typical formats compare:

| Card Type | Dimensions in mm | Volume in mm³ |
|---|---|---|
| CompactFlash II / Microdrive | 42.8 x 36.4 x 5.0 | |
| CompactFlash I | 42.8 x 36.4 x 3.3 | |
| Memory Stick | 50.0 x 21.5 x 2.8 | |
| Secure Digital | 32.0 x 24.0 x 2.1 | |
| SmartMedia | 45.0 x 37.0 x 0.8 | |
| MultiMediaCard | 32.0 x 24.0 x 1.4 | |
| Memory Stick Duo | 31.0 x 20.0 x 1.6 | |
| xD Picture Card | 25.0 x 20.0 x 1.7 | |
| Reduced Size MultiMediaCard | 18.0 x 24.0 x 1.4 | |
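The volume of each card follows directly from the listed dimensions. The sketch below simply multiplies them out, treating every card as a plain rectangular block (an assumption; real cards have notches and rounded corners). The names are hypothetical.

```python
# Dimensions in mm (width, height, thickness), copied from the table above.
CARDS = {
    "CompactFlash II / Microdrive": (42.8, 36.4, 5.0),
    "CompactFlash I": (42.8, 36.4, 3.3),
    "Memory Stick": (50.0, 21.5, 2.8),
    "Secure Digital": (32.0, 24.0, 2.1),
    "SmartMedia": (45.0, 37.0, 0.8),
    "MultiMediaCard": (32.0, 24.0, 1.4),
    "Memory Stick Duo": (31.0, 20.0, 1.6),
    "xD Picture Card": (25.0, 20.0, 1.7),
    "Reduced Size MultiMediaCard": (18.0, 24.0, 1.4),
}

def volume_mm3(width: float, height: float, thickness: float) -> float:
    """Volume of a card treated as a simple rectangular block."""
    return width * height * thickness

# List the cards from largest to smallest volume.
for name, dims in sorted(CARDS.items(), key=lambda item: -volume_mm3(*item[1])):
    print(f"{name}: {volume_mm3(*dims):.0f} mm3")
```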

CompactFlash

CompactFlash is a proven and reliable format compatible with many devices and generally ahead of other formats in terms of storage capacity. Capacities above 2.2 GB require that your camera supports "FAT32". CompactFlash comes in Type I and II which only differ in thickness (3.3mm and 5.0mm) with Type I being the most popular for flash memory, while Type II is used by microdrives.

Microdrives

Pioneered by IBM, microdrives are minute hard disks that come in CompactFlash Type II format and typically offer larger storage capacities at a cheaper cost per megabyte. However, CompactFlash has been catching up with higher capacity cards. Microdrives use more battery power, create more heat (which can result in more noise) and have a higher risk of failure because they contain moving parts.

SmartMedia

SmartMedia cards are larger in surface area than CompactFlash cards but much thinner, which makes them more fragile, and they are known to be less reliable. This format is gradually being phased out of the market, with virtually no new cameras being announced that support it.

Sony Memory Stick

Yet another standard, set by Sony but now also manufactured by others such as Lexar Media. The main drawback is that there are fewer cameras using this type of memory, although their number is gradually increasing. So if you buy another brand of camera later on, you may not be able to use your memory sticks. Memory sticks are more expensive per megabyte because there is less competition in the market. Although their capacity continues to increase, they tend to lag behind CompactFlash in terms of maximum capacity. Several variants exist such as Sony Memory Stick with Select Function, Sony Memory Stick Pro, Sony Memory Stick Duo, and Sony MagicGate.

Secure Digital (SD)

Supported by the SD Card Association (SDA), this compact type of memory card allows for fast data transfer and has built-in security functions to facilitate the secure exchange of content. These include copyright (music) protection, which makes SD cards more expensive than the similar MultiMediaCards which we will discuss next. SD cards have a small write-protection switch on the side, similar to what floppy disks have.

MultiMediaCard/SecureMultiMediaCard/Reduced Size MultiMediaCard (MMC/SecureMMC/RS-MMC)

Supported by the MultiMediaCard Association (MMCA), MultiMediaCards have the same surface but are 0.7mm thinner than SD cards and have two fewer pins. Hardware-wise MMC cards fit in SD card slots and many, but not all, SD devices and cameras will accept MMC cards as well. Check the specs before you buy. Two variants are SecureMMC, similar to SD, and Reduced Size MMC.

xD Picture Card

Another format aimed at very small digital cameras, developed by

Other Formats

Older formats include floppy disks and PCMCIA cards. A few models support writing onto 3-inch CD-R/RW discs. Some low-end cameras don't have removable storage cards but instead have built-in flash memory.

Digital Imaging - Aliasing

Aliasing refers to the jagged appearance of diagonal lines, edges of circles, etc. due to the square nature of pixels, the building blocks of digital images.

| Term | | Enlarged View (4X) | Comment |
|---|---|---|---|
| Aliased | | | Steps or "jaggies" are visible, especially when magnifying the image. |
| Anti-aliased | | | Anti-aliasing makes the edges look much smoother at normal magnifications. |

Anti-aliasing

Anti-aliasing makes the edges appear much smoother by averaging out the pixels around the edge. In this example some blue is added to the yellow edge pixels and some yellow is added to the blue edge pixels, thereby making the transition between the yellow circle and the blue background more gradual and smooth. Most image editing software packages have "anti-aliasing" options for typing fonts, drawing lines and shapes, making selections, etc. Anti-aliasing also occurs naturally in digital camera images and smooths out the "jaggies".
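The averaging at an edge can be sketched as a weighted mix of the two colors. This is a simplified illustration with hypothetical names: it assumes each edge pixel's color is weighted by the fraction of the pixel that the shape covers, which is one common approach rather than the method of any specific software.

```python
def blend(foreground, background, coverage):
    """Mix two RGB colours; coverage is the share of the pixel inside the shape (0 to 1)."""
    return tuple(
        round(coverage * f + (1 - coverage) * b)
        for f, b in zip(foreground, background)
    )

YELLOW = (255, 255, 0)
BLUE = (0, 0, 255)

# Edge pixels that the yellow circle covers only partly.
for coverage in (1.0, 0.75, 0.25, 0.0):
    print(coverage, blend(YELLOW, BLUE, coverage))
```

Pixels fully inside the circle stay yellow, pixels fully outside stay blue, and the partly covered ones take intermediate colors that soften the staircase.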

Digital Imaging - Artifacts

Artifacts refer to a range of undesirable changes to a digital image caused by the sensor, optics, and internal image processing algorithms of the camera. The table below lists some of the common digital imaging artifacts and links to the corresponding glossary items.

| | |
|---|---|
| Blooming | Maze Artifacts |
| Chromatic Aberrations | Moiré |
| Jaggies | Noise |
| JPEG Compression | Sharpening Halos |

Digital Imaging - Blooming

A pixel on a digital camera sensor collects photons which are converted into an electrical charge by its photodiode. As explained in the dynamic range topic, once the "bucket" is full, the charge caused by additional photons will overflow and have no effect on the pixel value, resulting in a clipped or overexposed pixel value. Blooming occurs when this charge flows over to surrounding pixels, brightening or overexposing them in the process. In the example below, the charge overflow of the overexposed pixels in the sky causes the dark pixels at the edges of the leaves and branches to be brightened and overexposed as well. As a result detail is lost. Blooming can also increase the visibility of chromatic aberrations.

Some sensors come with "anti-blooming gates" which drain away the overflowing charge so it does not affect the surrounding pixels, except for extreme exposures (very bright edge against a virtually black edge).

Digital Imaging - Color Spaces

The Additive RGB Colors

The cone-shaped cells inside our eyes are sensitive to red, green, and blue. We perceive all other colors as combinations of these three colors. Computer monitors emit a mix of red, green, and blue light to generate various colors. For instance, combining the red and green "additive primaries" will generate yellow. The animation below shows that if adjacent red and green lines (or dots) on a monitor are small enough, their combination will be perceived as yellow. Combining all additive primaries will generate white.

The Additive RGB Color Space

The Subtractive CMYk Colors

A print emits light indirectly by reflecting light that falls upon it. For instance, a page printed in yellow absorbs (subtracts) the blue component of white light and reflects the remaining red and green components, thereby creating a similar effect as a monitor emitting red and green light. Printers mix Cyan, Magenta, and Yellow ink to create all other colors. Combining these subtractive primaries will generate black, but in practice black ink is used, hence the term "CMYk" color space, with k standing for the last character of black.

The Subtractive CMYk Color Space

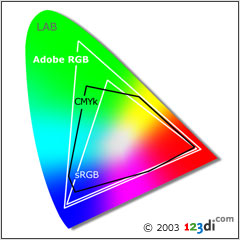

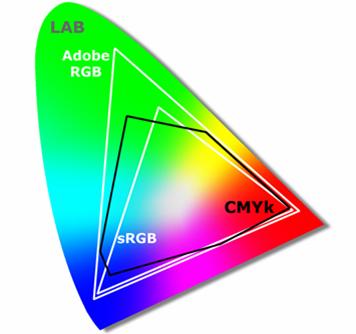

The LAB and Adobe RGB (1998) Color Spaces

Due to technical limitations, monitors and printers are unable to reproduce all the colors we can see with our eyes, also called the "LAB" color space, symbolized by the horseshoe shape in the diagram below. The group of colors an average computer monitor can replicate is called the (additive) sRGB color space. The group of colors a printer can generate is called the (subtractive) CMYk color space. There are many types of CMYk, depending on the device. From the diagram you can see that certain colors are not visible on an average computer monitor but printable by a printer and vice versa. Higher-end digital cameras allow you to shoot in Adobe RGB (1998), which is larger than sRGB and CMYk. This will allow for prints with a wider range of colors. However, most monitors are only able to display colors within sRGB.

Digital Imaging - Compression

Image files can be compressed in two ways: lossless and lossy.

Lossless Compression

Lossless compression is similar to what WinZip does. For instance, if you compress a document into a ZIP file and later extract and open the document, the content will of course be identical to the original. No information is lost in the process. Only some processing time was required to compress and decompress the document. TIFF is an image format that can be compressed in a lossless way.
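The round trip can be tried with Python's standard `zlib` module, which implements the same family of compression used in ZIP files. The sample data is made up for illustration: a run of identical blue pixels, which compresses very well.

```python
import zlib

# Made-up sample: 1,000 identical blue pixels, 3 bytes (R, G, B) each.
original = bytes([0, 0, 255] * 1000)

compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

print(f"original: {len(original)} bytes, compressed: {len(compressed)} bytes")
print("identical after decompression:", restored == original)
```

The compressed data is far smaller, yet decompressing it returns every byte of the original.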

Lossy Compression

Lossy compression reduces the image size by discarding information and is similar to summarizing a document. For example, you can summarize a 10 page document into a 9 page or 1 page document that represents the original, but you cannot create the original out of the summary as information was discarded during summarization. JPEG is an image format that is based on lossy compression.
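The irreversibility can be illustrated with a deliberately crude stand-in for lossy compression: rounding pixel values down to coarser steps. This is not how JPEG works internally; it only shows that once nearby values are merged, the original values cannot be recovered. The function name and sample values are hypothetical.

```python
def quantize(values, step):
    """Round each value down to a multiple of step, discarding the remainder."""
    return [value // step * step for value in values]

pixels = [12, 13, 14, 200, 203]
coarse = quantize(pixels, 8)

print(coarse)
# 12, 13, and 14 now share one value, so no decoder can tell them apart again.
```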

A Numerical Example

The table below shows how, on average, a five megapixel image (2,560 x 1,920 pixels) is compressed using the various image formats which are discussed in this glossary. Please note that in reality, the compressed file sizes will vary significantly with the amount of detail in the image. For example, the table shows 1.3 MB as file size for an 80% Quality JPEG five megapixel image. However, if the image has a lot of uniform surfaces (e.g. blue skies), it could be only 0.8 MB at 80% JPEG quality, and if it has a lot of fine detail, it could be 1.7 MB. The purpose of this table is to give a ballpark estimate.

| Image Format | Typical File Size in MB | Comment |
|---|---|---|
| Uncompressed TIFF | | 3 channels of 8 bits |
| Uncompressed 12-bit RAW | | 1 channel of 12 bits |
| Compressed TIFF | | Lossless compression |
| Compressed 12-bit RAW | | Lossless compression |
| 100% Quality JPEG | | Hard to distinguish from uncompressed |
| 80% Quality JPEG | 1.3 | Sufficient quality for 4" x 6" prints |
| 60% Quality JPEG | | Sufficient quality for websites * |
| 20% Quality JPEG | | Very low image quality |

\* For the web you would of course downsample the image to a lower resolution.
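The uncompressed sizes follow directly from the pixel count and the number of bits stored per pixel. The sketch below works this out for the 2,560 x 1,920 image used in this example. It assumes 1 MB means 1,000,000 bytes and ignores file headers and metadata, so real files will be slightly larger. The function name is hypothetical.

```python
def uncompressed_size_mb(width: int, height: int, channels: int, bits_per_channel: int) -> float:
    """Raw image data size in MB (1 MB taken as 1,000,000 bytes, headers ignored)."""
    total_bits = width * height * channels * bits_per_channel
    return total_bits / 8 / 1_000_000

WIDTH, HEIGHT = 2560, 1920

tiff_mb = uncompressed_size_mb(WIDTH, HEIGHT, channels=3, bits_per_channel=8)
raw_mb = uncompressed_size_mb(WIDTH, HEIGHT, channels=1, bits_per_channel=12)

print(f"Uncompressed TIFF: about {tiff_mb:.1f} MB")
print(f"Uncompressed 12-bit RAW: about {raw_mb:.1f} MB")
```

A RAW file stores one 12-bit value per pixel instead of three 8-bit values, which is why it comes out at about half the size of the uncompressed TIFF.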

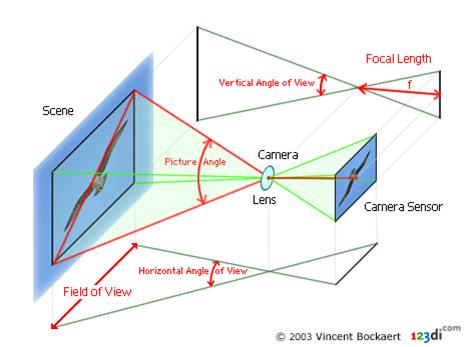

Digital Imaging - Digital Zoom

Optical zoom is the magnification factor between the minimum and maximum focal lengths of the lens. Consumer and prosumer cameras often also come with a digital zoom, which we will discuss based on an example of a 5 megapixel prosumer camera.

A. Scene shot with a 31mm lens

B. Scene shot with a 50mm lens

Changing the focal length from 31mm to 50mm (50/31=1.6X optical zoom) reduces the field of view. In image B, the sensor captures the red zone indicated in image A. In both cases the camera will store 5 megapixels of information into a 5 megapixel image.

C. 1.6X Digital Zoom

D. 1.6X Digital Zoom

A 1.6X digital zoom will only use the information of a 1,600 x 1,200 crop and discard the rest (2,560/1.6=1,600 and 1,920/1.6=1,200). In image C, the camera has captured the same field of view as in image B but only uses 2 megapixels out of the 5 megapixel resolution! If the digital camera has the option to output 1,600 x 1,200 images, the crop will be saved as a 2 megapixel image. In most cases, the 1,600 x 1,200 crop will be upsampled to the full resolution of the camera as indicated in image D. No additional information is created in the process and the quality of image D is clearly lower than image B.
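The crop arithmetic can be checked in a few lines. The function name is hypothetical, and the sketch assumes the camera crops the center of the frame evenly in both directions.

```python
def digital_zoom_crop(width: int, height: int, zoom: float):
    """Return the crop width, crop height, and megapixels used by a digital zoom."""
    crop_width = round(width / zoom)
    crop_height = round(height / zoom)
    return crop_width, crop_height, crop_width * crop_height / 1_000_000

crop_w, crop_h, megapixels = digital_zoom_crop(2560, 1920, 1.6)
print(f"{crop_w} x {crop_h} crop = {megapixels:.2f} megapixels")
print(f"share of sensor pixels used: {crop_w * crop_h / (2560 * 1920):.0%}")
```

Since the pixel count falls with the square of the zoom factor, even a modest 1.6X digital zoom leaves less than half of the sensor's pixels in use.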

To Use Or Not to Use Digital Zoom

So what is the best thing to do? If your purpose is to capture the information shown in image B, using a lens with focal length of 50mm is of course the best option. If you only have a 31mm lens available (or in general, if you reached the maximum optical zoom and need to zoom in more) there are three things you can do:

* If for some reason your intention is to upsample and you are shooting in JPEG, one benefit of digital zoom is that the upsampling in the camera is done before JPEG compression. If you shoot A, crop the 1,600 x 1,200 area, and then upsample to 2,560 x 1,920 on your computer, you will magnify the JPEG compression artifacts and the upsampled image will not look as good as image D. Because not all digital zooms are created equal, you may want to verify the quality differences with your particular digital camera before using digital zoom for this purpose.

Digital Imaging - Dynamic Range

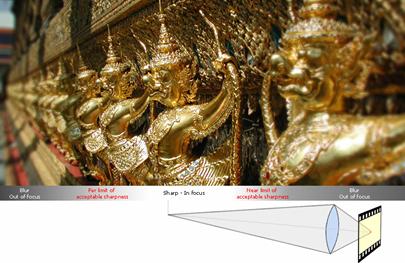

If you come out of a dark room and suddenly face bright sunlight, your eyes "hurt" initially. Or if you suddenly enter a dark room, it will take a while before you start to see anything as it takes some time for your eyes to adjust their sensitivity. Similarly a camera sensor has difficulty capturing bright and dark areas at the same time. Cameras with a large dynamic range are able to capture subtle tonal gradations in the shadow, midtone, and highlight areas of the scene. In technical terms, dynamic range is defined by the ratio of the highest non-white value and smallest non-black value a sensor can capture at the same time.

Pixel Size and Dynamic Range

We learned earlier that a digital camera sensor has millions of pixels collecting photons during the exposure of the sensor. You could compare this process to millions of tiny buckets collecting rain water. The brighter the captured area, the more photons are collected. After the exposure, the level of each bucket is assigned a discrete value as is explained in the analog to digital conversion topic. Empty and full buckets are assigned values of "0" and "255" respectively, and represent pure black and pure white, as perceived by the sensor. The conceptual sensor below has only 16 pixels. Those pixels which capture the bright parts of the scene, get filled up very quickly.

Once they are full, they overflow (this can also cause blooming). What flows over gets lost, as indicated in red, and the values of these buckets all become 255, while they actually should have been different. In other words, detail is lost. This causes "clipped highlights" as explained in the histogram section. On the other hand, if you reduce the exposure time to prevent further highlight clipping, as we did in the above example, then many of the pixels which correspond to the darker areas of the scene may not have had enough time to capture any photons and might still have value zero (hence the term "clipped shadows" as all the values are zero, while in reality there might be minor differences).
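The bucket analogy can be turned into a toy model. Everything in this sketch is an assumption chosen for illustration: the scene brightness values (photons per millisecond), the bucket capacity, and the function name. It ignores noise and blooming and only shows how both kinds of clipping arise.

```python
def expose(photon_rates, exposure_ms, full_well=50_000, levels=256):
    """Convert per-pixel photon rates into 8-bit values for a given exposure time."""
    values = []
    for rate in photon_rates:
        photons = min(rate * exposure_ms, full_well)  # anything above capacity overflows and is lost
        values.append(photons * (levels - 1) // full_well)
    return values

# Assumed scene, from deep shadow to bright sky, in photons per millisecond.
scene = [2, 10, 50, 1000, 5000]

long_exposure = expose(scene, exposure_ms=200)
short_exposure = expose(scene, exposure_ms=10)

print("long exposure: ", long_exposure)
print("short exposure:", short_exposure)
```

With the long exposure, the two brightest pixels both end up at 255 although one is five times brighter than the other (clipped highlights). With the short exposure, the two darkest pixels both end up at 0 (clipped shadows).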

It is easy to understand that one of the reasons digital SLRs have a larger dynamic range is that their pixels are larger. Larger pixels do not "fill up" so quickly, so there is more time to capture the dark pixels before the bright ones start to overflow.

Some Dynamic Range Examples

The dynamic range of the camera was able to capture the dynamic range of the scene. The histogram indicates that both shadow and highlight detail is captured.

Here the dynamic range of the camera was smaller than the dynamic range of the scene. The histogram indicates that some shadow and highlight detail is lost.

The limited dynamic range of this camera was used to capture highlight detail at the expense of shadow detail. The short exposure needed to prevent the highlight buckets from overflowing gave some of the shadow buckets insufficient time to capture any photons.

The limited dynamic range of this camera was used to capture shadow detail at the expense of highlight detail. The long exposure needed by the shadow buckets to collect sufficient photons resulted in overflowing of some of the highlight buckets.

Here the dynamic range of the scene is smaller than the dynamic range of the camera, typical when shooting images from an airplane. The histogram can be stretched to cover the whole tonal range with a more contrasty image as a result, but posterization can occur.

Summary

Cameras with a large dynamic range are able to capture shadow detail and highlight detail at the same time.

Digital Imaging - Gamma

Each pixel in a digital image has a certain level of brightness ranging from black (0) to white (1). These pixel values serve as the input for your computer monitor. Due to technical limitations, CRT monitors output these values in a nonlinear way:

Output = Input^Gamma

When unadjusted, most CRT monitors have a "gamma" of 2.5 which means that pixels with average brightness of 0.5, will be displayed with a brightness of 0.5^2.5 or 0.18, much darker. LCDs tend to have rather irregularly shaped output curves. Calibration via software and/or hardware ensures that the monitor outputs the image based on a predetermined gamma curve.

When gamma=1, the monitor responds in a linear way (Output = Input), but images will appear "flat" and overly bright, the other extreme. In reality, a gamma of around 2.0 will create a more desirable output which is neither too bright nor too dark and pleasing to our vision (which is non-linear as well). Windows and Mac computers use gammas of 2.2 and 1.8 respectively.

| Linear Gamma 1.0 | Nonlinear Gamma 2.2 | Nonlinear Gamma 2.5 |
|---|---|---|
| Input 0.5 -> Output 0.5 | Input 0.5 -> Output 0.22 | Input 0.5 -> Output 0.18 |
| Image looks too bright and "flat" | Image looks contrasty and pleasing to the eye | Image looks too dark (exaggerated example) |
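The output values in these examples can be reproduced by raising the input to the power of gamma. This is a minimal sketch with a hypothetical function name, assuming brightness values scaled from 0 (black) to 1 (white).

```python
def display_output(pixel_value: float, gamma: float) -> float:
    """Brightness shown by a monitor for an input between 0 (black) and 1 (white)."""
    return pixel_value ** gamma

for gamma in (1.0, 1.8, 2.2, 2.5):
    print(f"gamma {gamma}: input 0.5 -> output {display_output(0.5, gamma):.2f}")
```

Black (0) and white (1) are unchanged for every gamma, so only the tones in between get darker as gamma increases.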

Digital Imaging - Histogram

Histograms are the key to understanding digital images. This 10x4 mosaic contains 40 tiles which we could sort by color and then stack up accordingly. The higher the pile, the more tiles of that color in the mosaic. The resulting "histogram" would represent the color distribution of the mosaic.

In the sensor topic we learned that a digital image is basically a mosaic of square tiles or "pixels" of uniform color which are so tiny that it appears uniform and smooth. Instead of sorting them by color, we could sort these pixels into 256 levels of brightness from black (value 0) to white (value 255) with 254 gray levels in between. Just as we did manually for the mosaic, imaging software automatically sorted the pixels of the image below into 256 groups (levels) of "brightness" and stacked them up accordingly. The height of each "stack" or vertical "bar" tells you how many pixels there are for that particular brightness. "0" and "255" are the darkest and brightest values, corresponding to black and white respectively.

On this histogram each "stack" or "bar" is one pixel wide. Unlike the mosaic histograms, the 256 bars are stacked side by side without any space between them, which is why for educational purposes, the vertical bars are shown in alternating shades of gray, allowing you to distinguish the individual bars. There are no blank spaces between bars to avoid confusion with blank spaces caused by missing tones in the image. Normally all bars will be black as indicated in the second histogram.
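Sorting pixels into brightness levels amounts to counting how often each value occurs. The sketch below uses a hypothetical function name and a tiny made-up list of brightness values in place of a real image.

```python
def brightness_histogram(pixels, levels=256):
    """Count how many pixels fall on each brightness level from 0 to levels - 1."""
    counts = [0] * levels
    for value in pixels:
        counts[value] += 1
    return counts

# Made-up brightness values standing in for an image.
pixels = [0, 0, 12, 128, 128, 128, 200, 255]
histogram = brightness_histogram(pixels)

print("pixels at pure black (0):  ", histogram[0])
print("pixels at mid gray (128):  ", histogram[128])
print("pixels at pure white (255):", histogram[255])
```

The height of each bar in a displayed histogram is simply one of these counts, and tall bars at 0 or 255 are what the clipping discussed below looks like in numbers.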

Typical Histogram Examples

| Example | What the histogram shows |
|---|---|
| Correctly exposed image | This is an example of a correctly exposed image with a "good" histogram. The smooth curve downwards ending in 255 shows that the subtle highlight detail in the clouds and waves is preserved. Likewise, the shadow area starts at 0 and builds up gradually. |
| Underexposed image | The histogram indicates there are a lot of pixels with value 0 or close to 0, which is an indication of "clipped shadows". Some shadow detail is lost forever as explained in the dynamic range topic. Unless there is a lot of pure black in the image, there should not be that many pure black pixels. There are also very few pixels in the highlight area. |
| Overexposed image | The histogram indicates there are a lot of pixels with value 255 or close to 255, which is an indication of "clipped highlights". Subtle highlight detail in the clouds and waves is lost. There are also very few pixels in the shadow area. |
| Image with too much contrast | This image has both clipped shadows and highlights. The dynamic range of the scene is larger than the dynamic range of the camera. |
| Image with too little contrast | This image only contains midtones and lacks contrast, resulting in a hazy image. |
| Image with modified contrast | When "stretching" the above histogram via a Levels or Curves adjustment, the contrast of the image improves, but since the tones are redistributed over a wider tonal range, some tones are missing, as indicated in this "combed" histogram. Too much combing can lead to posterization as shown in the example below. |
| Image with posterization | Too much combing can lead to "posterization" as shown in this exaggerated conceptual example. |
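To see why stretching a low-contrast histogram produces a "combed" result, here is a minimal sketch of a linear Levels-style stretch. The function `stretch_levels` and the sample data are assumptions for illustration; actual Levels and Curves tools in editing software may work differently.

```python
def stretch_levels(pixels, low, high):
    """Linearly remap the range [low, high] to [0, 255], clamping values outside it."""
    out = []
    for v in pixels:
        scaled = round((v - low) * 255 / (high - low))
        out.append(min(255, max(0, scaled)))
    return out

# Hypothetical low-contrast image: every tone from 96 to 159 appears once (64 tones).
flat = list(range(96, 160))
stretched = stretch_levels(flat, 96, 159)

used = len(set(stretched))
print(used, 256 - used)  # tones in use vs. empty "gaps" in the stretched histogram
```

The 64 original tones are spread over the full 0 to 255 range, but no new tones are created, so most of the 256 bars stay empty. Those empty bars are the gaps of the comb.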

Keeping an Eye on the Histograms when Taking Pictures

Example of camera histogram review with overexposure warning

Most prosumer cameras and all professional cameras allow you to view the histogram on the camera's LCD so you can adjust the exposure and take the shot again if necessary. Some cameras come with an overexposure warning, whereby the overexposed areas blink, as indicated in this animation. In certain cameras the blinking areas are not necessarily overexposed, but an indication of potential overexposure.
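As a rough sketch of what such a check involves, the following Python code reports the share of clipped pixels and builds a mask of the areas that a warning could make blink. The names `clipping_report` and `overexposure_mask` and the thresholds are hypothetical; how a particular camera decides which areas blink is not specified here.

```python
def clipping_report(pixels, shadow_limit=0, highlight_limit=255):
    """Return the fraction of pixels at or below shadow_limit and at or above highlight_limit."""
    total = len(pixels)
    shadows = sum(1 for v in pixels if v <= shadow_limit)
    highlights = sum(1 for v in pixels if v >= highlight_limit)
    return shadows / total, highlights / total

def overexposure_mask(rows, highlight_limit=255):
    """Mark every pixel that reached the highlight limit (True = would blink)."""
    return [[v >= highlight_limit for v in row] for row in rows]

# Hypothetical 3 x 2 grayscale image.
rows = [[12, 200, 255],
        [0, 255, 255]]
flat_pixels = [v for row in rows for v in row]

shadow_share, highlight_share = clipping_report(flat_pixels)
mask = overexposure_mask(rows)
print(shadow_share, highlight_share)
print(mask)
```

Lowering `highlight_limit` a little (for example to 250) would flag areas that are close to clipping, which is one possible reading of a "potential overexposure" warning.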

Keeping an Eye on the Histograms when Editing

When editing images, it is important to keep an eye on the histogram to avoid the above-mentioned shadow and highlight clipping and posterization. The new Adobe Photoshop CS comes with a live histogram palette, as stated in my Photoshop CS review.

Summary

It is essential to keep an eye on the histogram when taking pictures and when editing them to ensure proper exposure and avoid losing shadow and highlight detail.

Digital Imaging - Interpolation

Interpolation (sometimes called resampling) is an imaging method to increase (or decrease) the number of pixels in a digital image. Some digital cameras use interpolation to produce a larger image than the sensor captured or to create digital zoom. Virtually all image editing software supports one or more methods of interpolation. How smoothly images are enlarged without introducing jaggies depends on the sophistication of the algorithm.

The examples below are all 450% increases in size of this 106 x 40 crop from an image.


Nearest Neighbor Interpolation

Nearest neighbor interpolation is the simplest method and basically makes the pixels bigger. The color of a pixel in the new image is the color of the nearest pixel of the original image. If you enlarge 200%, one pixel will be enlarged to a 2 x 2 area of 4 pixels with the same color as the original pixel. Most image viewing and editing software uses this type of interpolation to enlarge a digital image for the purpose of closer examination because it does not change the color information of the image and does not introduce any anti-aliasing. For the same reason, it is not suitable for enlarging photographic images because it increases the visibility of jaggies.
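For whole-number enlargements, nearest neighbor interpolation amounts to repeating pixels. The sketch below shows the 200% case from the text; the function name `enlarge_nearest` and the sample values are made up for illustration.

```python
def enlarge_nearest(rows, factor):
    """Enlarge by an integer factor: each source pixel becomes a factor x factor block."""
    out = []
    for row in rows:
        wide = [v for v in row for _ in range(factor)]   # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(factor))    # repeat each row vertically
    return out

# Hypothetical 2 x 2 grayscale image enlarged to 200%.
small = [[10, 200],
         [90, 30]]
big = enlarge_nearest(small, 2)
for row in big:
    print(row)
```

No new values are invented: the enlarged image contains exactly the same set of colors as the original, which is why edges stay hard and jaggies become more visible.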

Nearest Neighbor Interpolation

Bilinear Interpolation

Bilinear interpolation determines the value of a new pixel based on a weighted average of the 4 pixels in the nearest 2 x 2 neighborhood of the pixel in the original image. The averaging has an anti-aliasing effect and therefore produces relatively smooth edges with hardly any jaggies.
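The weighted average can be sketched as follows. The function `bilinear_sample` is a hypothetical helper that looks up the value at a fractional position in the original image; it assumes non-negative coordinates inside the image and simply repeats the border pixels at the edges.

```python
def bilinear_sample(rows, x, y):
    """Weighted average of the 2 x 2 neighborhood around the fractional position (x, y)."""
    height, width = len(rows), len(rows[0])
    x0 = min(int(x), width - 1)
    y0 = min(int(y), height - 1)
    x1 = min(x0 + 1, width - 1)   # right neighbor, clamped at the border
    y1 = min(y0 + 1, height - 1)  # lower neighbor, clamped at the border
    fx, fy = x - x0, y - y0       # how far we are towards the right / lower neighbor
    top = rows[y0][x0] * (1 - fx) + rows[y0][x1] * fx
    bottom = rows[y1][x0] * (1 - fx) + rows[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

# Hypothetical 2 x 2 grayscale image.
img = [[0, 100],
       [100, 200]]
print(bilinear_sample(img, 0.5, 0.5))   # halfway between all four pixels
print(bilinear_sample(img, 0.25, 0.0))  # a quarter of the way along the top row
```

The closer the new pixel lies to one of the four original pixels, the more weight that pixel receives. The resulting in-between values are what soften the edges.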

Bilinear Interpolation

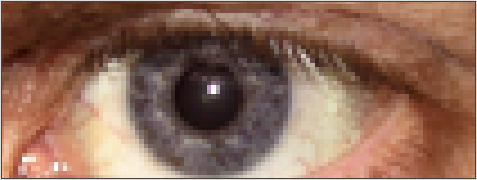

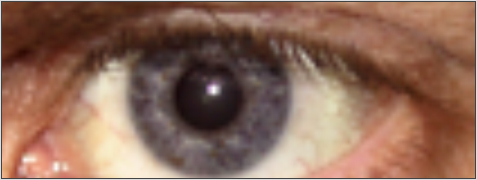

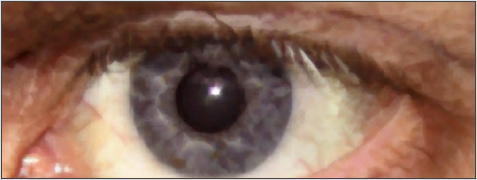

Bicubic interpolation

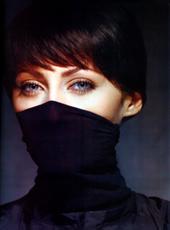

Bicubic interpolation is more sophisticated and produces smoother edges than bilinear interpolation. Notice for instance the smoother eyelashes in the example below. Here, the value of a new pixel is calculated with a bicubic function from the 16 pixels in the nearest 4 x 4 neighborhood of the pixel in the original image. This is the method most commonly used by image editing software, printer drivers and many digital cameras for resampling images. As mentioned in my review, Adobe Photoshop CS offers two variants of the bicubic interpolation method: bicubic smoother and bicubic sharper.
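One common way to implement a bicubic function over a 4 x 4 neighborhood is cubic convolution. The sketch below uses that approach with the parameter a = -0.5, which is an assumption for illustration only; the text does not say which bicubic variant a given program or camera uses, and the names `cubic_weight` and `bicubic_sample` are hypothetical.

```python
import math

def cubic_weight(t, a=-0.5):
    """Cubic convolution weight for a pixel at distance t (in pixels) from the sample point."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0

def bicubic_sample(rows, x, y):
    """Weighted sum of the 16 pixels in the 4 x 4 neighborhood around (x, y)."""
    height, width = len(rows), len(rows[0])
    x0, y0 = math.floor(x), math.floor(y)
    total = 0.0
    for j in range(-1, 3):
        for i in range(-1, 3):
            px = min(max(x0 + i, 0), width - 1)   # repeat border pixels at the edges
            py = min(max(y0 + j, 0), height - 1)
            weight = cubic_weight(x - (x0 + i)) * cubic_weight(y - (y0 + j))
            total += rows[py][px] * weight
    return total

# Hypothetical 4 x 4 image with a smooth horizontal ramp.
ramp = [[0, 10, 20, 30] for _ in range(4)]
print(bicubic_sample(ramp, 1.5, 1.5))  # between the columns holding 10 and 20
print(bicubic_sample(ramp, 2, 1))      # exactly on an original pixel
```

Unlike the bilinear weights, some of the cubic weights are slightly negative. This helps keep edges crisper, but it also means results can overshoot the original value range and may need clamping to 0 to 255.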

Bicubic Interpolation

Bicubic Smoother | Bicubic | Bicubic Sharper

Fractal interpolation

Fractal interpolation is mainly useful for extreme enlargements (for large prints) as it retains the shape of things more accurately, with cleaner, sharper edges and fewer halos and less blurring around the edges than bicubic interpolation. An example is Genuine Fractals Pro from The Altamira Group.

Fractal Interpolation

There are of course many other methods of interpolation but they're seldom seen outside of more sophisticated image manipulation packages.

Digital Imaging - Jaggies

Hardly a technical term, jaggies refers to the visible "steps" in diagonal lines or edges in a digital image. Also referred to as "aliasing", these steps are simply a consequence of the regular, square layout of the pixels.

Increasing Resolution Reduces the Visibility of Jaggies

Jaggies become less visible as the sensor or image resolution increases. The crops below are from pictures of a flower against a blue sky taken with digital cameras with different resolutions*. The low resolution cameras show very visible jaggies. As we increase the camera resolution from A to D, the steps become almost invisible in crop D. But they are still present when the image is enlarged, as shown in crop E.

Crops: A. | B. | C. | D. | E. Red zone in D

* Simulated results, only crop D is from a real camera.

Anti-aliasing Reduces the Visibility of Jaggies

Digital camera images undergo natural anti-aliasing because the pixels that measure the edges receive information from both sides of the edge. In this example the pixels that measure the yellow edge of the flower will also measure some blue sky resulting in values that are somewhere between yellow and blue. This makes the edges softer than in theoretical example F which has no anti-aliasing.
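This natural anti-aliasing can be imitated with a simplified one-dimensional model in which each sensor pixel records the average of the scene area it covers. The model, the function names and the brightness values below are assumptions for illustration and ignore optics, color and demosaicing.

```python
def sample_with_area_average(scene, pixel_size):
    """Each sensor pixel records the mean of the scene samples that fall on it."""
    return [sum(scene[i:i + pixel_size]) / pixel_size
            for i in range(0, len(scene) - pixel_size + 1, pixel_size)]

def sample_point(scene, pixel_size):
    """Theoretical case without anti-aliasing: one scene sample per pixel."""
    return [scene[i] for i in range(0, len(scene) - pixel_size + 1, pixel_size)]

# Hypothetical 1-D scene: 10 samples of "sky" (50) followed by 6 samples of "flower" (250).
scene = [50] * 10 + [250] * 6

averaged = sample_with_area_average(scene, 4)
pointwise = sample_point(scene, 4)
print(averaged)   # the pixel straddling the edge gets an in-between value
print(pointwise)  # hard step, as in a theoretical example without anti-aliasing
```

The pixel that covers both sky and flower ends up with a value between the two, which is what softens the edge.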

Crops: E. Red zone in D 8X enlarged | F. No anti-aliasing

If the sensor has a color filter array, the interpolation of the missing information (demosaicing) uses information of surrounding pixels and will therefore cause additional anti-aliasing.

Sharpening Increases the Visibility of Jaggies

Sharpening increases edge contrast (reduces anti-aliasing) and makes jaggies more visible, as shown in the sharpening topic. For the same reason, the jaggies in this rooftop against a bright sky are visible because the contrast of the image made the edge sharper.
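The effect on edge contrast can be illustrated with a simple one-dimensional unsharp mask. Unsharp masking is chosen here only as a familiar example of a sharpening method; the function names, the 3-pixel blur and the `amount` parameter are assumptions, not a description of any specific camera or program.

```python
def box_blur(values):
    """Average each value with its two neighbors (border values are repeated)."""
    last = len(values) - 1
    return [(values[max(i - 1, 0)] + values[i] + values[min(i + 1, last)]) / 3
            for i in range(len(values))]

def unsharp_mask(values, amount=1.0):
    """Add back the difference between the signal and its blurred copy, clamped to 0..255."""
    blurred = box_blur(values)
    return [min(255, max(0, v + amount * (v - b))) for v, b in zip(values, blurred)]

# Hypothetical anti-aliased edge: dark side, one in-between pixel, bright side.
edge = [50, 50, 50, 150, 250, 250, 250]
sharpened = unsharp_mask(edge)
print([round(v, 1) for v in sharpened])
```

The pixels on either side of the edge are pushed further apart (darker on the dark side, brighter on the bright side), so the step between neighboring pixels grows and the "stairs" of a diagonal edge stand out more.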

Digital Imaging - JPEG

The most commonly used digital image format is JPEG (Joint Photographic Experts Group). Universally compatible with browsers, viewers, and image editing software, it allows photographic images to be compressed by a factor of 10 to 20 compared to the uncompressed original with very little visible loss in image quality.

The Theory in a Nutshell

In a nutshell, JPEG rearranges the image information into color and detail information, compressing color more than detail because our eyes are more sensitive to detail than to color, making the compression less visible to the naked eye. Secondly, it sorts the detail information into fine and coarse detail and discards the fine detail first because our eyes are more sensitive to coarse detail than to fine detail. This is achieved by combining several mathematical and compression methods which are beyond the scope of this glossary but explained in detail in my e-book.
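The first step, separating brightness detail from color, can be sketched as below. The conversion uses the YCbCr formulas commonly used in JPEG files (JFIF); averaging the color channels over 2 x 2 blocks is one widespread way of "compressing color more than detail", shown here as an assumption rather than as the only option. The function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Split an RGB pixel into brightness (Y) and two color-difference values (Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_2x2(channel):
    """Replace each 2 x 2 block by its average, keeping a quarter of the samples."""
    return [[(channel[y][x] + channel[y][x + 1]
              + channel[y + 1][x] + channel[y + 1][x + 1]) / 4
             for x in range(0, len(channel[0]) - 1, 2)]
            for y in range(0, len(channel) - 1, 2)]

gray = rgb_to_ycbcr(128, 128, 128)        # a neutral gray carries no color information
cb_plane = [[100, 110],
            [120, 130]]                   # hypothetical 2 x 2 patch of Cb values
print([round(v, 3) for v in gray])
print(subsample_2x2(cb_plane))
```

In this sketch the brightness channel would be kept at full resolution while the two color channels are stored at a quarter of the samples.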

A Practical Example

JPEG allows you to make a trade-off between image file size and image quality. JPEG compression divides the image into squares of 8 x 8 pixels which are compressed independently. Initially these squares manifest themselves through "hair" artifacts around the edges. Then, as you increase the compression, the squares themselves will become visible, as shown in the examples below, which are magnified by a factor of 2.
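The following sketch shows, for a single 8 x 8 square, how fine detail can be discarded while coarse detail is kept. It uses a discrete cosine transform (DCT), the transform used by baseline JPEG, but simply zeroes the finest coefficients. Real JPEG encoders instead divide the coefficients by quantization tables, so treat this as a simplified illustration with hypothetical function names.

```python
import math

N = 8  # JPEG works on squares of 8 x 8 pixels

def dct_1d(values):
    """Orthonormal DCT-II of 8 values: index 0 is the average, higher indexes are finer detail."""
    return [(math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N))
            * sum(v * math.cos(math.pi * (2 * n + 1) * k / (2 * N))
                  for n, v in enumerate(values))
            for k in range(N)]

def idct_1d(coeffs):
    """Inverse of dct_1d."""
    return [sum((math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N))
                * c * math.cos(math.pi * (2 * n + 1) * k / (2 * N))
                for k, c in enumerate(coeffs))
            for n in range(N)]

def transform_2d(block, func):
    """Apply a 1-D transform to every row, then to every column."""
    rows = [func(row) for row in block]
    cols = [func(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]

def discard_fine_detail(block, keep):
    """Keep only coefficients whose combined frequency index u + v is below keep."""
    coeffs = transform_2d(block, dct_1d)
    kept = [[c if u + v < keep else 0.0 for v, c in enumerate(row)]
            for u, row in enumerate(coeffs)]
    return transform_2d(kept, idct_1d)

# Hypothetical 8 x 8 square containing a sharp vertical edge.
block = [[50] * 4 + [250] * 4 for _ in range(N)]

coarse = discard_fine_detail(block, keep=1)    # only the average survives
medium = discard_fine_detail(block, keep=4)    # some detail survives
print(round(coarse[0][0]), round(coarse[0][7]))
print([round(v) for v in medium[0]])
```

With only the coarsest coefficient kept, the whole square collapses to one flat tone, which corresponds to the visible 8 x 8 squares at very low quality. With a few more coefficients the edge returns, but with ripples next to it, which is roughly what the artifacts around edges look like.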

100% Quality JPEG is very hard to distinguish from the uncompressed original, which would typically take up 6 times more storage space.

80% Quality JPEG still looks very good, especially when bearing in mind that this crop is enlarged 2 times and that the file size is typically 10 times smaller than the uncompressed original. Notice some deterioration along the edges of the yellow crayon. Most digital cameras will use a higher quality level than 80% as their highest quality JPEG setting.

10% Quality JPEG shows serious image degradation with very visible 8 x 8 JPEG squares. The only benefit of this low quality level is that it illustrates what JPEG is doing in a more subtle way at higher quality levels. It is unlikely you will ever compress this aggressively. The example also shows that compression is most visible around the edges.

Practical Tips

Cameras usually have different JPEG quality settings, such as FINE,

The compression topic shows some numerical examples of file sizes.

Digital Imaging - Moiré

If the subject has more detail than the resolution of the camera*, a wavy moiré pattern can appear as shown in crop A. There is no moiré in crop B of an image of the same scene taken with a camera with a higher resolution. Anti-alias** filters reduce or eliminate moiré but also reduce image sharpness.

Crops: A. Example of moiré waves. | B. No moiré in this crop taken with a higher resolution camera.

Maze Artifacts

Sometimes, moiré can cause the camera's internal image processing to generate "maze" artifacts.

Example of maze artifacts

Technical footnotes for advanced users:

* In technical terms this means that the spatial frequency of the subject is higher than the resolution of the camera which we defined by the Nyquist frequency. This causes lower harmonics to appear (frequency aliasing) in the form of moiré waves.

** They are named anti-alias filters because they reduce "frequency aliasing" mentioned in the above footnote. Because anti-alias filters tend to soften images, they incidentally have an indirect "image anti-aliasing" effect, but that is not the reason they are named this way.
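The frequency aliasing behind moiré can be demonstrated with a one-dimensional pattern. In the sketch below, a pattern with 9 cycles per unit length is sampled by only 10 pixels per unit length, so it lies above the Nyquist frequency of 5 cycles. The function names and numbers are chosen purely for illustration.

```python
import math

def sample_pattern(cycles, samples_per_unit, count):
    """Sample a cosine pattern with the given number of cycles per unit length."""
    return [math.cos(2 * math.pi * cycles * n / samples_per_unit) for n in range(count)]

def apparent_frequency(cycles, samples_per_unit):
    """Frequency the sampled pattern appears to have after aliasing."""
    return abs(cycles - round(cycles / samples_per_unit) * samples_per_unit)

fine = sample_pattern(9, 10, 20)     # too fine for 10 samples per unit
coarse = sample_pattern(1, 10, 20)   # a much coarser pattern
print(apparent_frequency(9, 10))
print(max(abs(a - b) for a, b in zip(fine, coarse)))  # the two sets of samples coincide
```

Once sampled, the fine pattern is indistinguishable from a much coarser one. In an image, that coarse false pattern is what shows up as moiré waves. A pattern below the Nyquist frequency, such as 3 cycles per unit here, keeps its true frequency.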

Digital Imaging - Noise Reduction

For the past 4 years I have spent hundreds of hours researching methods to reduce noise in digital camera images. The key to noise reduction is to reduce or eliminate the noise without deteriorating other aspects of the image. Many freeware and even paid solutions negatively affect image sharpness, introduce wavy patterns in uniform surfaces and/or make them look "too uniform" (a bit like in a watercolor painting).
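The trade-off between removing noise and keeping edges sharp can be shown with two very basic filters applied to a one-dimensional row of pixels. These are textbook filters picked for illustration only; they are not the methods from my e-book, and the function names and sample values are made up.

```python
def mean_filter(values):
    """Replace each value by the average of itself and its two neighbors."""
    last = len(values) - 1
    return [(values[max(i - 1, 0)] + values[i] + values[min(i + 1, last)]) / 3
            for i in range(len(values))]

def median_filter(values):
    """Replace each value by the middle value of itself and its two neighbors."""
    last = len(values) - 1
    return [sorted([values[max(i - 1, 0)], values[i], values[min(i + 1, last)]])[1]
            for i in range(len(values))]

# Hypothetical row of pixels: one noisy pixel (90) in a dark area, then a sharp edge.
noisy = [50, 50, 50, 90, 50, 50, 250, 250, 250, 250]

smoothed = mean_filter(noisy)
cleaned = median_filter(noisy)
print([round(v) for v in smoothed])  # noise is only spread out and the edge is blurred
print(cleaned)                       # noise is removed and the edge stays sharp
```

Simple averaging smears both the noise and the edge, whereas the median removes the isolated noisy pixel and leaves the edge intact in this example. Real images are far less forgiving, which is why preserving edges and fine texture at the same time is the hard part.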

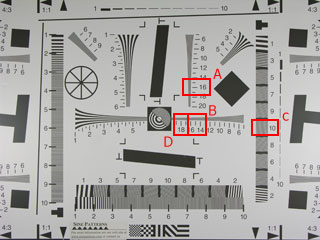

The crops below, taken from a prosumer camera image, illustrate the problems of lost edge sharpness and wavy patterns typical of many noise reduction methods and compare the results with the methods described in my e-book. The results are shown both for the color image and for the red channel. The areas indicated by the red squares are enlarged 4 times in the row below. On some monitors, the noise may not be very visible in the original. In that case, look at the red channel crops instead.

Original crops (1X) and enlarged red squares (4X) below

| Original | Bad Noise Reduction | Good Noise Reduction (123di) |
|---|---|---|
| Notice the red color noise in the blue sky of the original, more visible in the red channel (*). | Bad noise reduction methods remove noise but blur the edge as shown in the 4X crop. | Good noise reduction methods remove noise but preserve edge sharpness. |

|

|